ScreenRecorder Code Walkthrough

Last updated: 1 year ago

Reference

- ScreenRecorder

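- Android screen-recording live streaming (Part 3): encoding video with MediaProjection + VirtualDisplay + librtmp + MediaCodec and publishing it to an RTMP server

- Capturing the live screen image on Android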

- MediaCodec

- MediaFormat

- MediaProjection

- Buffer

- ByteBuffer

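- SPS and PPS in the H.264 bitstream explained

- H.264 NALU syntax structure

- Obtaining SPS and PPS from H.264 (with source code)

- A detailed analysis of the FLV format through RTMP play

- Converting the FLV stream in RTMP to standard H.264 and AAC

- libRTMP usage guide

- Understanding RTMP thoroughly

- Notes on implementing the RTMP protocol for live stream publishing

- RTMPdump (libRTMP) source code analysis 8: sending messages (Message)

- Simple iOS live stream publishing: FLV encoding and audio/video timestamp synchronization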

MyApplication

Loads the screenrecorderrtmp JNI library and saves the Context.

LaunchActivity

Requests permissions, and sets up buttons that navigate to the screen-capture screen or the camera-capture screen.

ScreenRecordListenerService

Notifies that screen capture has started, and listens for streaming progress.

Packager

AvcPackager

generateAvcDecoderConfigurationRecord(MediaFormat mediaFormat)

Encodes the AVCDecoderConfigurationRecord in the format FLV requires for AVC.

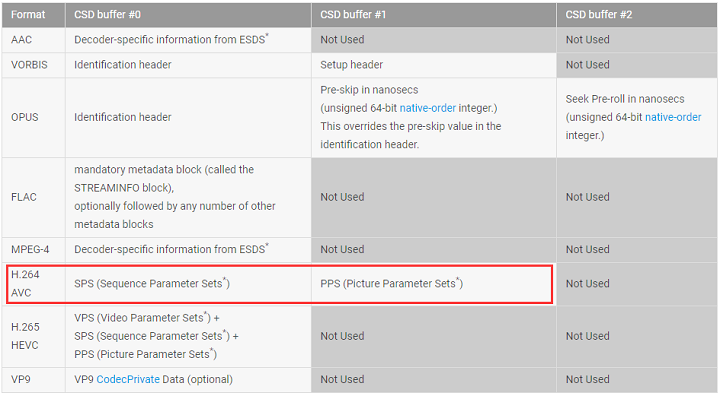

The figure shows that, for AVC, the SPS and PPS correspond to csd-0 and csd-1, respectively.
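To make the layout concrete, here is a minimal plain-Java sketch of the bytes such a record contains, following the AVCDecoderConfigurationRecord layout in ISO/IEC 14496-15. It assumes exactly one SPS and one PPS, that each may begin with an Annex-B start code (as the csd-0/csd-1 buffers from an Android AVC encoder typically do), and 4-byte NALU length fields. The class `AvcConfigRecord` and its methods are hypothetical, not the project's code. On Android, the two arrays would be read from `mediaFormat.getByteBuffer("csd-0")` and `mediaFormat.getByteBuffer("csd-1")`.

```java
import java.io.ByteArrayOutputStream;

// Hypothetical helper: a sketch of the AVCDecoderConfigurationRecord layout, not the project's code.
final class AvcConfigRecord {
    // Strips a leading 4-byte (00 00 00 01) or 3-byte (00 00 01) Annex-B start code, if present.
    static byte[] stripStartCode(byte[] nal) {
        int offset = 0;
        if (nal.length >= 4 && nal[0] == 0 && nal[1] == 0 && nal[2] == 0 && nal[3] == 1) {
            offset = 4;
        } else if (nal.length >= 3 && nal[0] == 0 && nal[1] == 0 && nal[2] == 1) {
            offset = 3;
        }
        byte[] out = new byte[nal.length - offset];
        System.arraycopy(nal, offset, out, 0, out.length);
        return out;
    }

    // Builds the record from one SPS (csd-0) and one PPS (csd-1); assumes 4-byte NALU lengths.
    static byte[] build(byte[] csd0, byte[] csd1) {
        byte[] sps = stripStartCode(csd0);
        byte[] pps = stripStartCode(csd1);
        if (sps.length < 4) throw new IllegalArgumentException("SPS too short");
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(0x01);                     // configurationVersion
        out.write(sps[1]);                   // AVCProfileIndication (profile_idc)
        out.write(sps[2]);                   // profile_compatibility (constraint flags)
        out.write(sps[3]);                   // AVCLevelIndication (level_idc)
        out.write(0xFF);                     // 6 reserved bits + lengthSizeMinusOne = 3
        out.write(0xE1);                     // 3 reserved bits + numOfSequenceParameterSets = 1
        out.write((sps.length >> 8) & 0xFF); // sequenceParameterSetLength, big-endian
        out.write(sps.length & 0xFF);
        out.write(sps, 0, sps.length);
        out.write(0x01);                     // numOfPictureParameterSets = 1
        out.write((pps.length >> 8) & 0xFF); // pictureParameterSetLength, big-endian
        out.write(pps.length & 0xFF);
        out.write(pps, 0, pps.length);
        return out.toByteArray();
    }
}
```

The profile, compatibility, and level bytes are copied straight from the SPS (bytes 1 to 3 after the NAL header), which is why only the SPS has to be inspected.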

FlvPackager

setAvcTag(byte[] dst, int pos, boolean isAvcSequenceHeader, boolean isIdr, int readDataLength)

Encodes the AVC tag body in the format FLV requires.
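The AVC tag body starts with a 5-byte prefix defined by the FLV specification's VIDEODATA and AVCVIDEOPACKET structures: FrameType and CodecID share the first byte, followed by AVCPacketType and a 24-bit CompositionTime. The sketch below writes only that prefix. The helper `writeAvcTagHeader` is hypothetical and does not mirror the exact signature of `setAvcTag`; it assumes no B-frames, so CompositionTime is always 0. For a sequence header, the payload that follows is the AVCDecoderConfigurationRecord; for a NALU packet, it is length-prefixed NAL units.

```java
// Hypothetical helper: sketches the FLV AVC tag body prefix, not the project's setAvcTag.
final class FlvAvcTag {
    static final int AVC_TAG_HEADER_SIZE = 5;

    // Writes the 5-byte prefix at dst[pos..pos+4] and returns the number of bytes written.
    // CompositionTime is written as 0, which assumes no B-frames (PTS == DTS).
    static int writeAvcTagHeader(byte[] dst, int pos, boolean isSequenceHeader, boolean isIdr) {
        int frameType = (isSequenceHeader || isIdr) ? 1 : 2; // 1 = key frame, 2 = inter frame
        int codecId = 7;                                      // 7 = AVC
        dst[pos] = (byte) ((frameType << 4) | codecId);
        dst[pos + 1] = (byte) (isSequenceHeader ? 0 : 1);     // AVCPacketType: 0 = sequence header, 1 = NALU
        dst[pos + 2] = 0;                                     // CompositionTime (SI24), big-endian
        dst[pos + 3] = 0;
        dst[pos + 4] = 0;
        return AVC_TAG_HEADER_SIZE;
    }
}
```

This yields `0x17 0x00` for a sequence header, `0x17 0x01` for an IDR frame, and `0x27 0x01` for any other frame.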

setAacTag(byte[] dst, int pos, boolean isAACSequenceHeader)

Encodes the AAC tag body in the format FLV requires.
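Likewise, an AAC tag body begins with a 2-byte prefix: the AUDIODATA header byte (SoundFormat, SoundRate, SoundSize, SoundType) and AACPacketType. The FLV specification fixes SoundRate to 3 and SoundType to 1 for AAC, so with 16-bit samples the first byte is 0xAF. The helper below is a hypothetical sketch, not the project's `setAacTag`. When AACPacketType is 0, the payload is the AudioSpecificConfig, which an Android AAC encoder exposes as csd-0.

```java
// Hypothetical helper: sketches the FLV AAC tag body prefix, not the project's setAacTag.
final class FlvAacTag {
    static final int AAC_TAG_HEADER_SIZE = 2;

    // Writes the 2-byte prefix at dst[pos..pos+1] and returns the number of bytes written.
    static int writeAacTagHeader(byte[] dst, int pos, boolean isSequenceHeader) {
        int soundFormat = 10; // 10 = AAC
        int soundRate = 3;    // 3 = 44 kHz; the FLV spec requires 3 for AAC
        int soundSize = 1;    // 1 = 16-bit samples
        int soundType = 1;    // 1 = stereo; the FLV spec requires 1 for AAC
        dst[pos] = (byte) ((soundFormat << 4) | (soundRate << 2) | (soundSize << 1) | soundType);
        dst[pos + 1] = (byte) (isSequenceHeader ? 0 : 1); // AACPacketType: 0 = AudioSpecificConfig, 1 = raw frame
        return AAC_TAG_HEADER_SIZE;
    }
}
```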

ScreenRecorder

Obtains the encoded data and packages it in the FLV format.

prepareEncoder()

Prepares the encoder.

recordVirtualDisplay()

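Dequeues the index of an encoded output buffer, or a status value. When the status value is MediaCodec.INFO_OUTPUT_FORMAT_CHANGED, it generates and sends the AVC AVCDecoderConfigurationRecord; when a valid output buffer index is returned, it generates and sends AVC data.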

sendAvcDecoderConfigurationRecord(long timeMs, MediaFormat format)

Generates and sends the AVC AVCDecoderConfigurationRecord.

sendAvcData(long timeMs, ByteBuffer data)

Generates and sends AVC data.
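FLV carries H.264 as NAL units prefixed by their length (4 bytes here, matching lengthSizeMinusOne = 3 in the decoder configuration record), whereas MediaCodec's H.264 encoder typically emits Annex-B data separated by `00 00 00 01` or `00 00 01` start codes. Assuming `sendAvcData` has to bridge that gap, a conversion could look like the sketch below. `AnnexBToAvcc` is a hypothetical name, and the article does not show how the project actually does this. The input `ByteBuffer` is assumed to already have its position and limit set from the encoder's `BufferInfo`.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;

// Hypothetical helper: Annex-B to 4-byte length-prefixed NAL units, not the project's code.
final class AnnexBToAvcc {
    // Returns the index of the next 3-byte start code (00 00 01) at or after 'from', or -1.
    private static int findStartCode(byte[] b, int from) {
        for (int i = from; i + 2 < b.length; i++) {
            if (b[i] == 0 && b[i + 1] == 0 && b[i + 2] == 1) return i;
        }
        return -1;
    }

    // Appends the NAL unit b[start, end) prefixed with its 4-byte big-endian length.
    private static void writeNal(ByteArrayOutputStream out, byte[] b, int start, int end) {
        // Zero bytes right before a start code (e.g. the leading 00 of 00 00 00 01) belong
        // to the byte stream, not to the NAL unit, so trim them.
        while (end > start && b[end - 1] == 0) end--;
        int len = end - start;
        if (len <= 0) return;
        out.write((len >>> 24) & 0xFF);
        out.write((len >>> 16) & 0xFF);
        out.write((len >>> 8) & 0xFF);
        out.write(len & 0xFF);
        out.write(b, start, len);
    }

    // Consumes the buffer's remaining bytes and returns them as length-prefixed NAL units.
    static byte[] convert(ByteBuffer data) {
        byte[] b = new byte[data.remaining()];
        data.get(b);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        int sc = findStartCode(b, 0);
        if (sc < 0) {                 // no start code: assume the buffer holds one raw NAL unit
            writeNal(out, b, 0, b.length);
            return out.toByteArray();
        }
        while (sc >= 0) {
            int nalStart = sc + 3;
            int next = findStartCode(b, nalStart);
            writeNal(out, b, nalStart, next < 0 ? b.length : next);
            sc = next;
        }
        return out.toByteArray();
    }
}
```

The result would then follow the 5-byte AVC tag body prefix with AVCPacketType = 1.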

FlvMetaData

Encodes onMetaData in the format FLV requires.
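The onMetaData script tag body is AMF0-encoded: a String `"onMetaData"` followed by an ECMA array of properties, terminated by the object-end marker `00 00 09`. The sketch below handles only numeric properties (AMF0 Number, an 8-byte big-endian double). Property names listed in the FLV specification include `width`, `height`, `framerate`, and `videocodecid` (7 for AVC); which properties the project's `FlvMetaData` actually writes is not shown in the article, so `OnMetaData` is a hypothetical, illustrative encoder.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Map;

// Hypothetical helper: AMF0 onMetaData encoder for numeric properties only.
final class OnMetaData {
    private static void writeU16(ByteArrayOutputStream out, int v) {
        out.write((v >>> 8) & 0xFF);
        out.write(v & 0xFF);
    }

    // AMF0 short string body: 16-bit big-endian length followed by UTF-8 bytes.
    private static void writeShortString(ByteArrayOutputStream out, String s) {
        byte[] b = s.getBytes(StandardCharsets.UTF_8);
        writeU16(out, b.length);
        out.write(b, 0, b.length);
    }

    static byte[] encode(Map<String, Double> props) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(0x02);                                  // AMF0 String marker
        writeShortString(out, "onMetaData");
        out.write(0x08);                                  // AMF0 ECMA array marker
        out.write(ByteBuffer.allocate(4).putInt(props.size()).array(), 0, 4); // element count
        for (Map.Entry<String, Double> e : props.entrySet()) {
            writeShortString(out, e.getKey());            // property name (no type marker)
            out.write(0x00);                              // AMF0 Number marker
            out.write(ByteBuffer.allocate(8).putDouble(e.getValue()).array(), 0, 8); // big-endian double
        }
        out.write(0x00);                                  // object end: empty name...
        out.write(0x00);
        out.write(0x09);                                  // ...followed by the object-end marker
        return out.toByteArray();
    }
}
```

A `LinkedHashMap` keeps the properties in insertion order, which makes the output deterministic.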

screenrecorderrtmp

Interacts with the librtmp C library to read and write the stream.

RtmpStreamingSender

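Maintains a send queue. Once connected to the server, it continuously sends data to the server; if data is sent more slowly than it is added, some frames are skipped. Each time the screen is captured, it tries to add the data to the queue; if the queue is full, the data is discarded instead.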
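The queue behavior described above can be sketched with `ArrayBlockingQueue`, whose `offer` returns `false` instead of blocking when the queue is full; that gives the capture side its drop-if-full behavior. The article does not say which frames the sender skips, so the sketch swaps in a common policy: once the backlog exceeds a threshold, discard non-key frames until the next key frame, because dropping arbitrary inter frames would corrupt decoding on the receiver. `BoundedSendQueue`, `Frame`, and the threshold are hypothetical, and the sketch treats every entry as a video frame.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch of a bounded send queue with frame skipping; not the project's RtmpStreamingSender.
final class BoundedSendQueue {
    // Hypothetical frame holder; the project's real queue element type is not shown in the article.
    static final class Frame {
        final byte[] data;
        final boolean keyFrame;
        Frame(byte[] data, boolean keyFrame) { this.data = data; this.keyFrame = keyFrame; }
    }

    private final BlockingQueue<Frame> queue;
    private final int skipThreshold;                  // backlog size that triggers skipping
    private final AtomicLong dropped = new AtomicLong();
    private boolean waitingForKeyFrame;               // touched only by the single sender thread

    BoundedSendQueue(int capacity, int skipThreshold) {
        this.queue = new ArrayBlockingQueue<>(capacity);
        this.skipThreshold = skipThreshold;
    }

    // Capture side: never blocks; the frame is discarded when the queue is full.
    boolean add(Frame f) {
        boolean accepted = queue.offer(f);
        if (!accepted) dropped.incrementAndGet();
        return accepted;
    }

    // Sender side: if the backlog is too large, skip ahead to the next key frame so the
    // receiver can resume decoding cleanly. Returns null when nothing sendable is queued.
    Frame nextToSend() {
        if (queue.size() > skipThreshold) waitingForKeyFrame = true;
        Frame f;
        while ((f = queue.poll()) != null) {
            if (f.keyFrame) {
                waitingForKeyFrame = false;
                return f;
            }
            if (!waitingForKeyFrame) return f;
            dropped.incrementAndGet();                // non-key frame skipped while catching up
        }
        return null;
    }

    long droppedCount() { return dropped.get(); }
}
```

In a real sender, audio frames would need to be exempt from skipping, since they do not depend on video key frames.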

ScreenRecordActivity

Controls screen capture and streaming.

onActivityResult(int requestCode, int resultCode, Intent data)

Starts RTMP streaming to the address entered in the input field.

startScreenCapture()

Starts screen capture.

stopScreenRecord()

Stops screen capture.

startScreenRecordService()

Starts listening for streaming progress.

stopScreenRecordService()

Stops listening for streaming progress.

https://weichao.io/ca5a600436ff/