Running the FFmpeg Examples on Android

Reference

Project source code

Code excerpts

- CMakeLists.txt
- native-lib.cpp
- MainActivity.kt

Environment

FFmpeg 4.2

To build the .so libraries, see "NDK r21d + FFmpeg 4.2: writing the build script & compiling the libraries needed for Android".

Android Studio

Android Studio Bumblebee | 2021.1.1 Patch 2

Build # AI-211.7628.21.2111.8193401, built on February 17, 2022

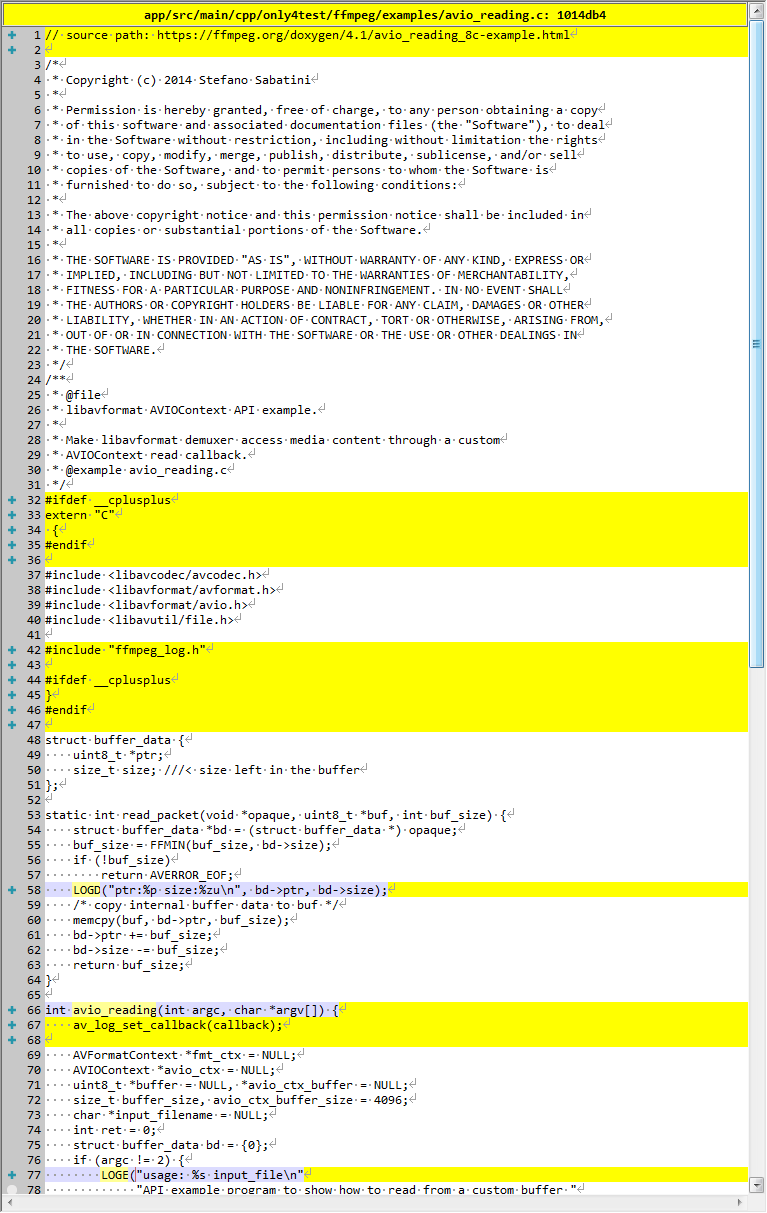

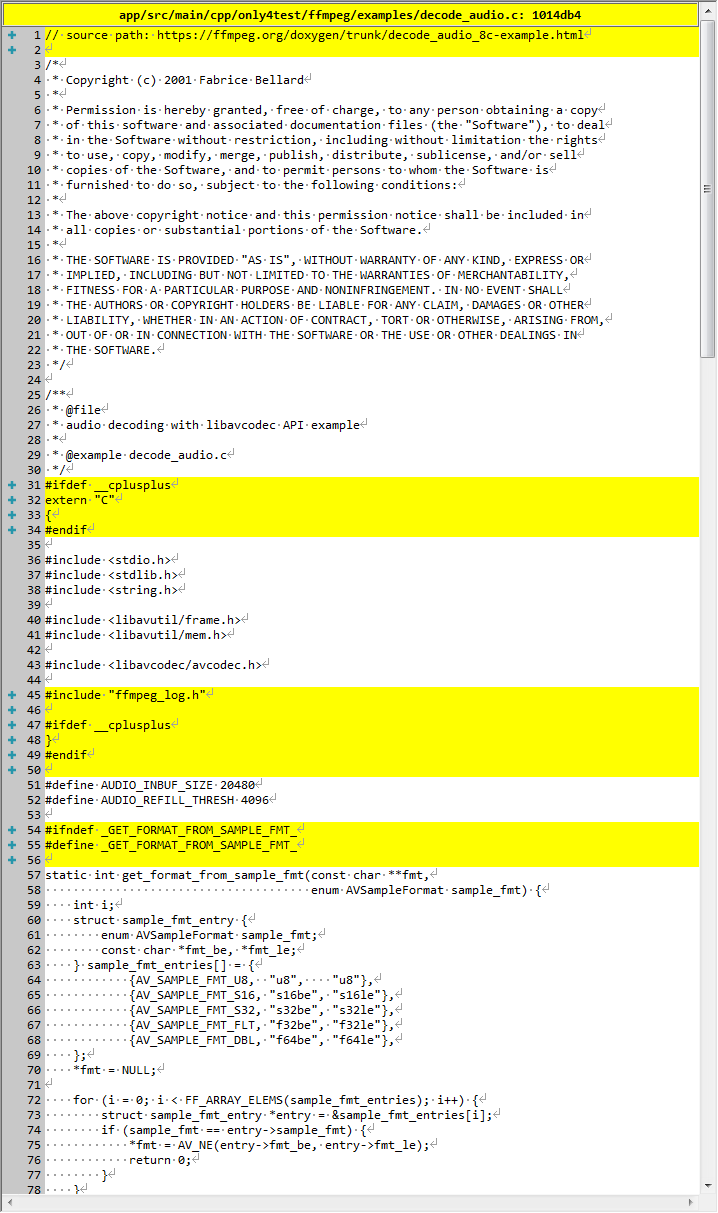

avio_reading

Description

libavformat AVIOContext API example.

Make the libavformat demuxer access media content through a custom AVIOContext read callback.

API example program to show how to read from a custom buffer accessed through AVIOContext.
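The core of a custom read callback can be sketched without the FFmpeg headers. In the sketch below, `buffer_data` and `read_packet` are illustrative names, and `SKETCH_EOF` is a hypothetical stand-in for FFmpeg's `AVERROR_EOF`. In a real program a callback of this shape would be registered through `avio_alloc_context`.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for AVERROR_EOF so the sketch builds without FFmpeg. */
#define SKETCH_EOF (-1)

/* Tracks the unread part of an in-memory media buffer. */
struct buffer_data {
    const uint8_t *ptr; /* next unread byte */
    size_t size;        /* bytes left to read */
};

/* Copies up to buf_size bytes into buf and advances the cursor.
 * Returns the number of bytes copied, or SKETCH_EOF when nothing is left.
 * Assumes buf_size is positive. */
static int read_packet(void *opaque, uint8_t *buf, int buf_size)
{
    struct buffer_data *bd = (struct buffer_data *)opaque;
    size_t n = (size_t)buf_size < bd->size ? (size_t)buf_size : bd->size;

    if (n == 0)
        return SKETCH_EOF;
    memcpy(buf, bd->ptr, n);
    bd->ptr += n;
    bd->size -= n;
    return (int)n;
}
```

The demuxer calls the callback repeatedly with its own scratch buffer, so the only state the application has to keep is the cursor and the remaining byte count.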

Demo

Source code changes

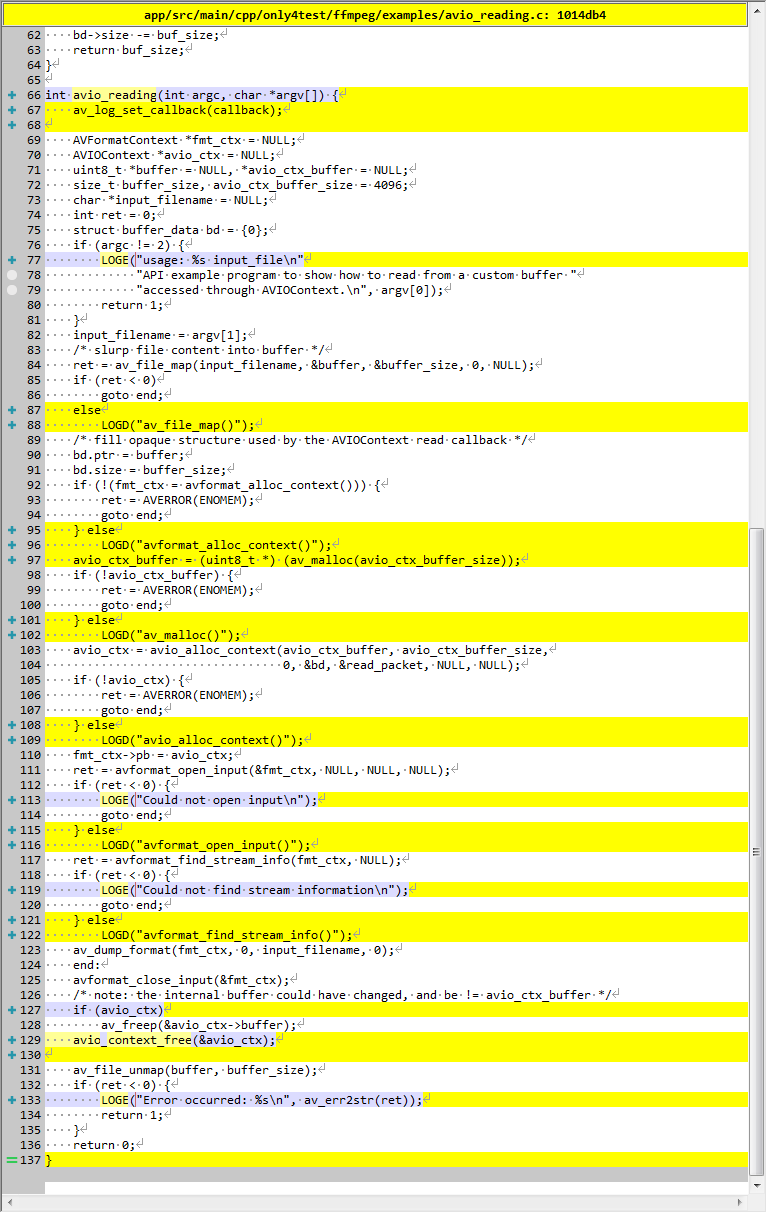

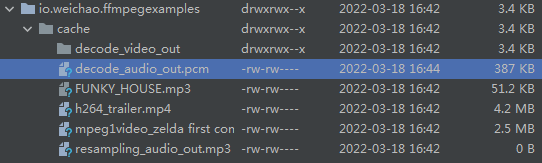

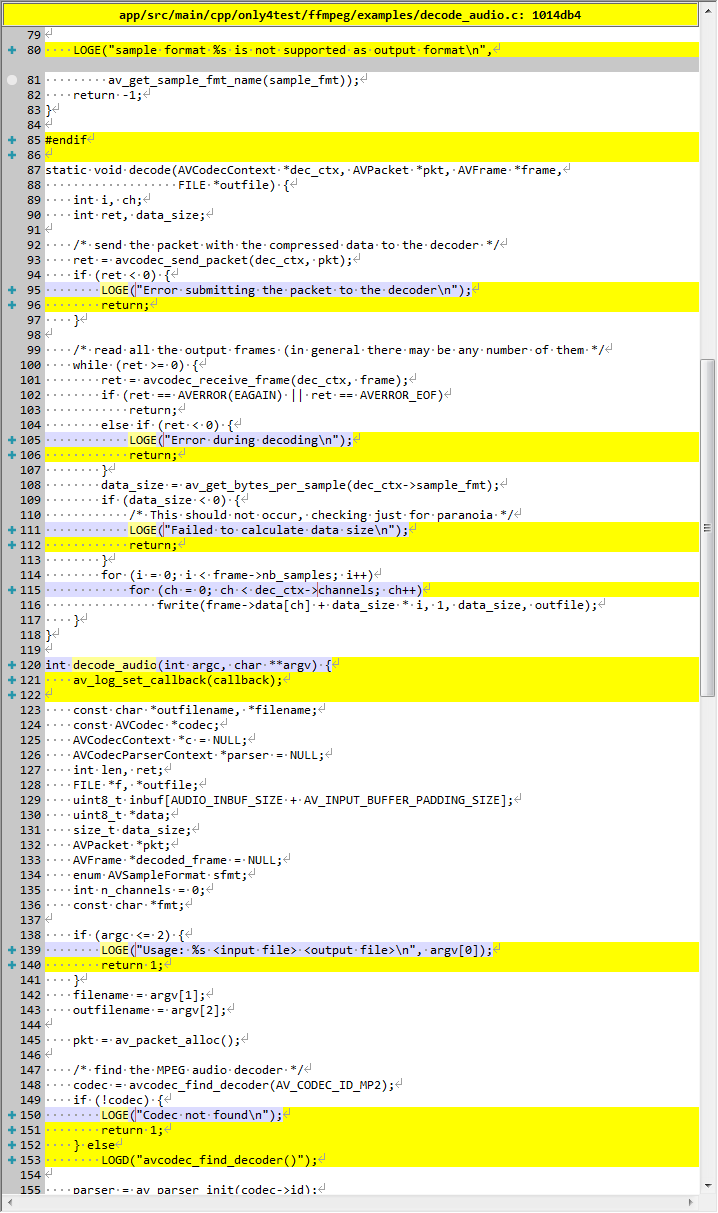

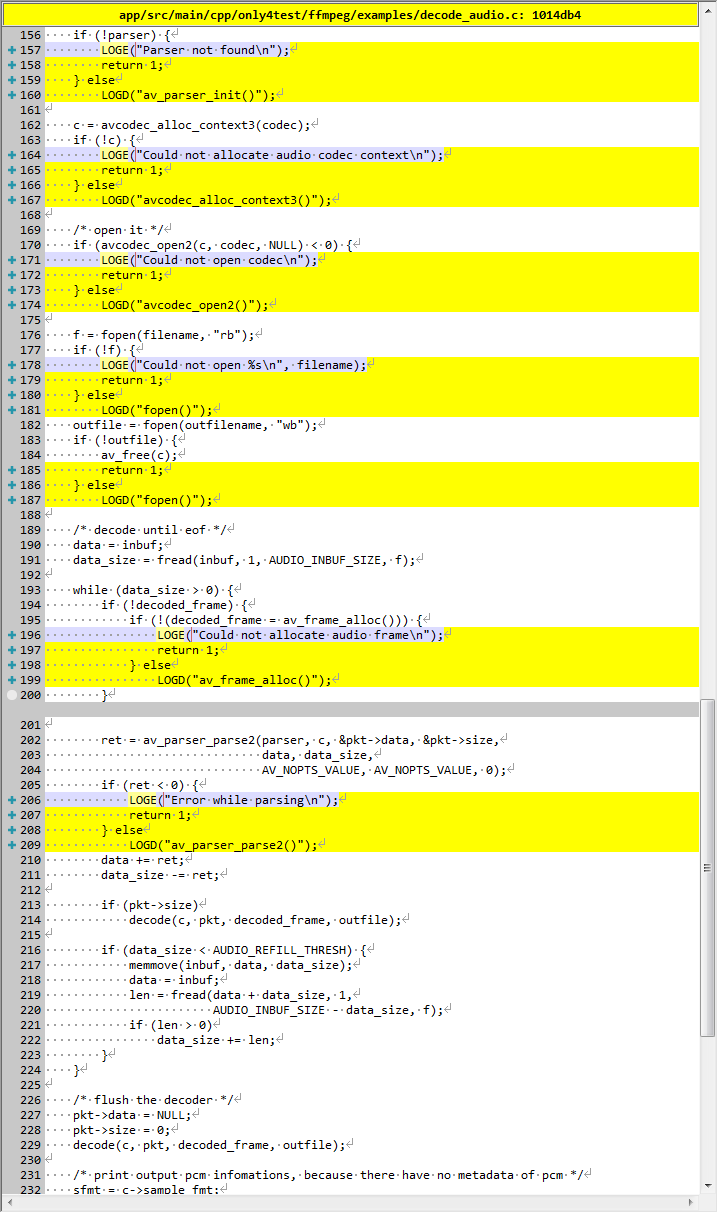

decode_audio

Description

Audio decoding with libavcodec API example.

Demo

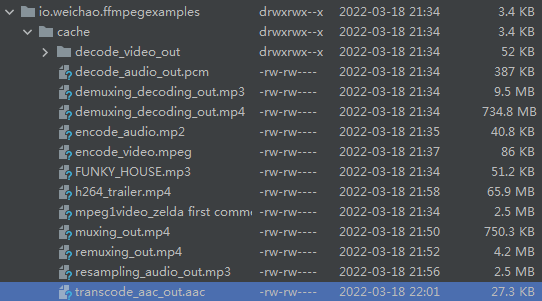

The output file is generated at the specified location:

Source code changes

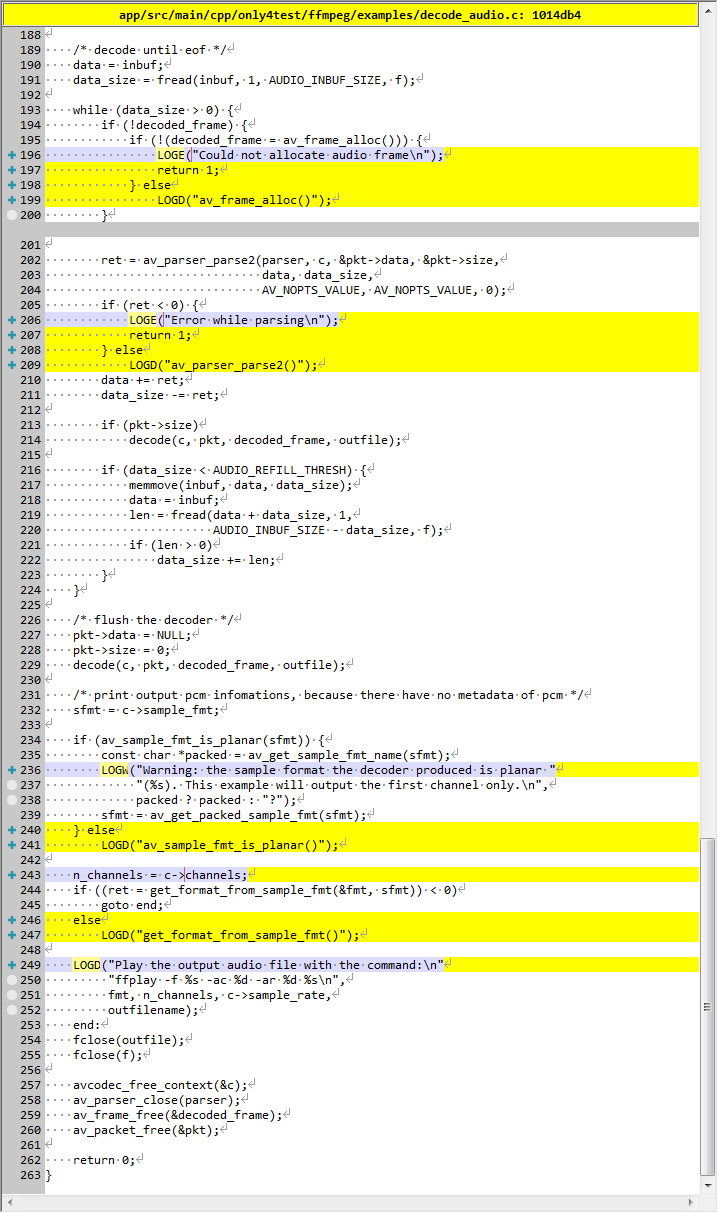

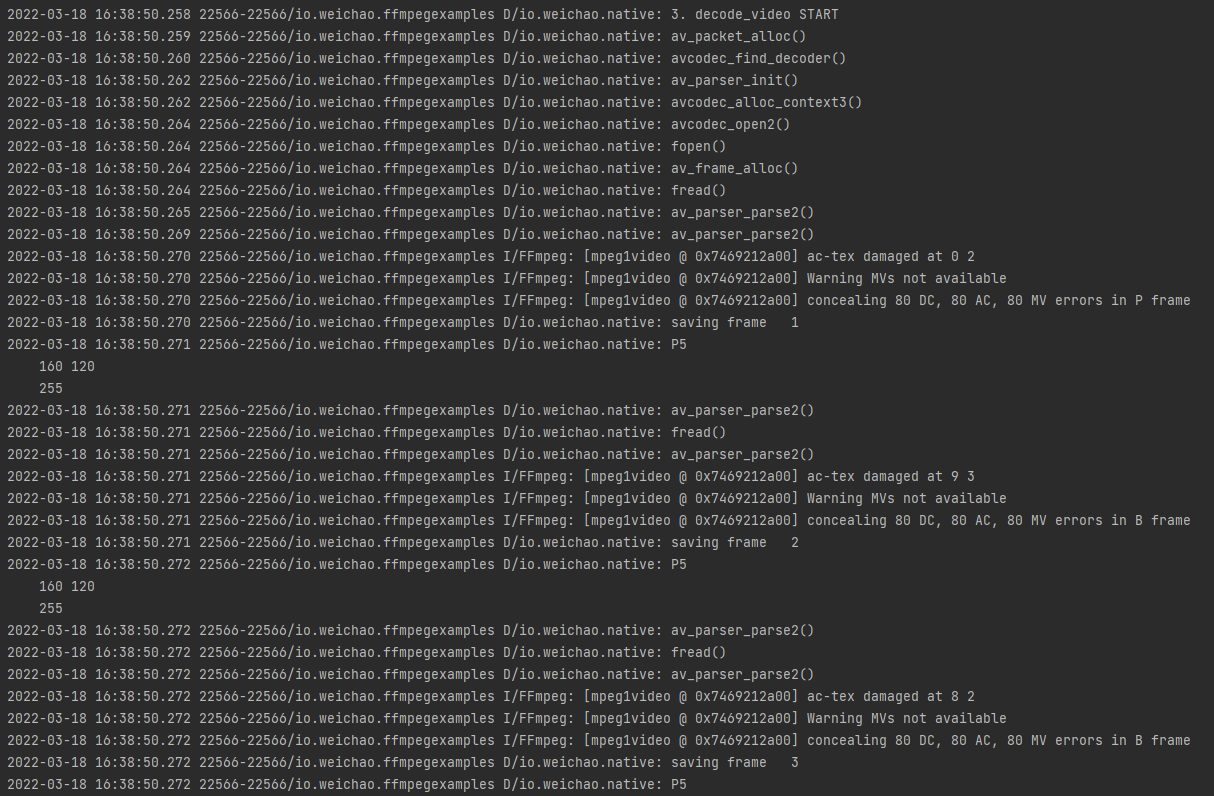

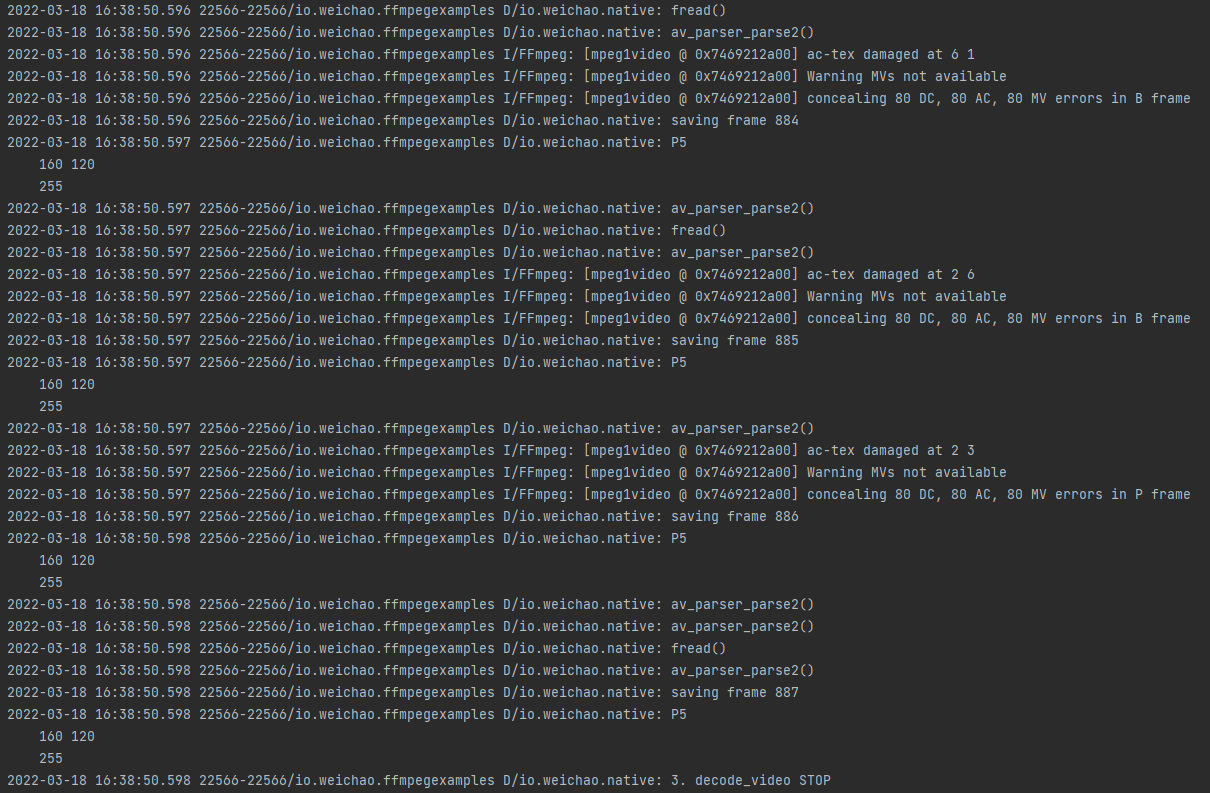

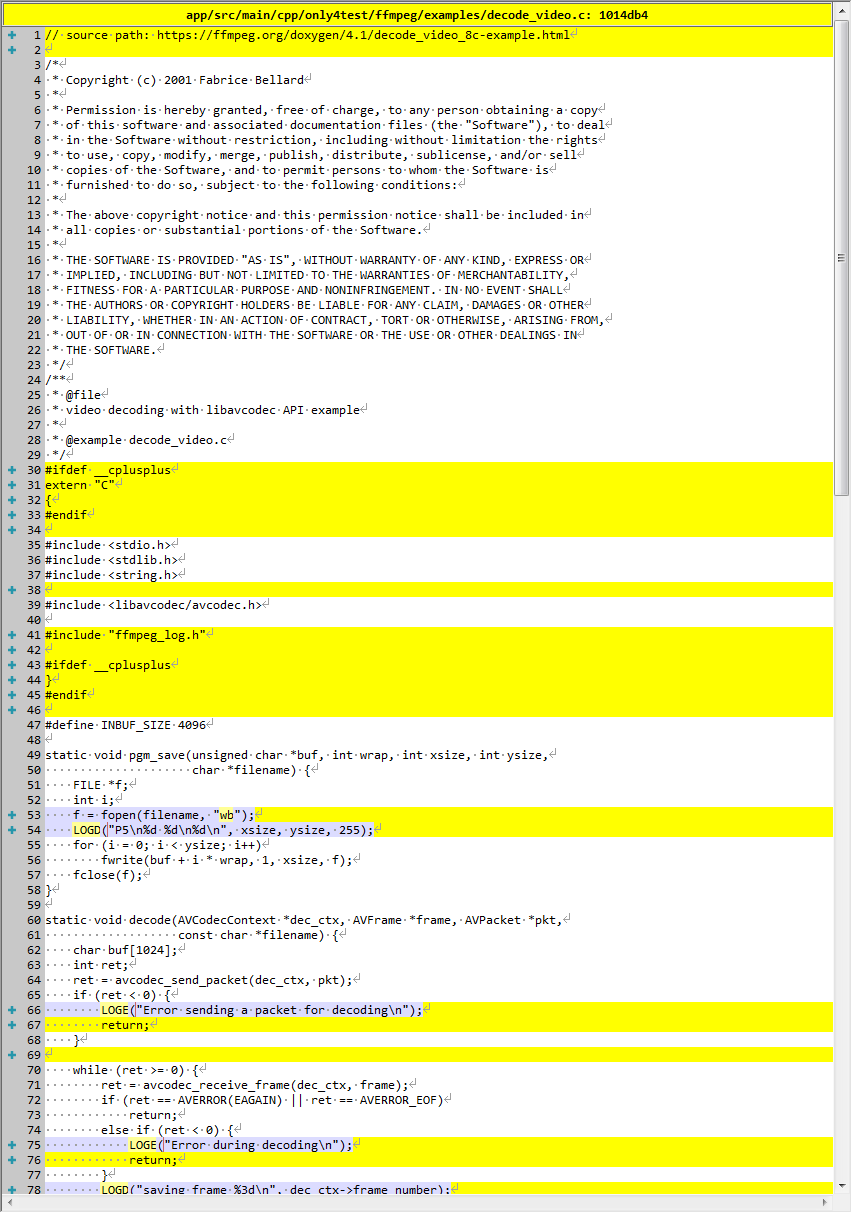

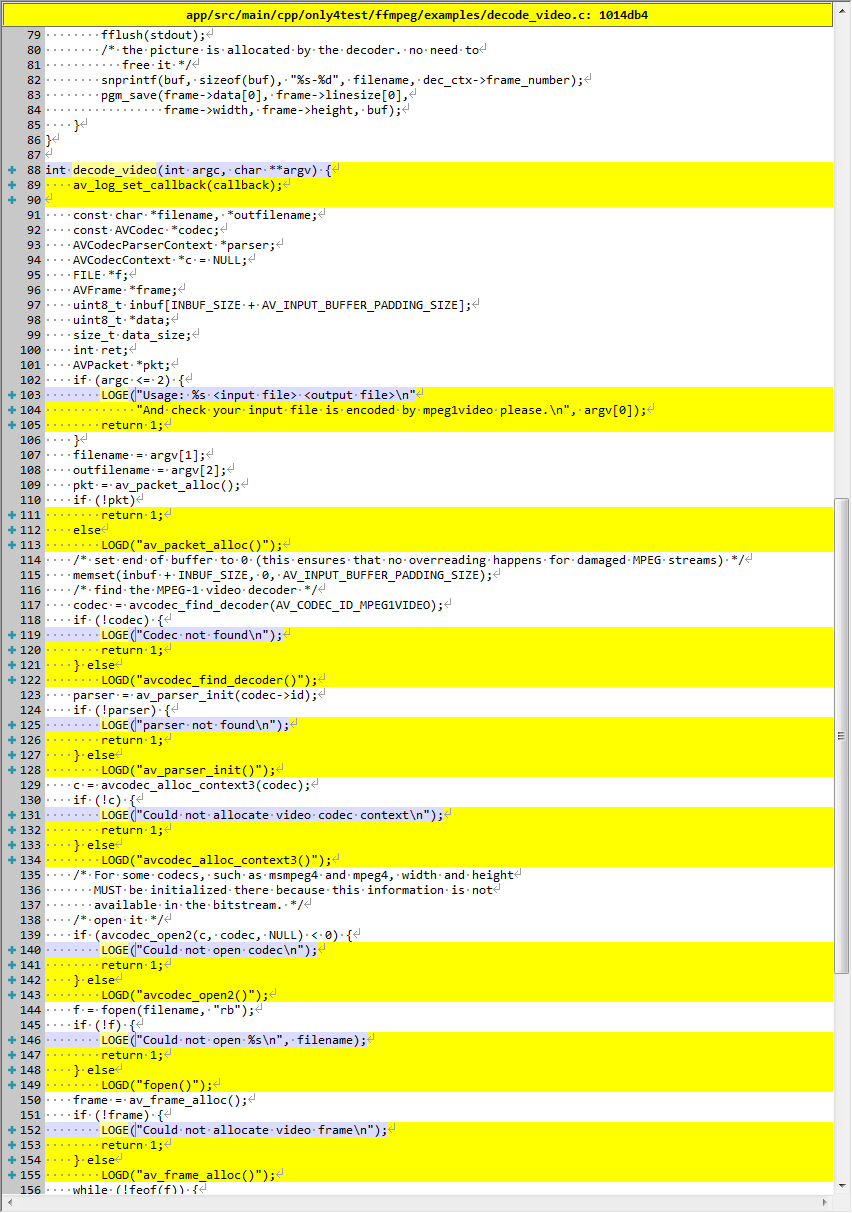

decode_video

Description

Video decoding with libavcodec API example.

Make sure your input file is encoded with mpeg1video.
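As far as I can tell, the upstream decode_video example saves each decoded frame as a separate grayscale PGM image, which is why a run produces many output files. A minimal sketch of such a writer follows. The name `pgm_write` and its `FILE *` parameter are choices made for this sketch, not the upstream signature.

```c
#include <stddef.h>
#include <stdio.h>

/* Writes one 8-bit grayscale plane as a binary PGM (P5) image.
 * wrap is the stride in bytes between rows and may be larger than xsize.
 * Returns 0 on success, -1 on a write error. */
static int pgm_write(FILE *f, const unsigned char *buf, int wrap, int xsize, int ysize)
{
    if (fprintf(f, "P5\n%d %d\n%d\n", xsize, ysize, 255) < 0)
        return -1;
    for (int i = 0; i < ysize; i++) {
        /* Copy only the visible pixels of each row and skip the padding. */
        if (fwrite(buf + (size_t)i * wrap, 1, (size_t)xsize, f) != (size_t)xsize)
            return -1;
    }
    return 0;
}
```

Passing the stride separately from the width matters because decoded planes are usually padded, so rows are not contiguous in memory.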

Demo

(intermediate log output omitted)

The output files are generated at the specified location:

(more files omitted)

Source code changes

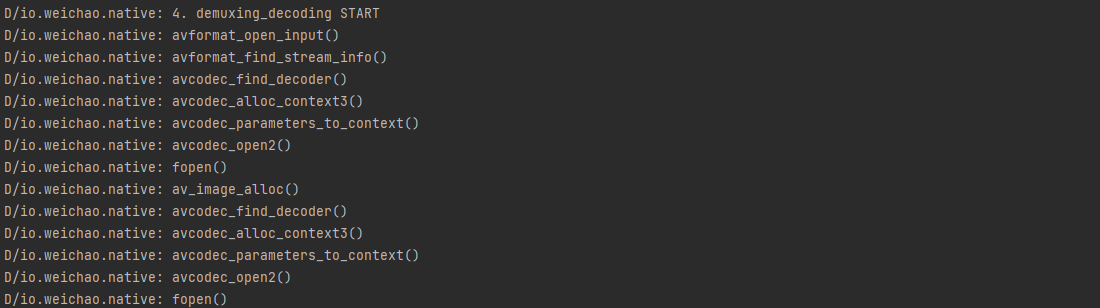

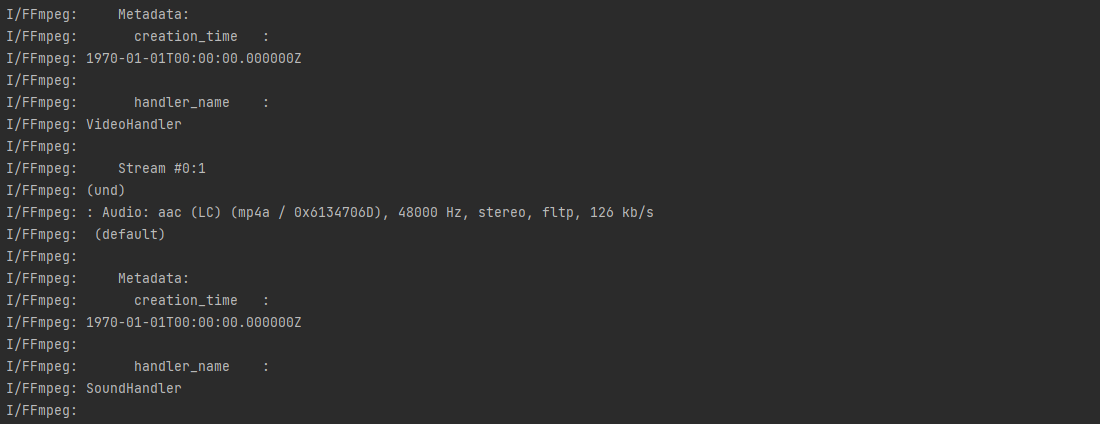

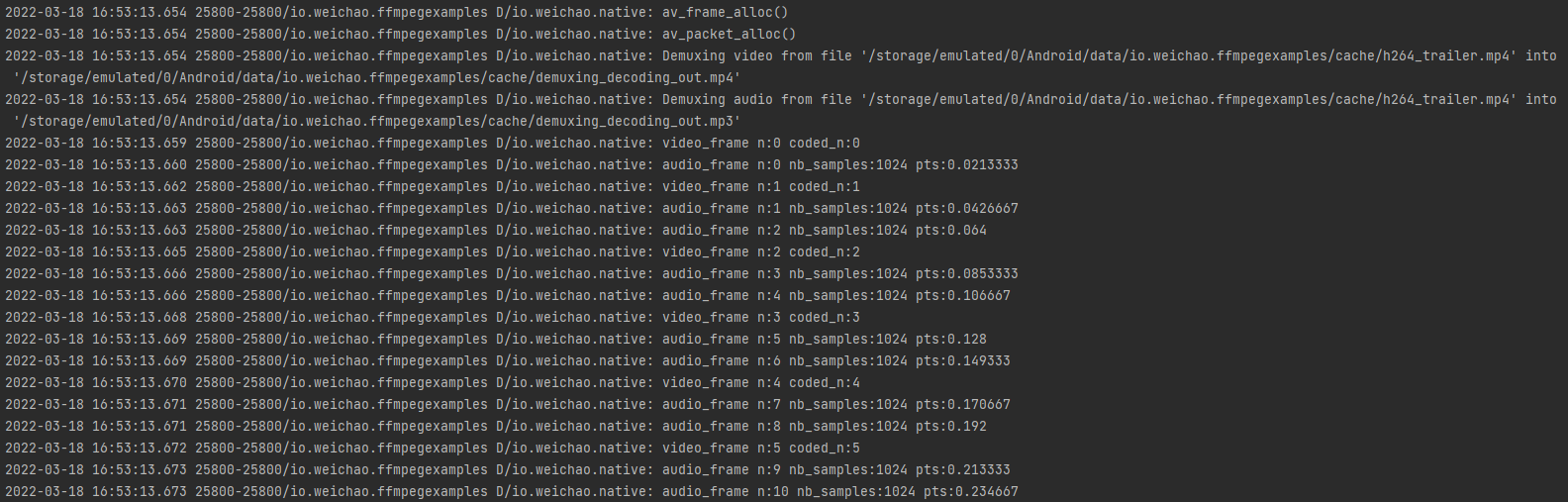

demuxing_decoding

Description

Demuxing and decoding example.

Show how to use the libavformat and libavcodec API to demux and decode audio and video data.

API example program to show how to read frames from an input file.

This program reads frames from a file, decodes them, and writes decoded video frames to a rawvideo file named video_output_file, and decoded audio frames to a rawaudio file named audio_output_file.

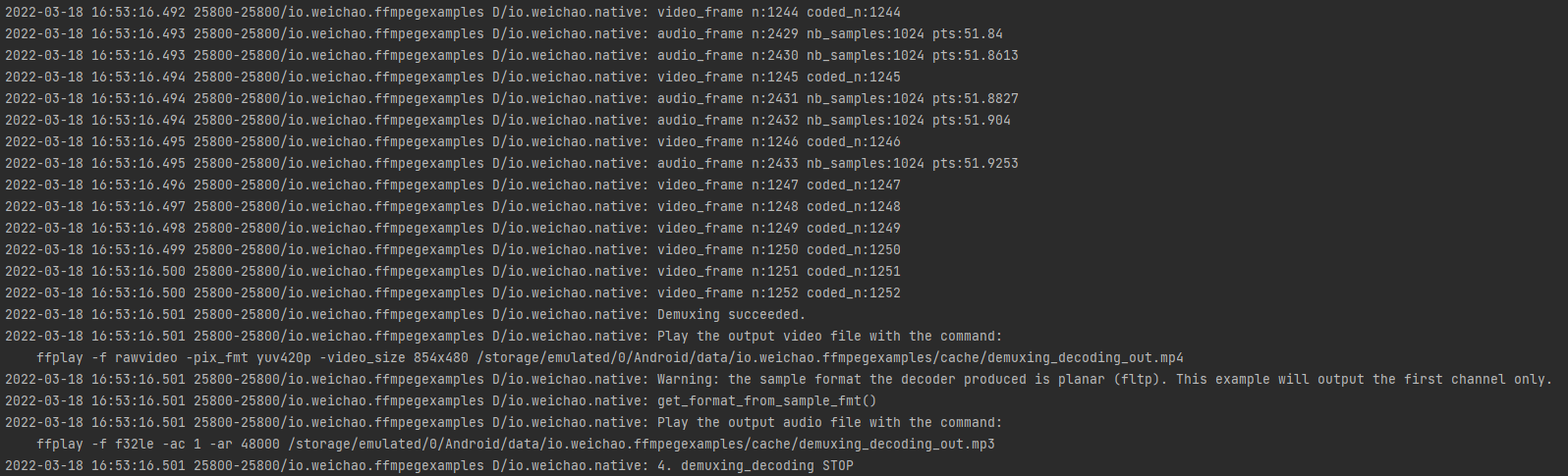

Demo

(intermediate log output omitted)

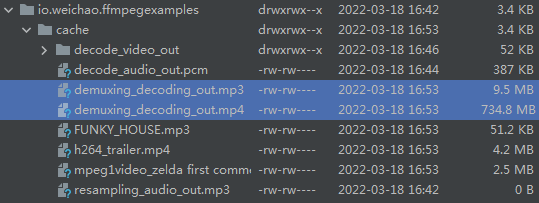

The output files are generated at the specified location:

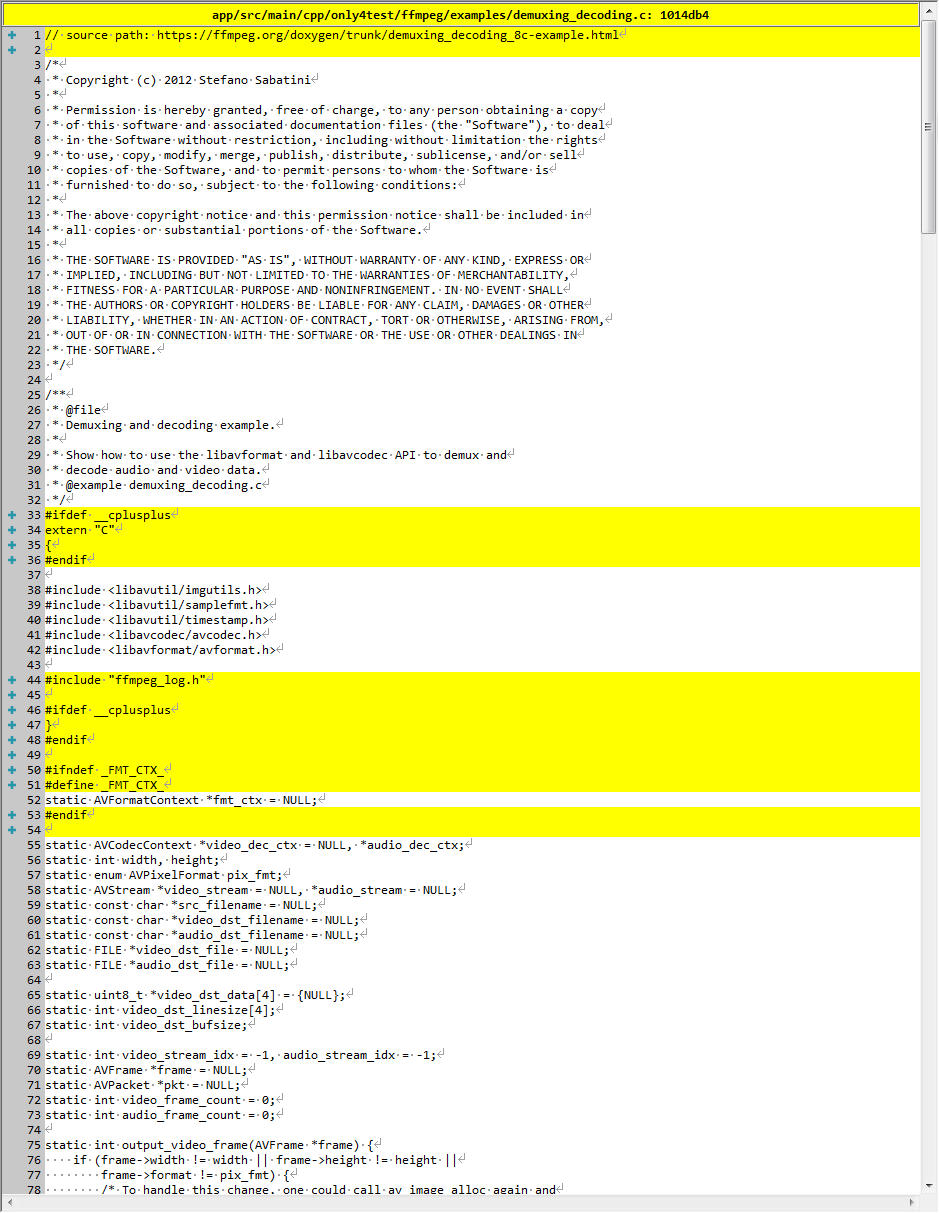

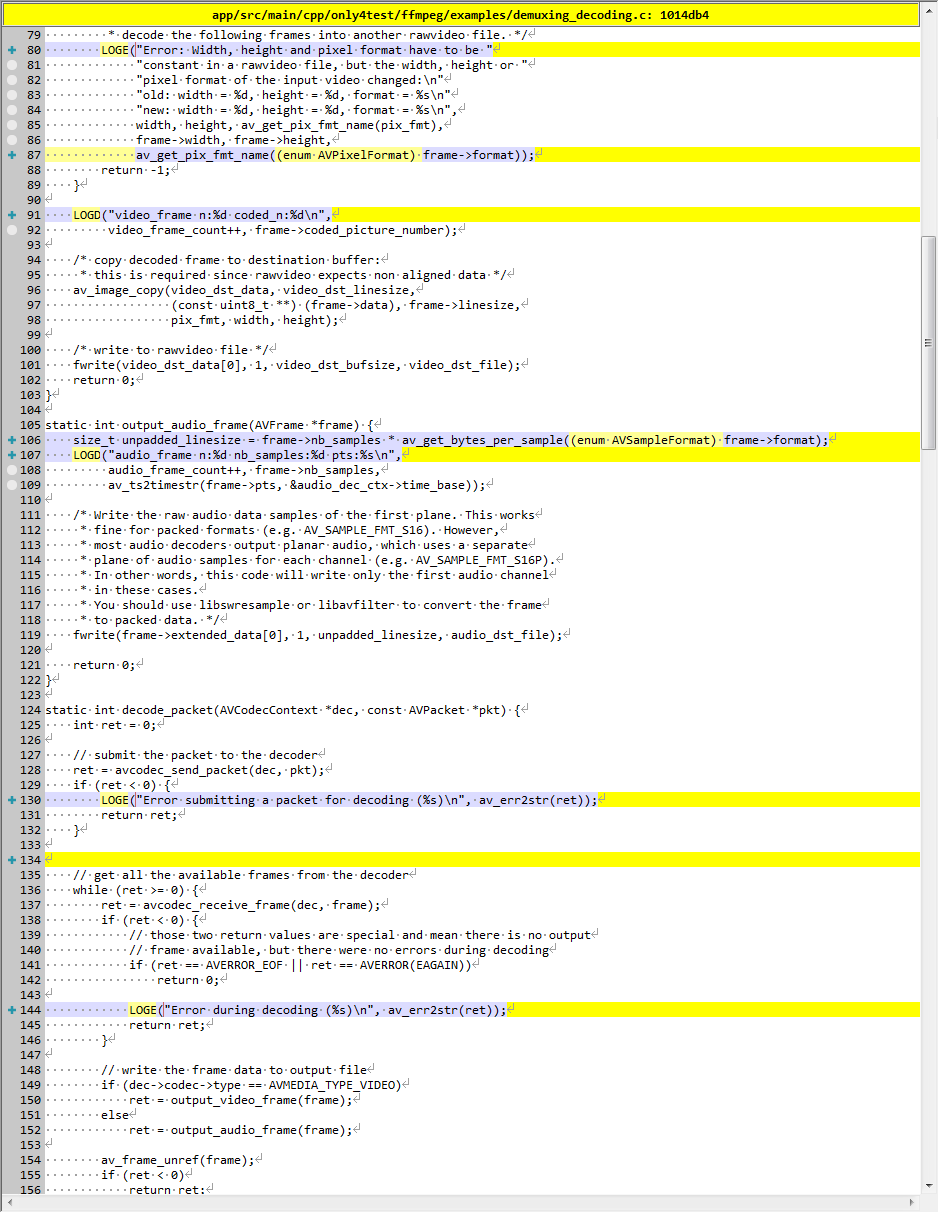

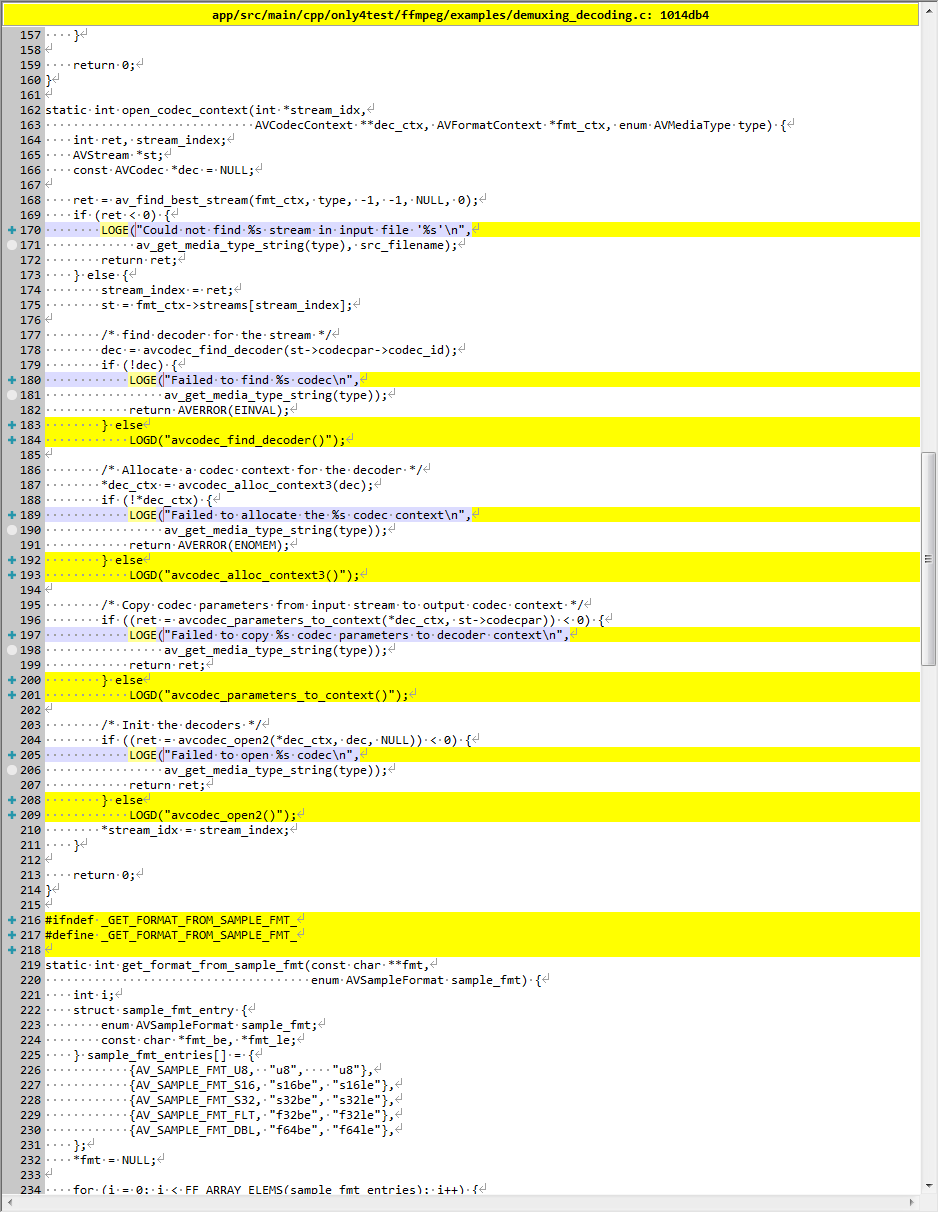

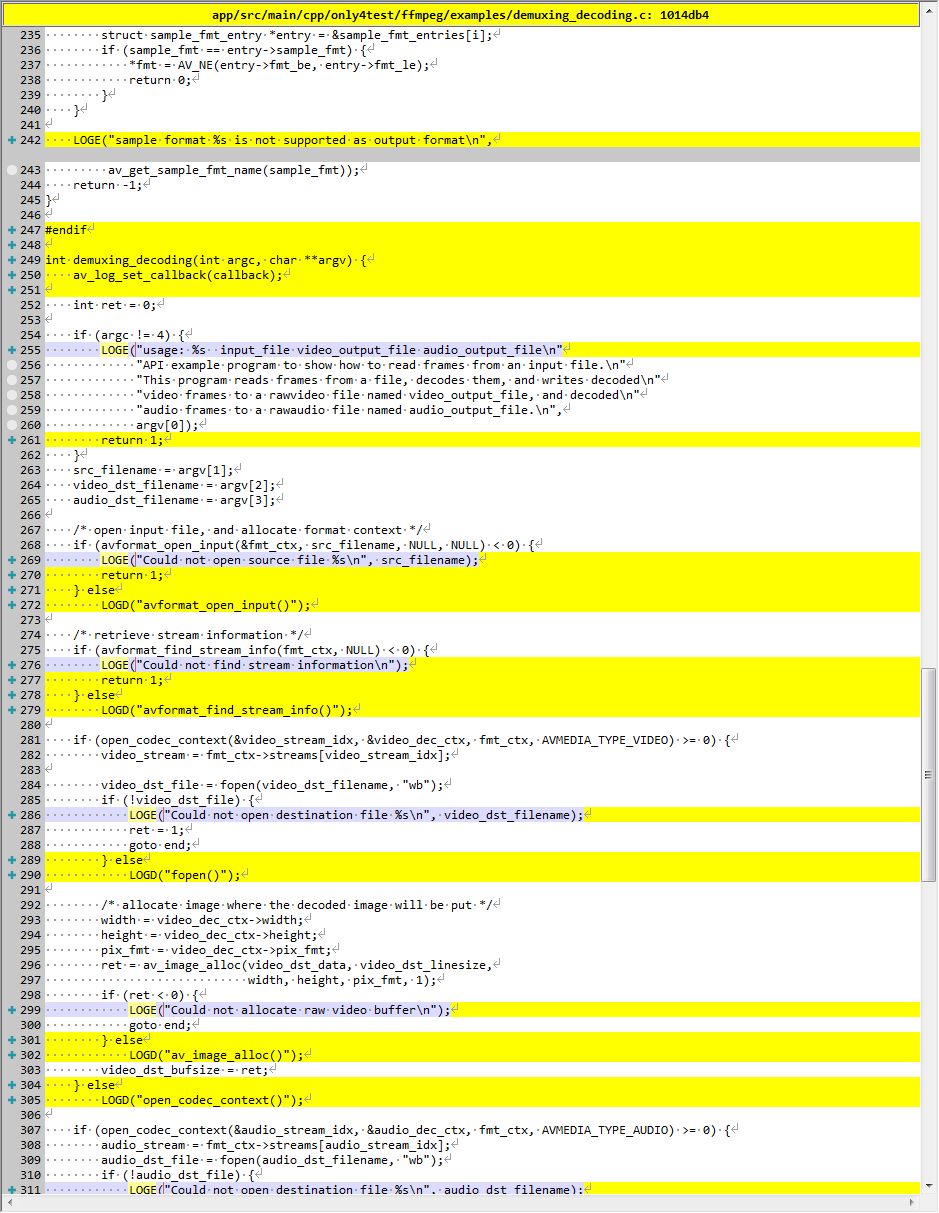

Source code changes

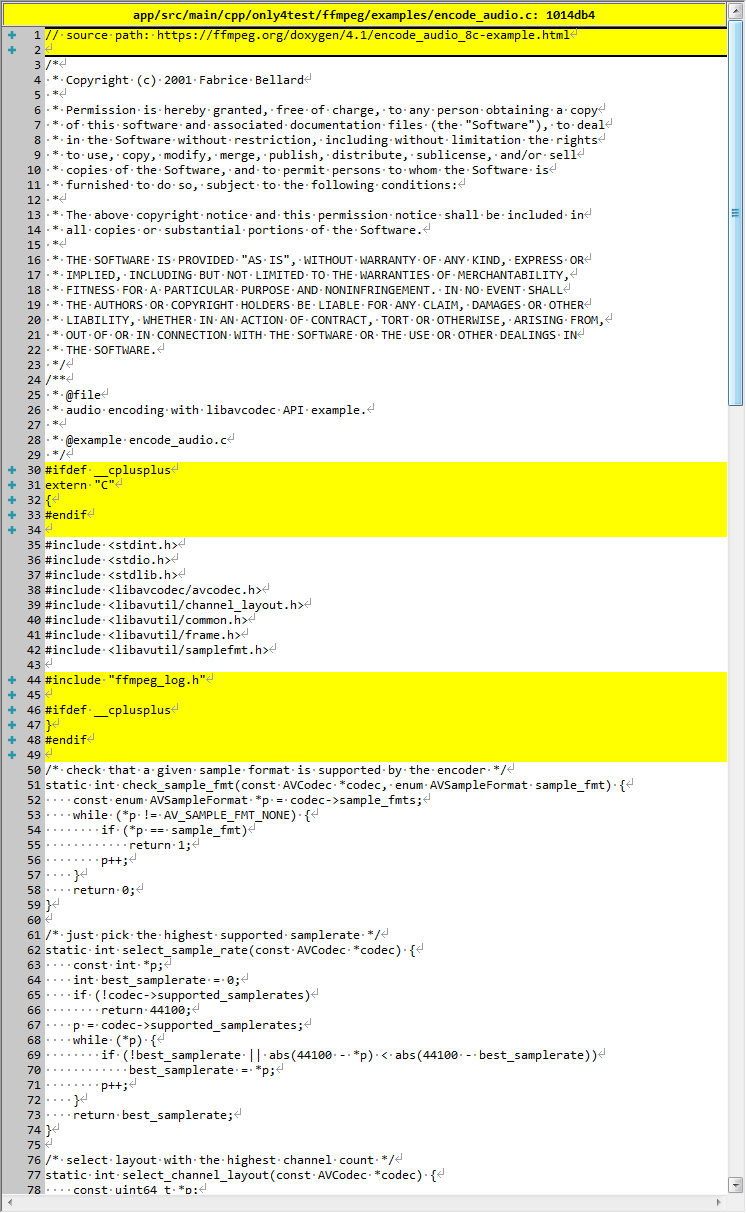

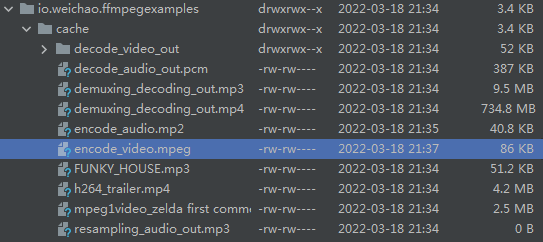

encode_audio

Description

Audio encoding with libavcodec API example.
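One step an encoder setup typically needs before opening the codec is choosing a sample rate the codec supports. The sketch below picks the supported rate closest to 44100 Hz from a zero-terminated list, which is how I recall the upstream example doing it. The function takes a plain `const int *` instead of an `AVCodec`, so it is an approximation and not the upstream code.

```c
#include <stdlib.h>

/* Returns the rate closest to 44100 Hz from a zero-terminated list.
 * A NULL list means "no restriction", so 44100 is returned directly.
 * An empty list (just the terminator) yields 0. */
static int select_sample_rate(const int *supported)
{
    int best = 0;

    if (!supported)
        return 44100;
    for (const int *p = supported; *p; p++) {
        if (!best || abs(44100 - *p) < abs(44100 - best))
            best = *p;
    }
    return best;
}
```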

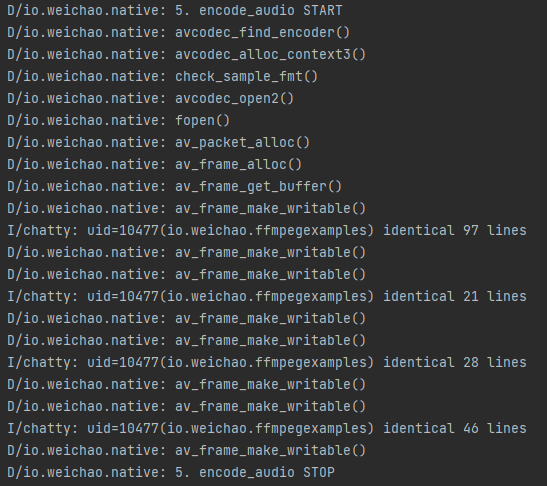

Demo

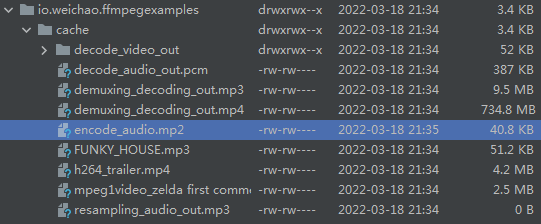

The output file is generated at the specified location:

Source code changes

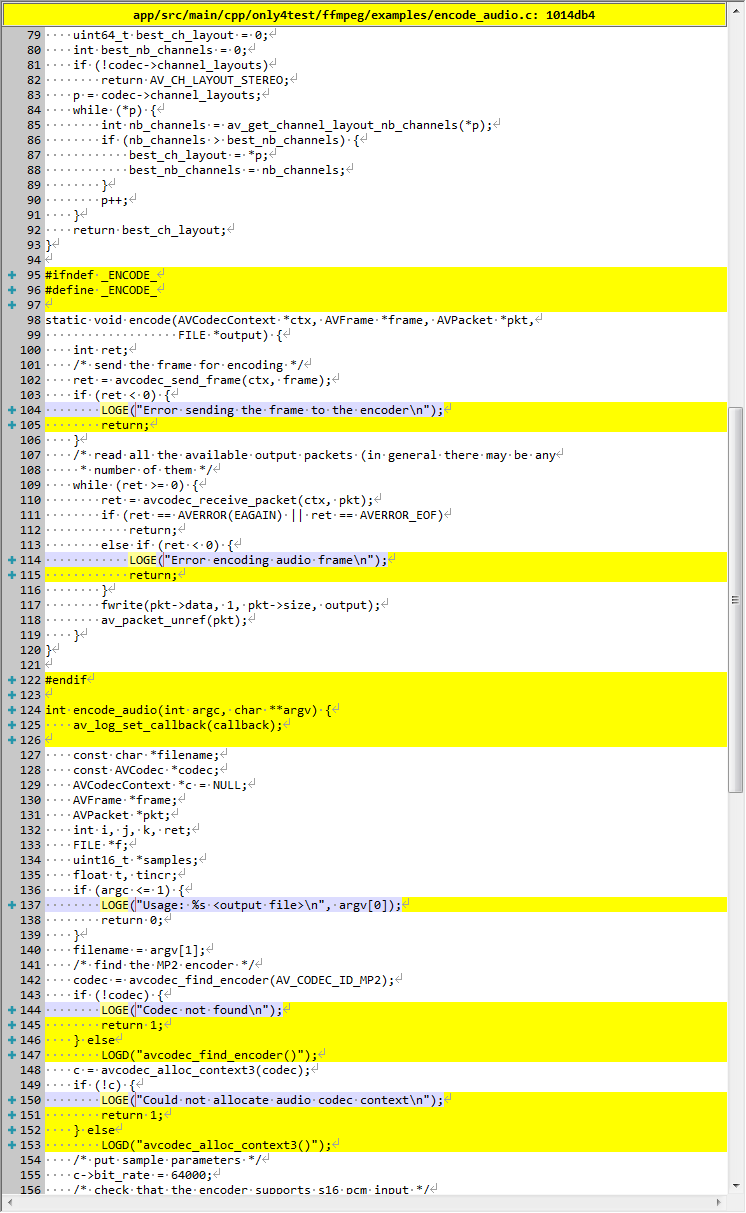

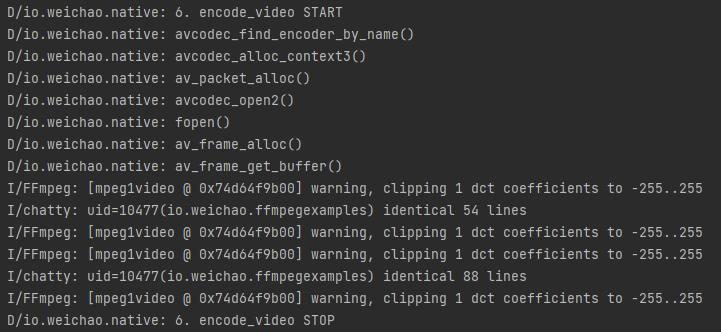

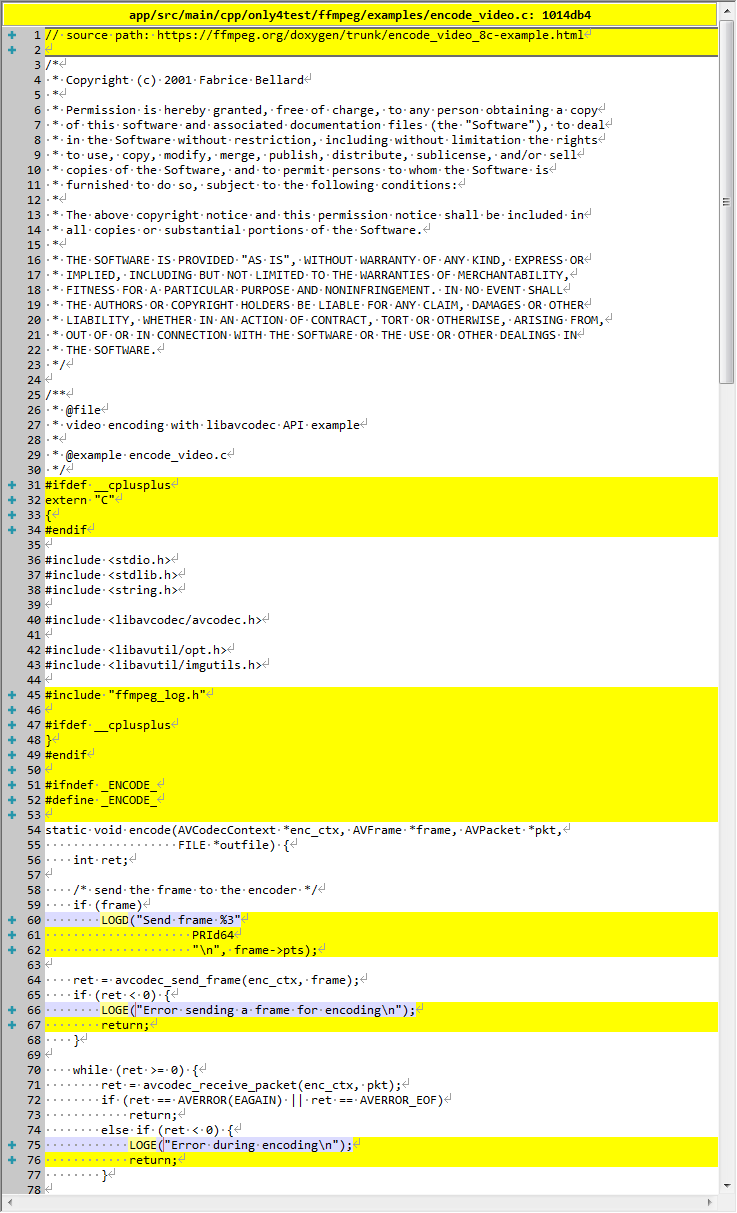

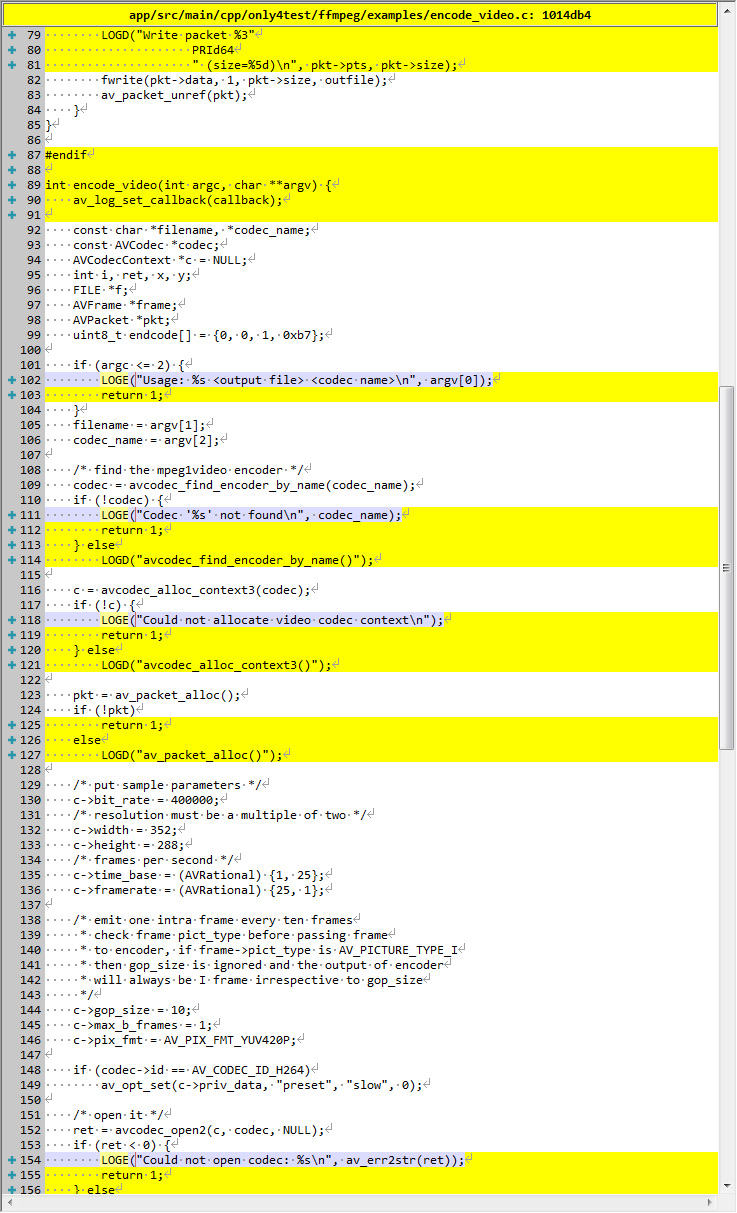

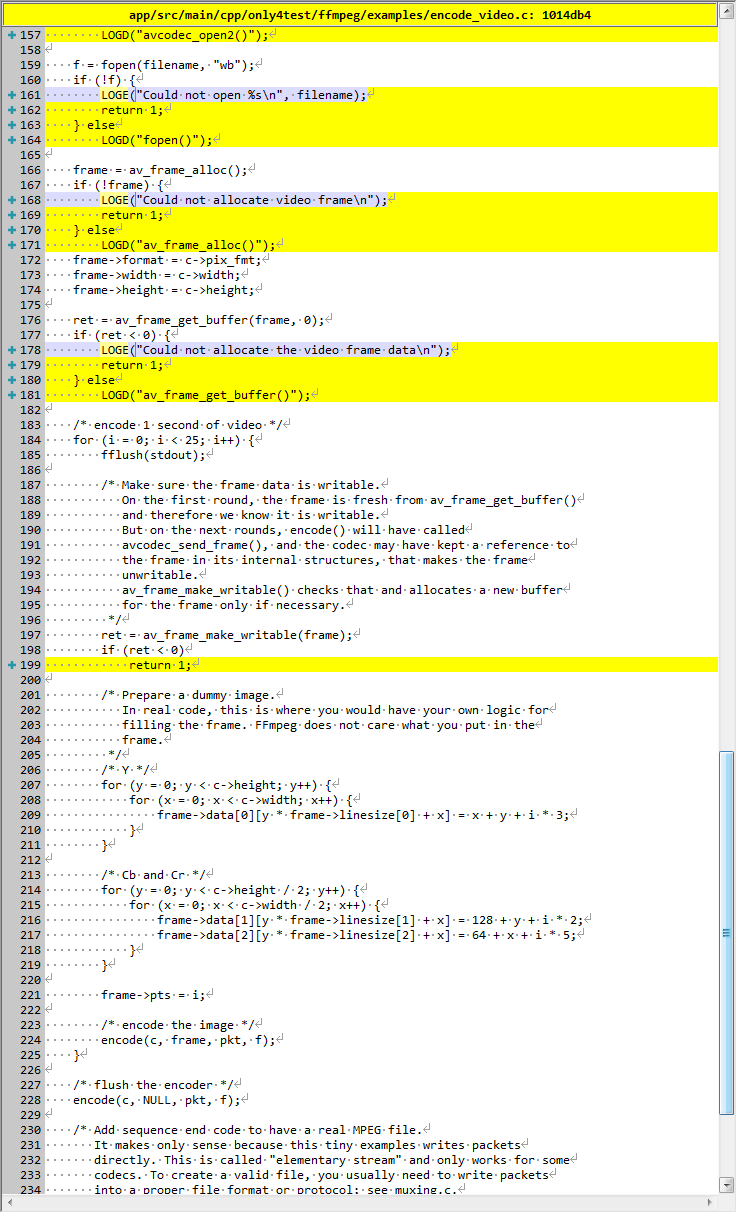

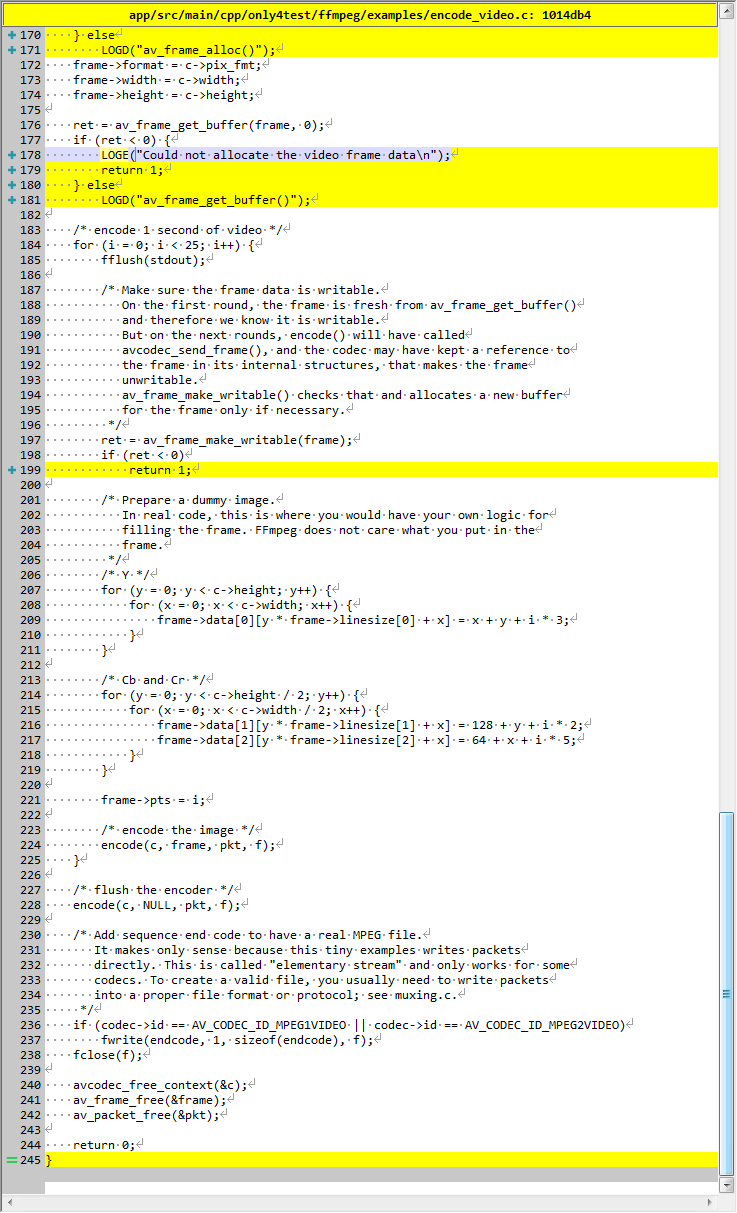

encode_video

Description

Video encoding with libavcodec API example.

Demo

The output file is generated at the specified location:

Source code changes

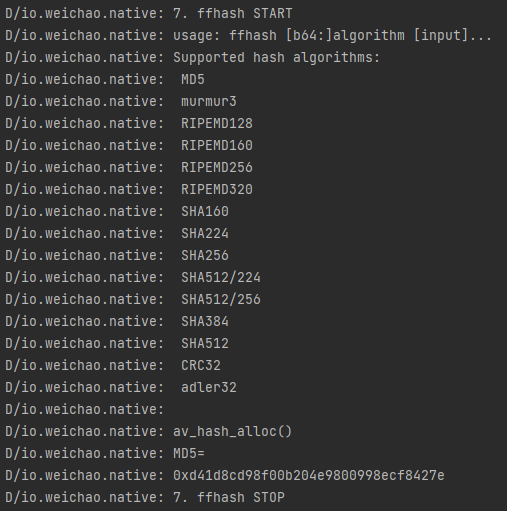

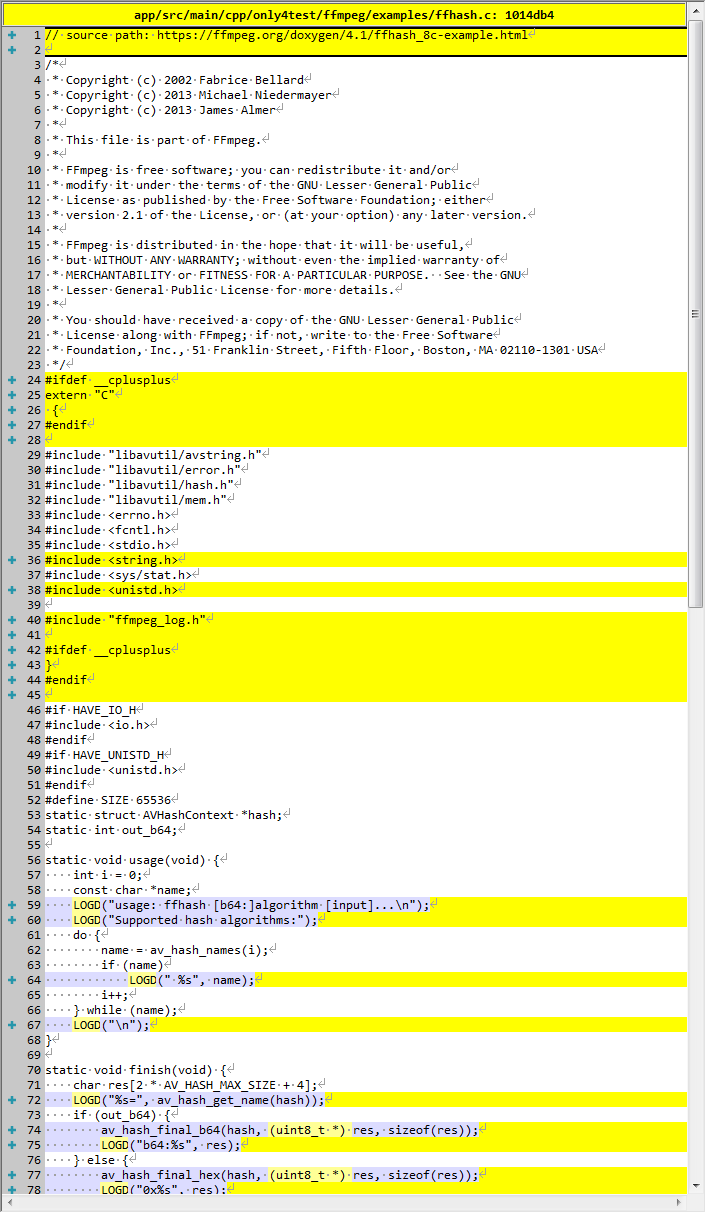

ffhash

Description

This example is a simple command line application that takes one or more arguments. It demonstrates a typical use of the hashing API with allocation, initialization, updating, and finalizing.
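The four phases named above (allocate, initialize, update, finalize) can be illustrated with a toy context. This sketch deliberately swaps in the 32-bit FNV-1a hash because it fits in a few lines; every `toy_hash_*` name is hypothetical and none of them belongs to FFmpeg's hashing API.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Toy hash context holding the running FNV-1a state. */
struct toy_hash {
    uint32_t state;
};

/* Allocation: returns NULL on failure. The caller releases it with free(). */
static struct toy_hash *toy_hash_alloc(void)
{
    return malloc(sizeof(struct toy_hash));
}

/* Initialization: resets the state so the context can be reused. */
static void toy_hash_init(struct toy_hash *h)
{
    h->state = 2166136261u; /* FNV-1a 32-bit offset basis */
}

/* Updating: may be called any number of times with consecutive chunks. */
static void toy_hash_update(struct toy_hash *h, const uint8_t *data, size_t len)
{
    for (size_t i = 0; i < len; i++) {
        h->state ^= data[i];
        h->state *= 16777619u; /* FNV-1a 32-bit prime */
    }
}

/* Finalizing: returns the digest of everything fed in since the last init. */
static uint32_t toy_hash_final(const struct toy_hash *h)
{
    return h->state;
}
```

Splitting the work this way lets a file be hashed chunk by chunk: feeding "hello " and then "world" gives the same digest as feeding "hello world" in one call.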

Demo

Source code changes

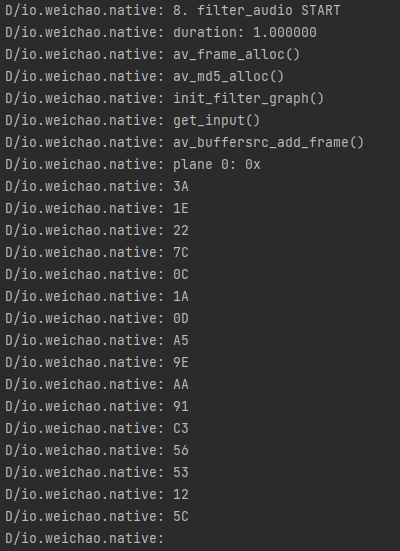

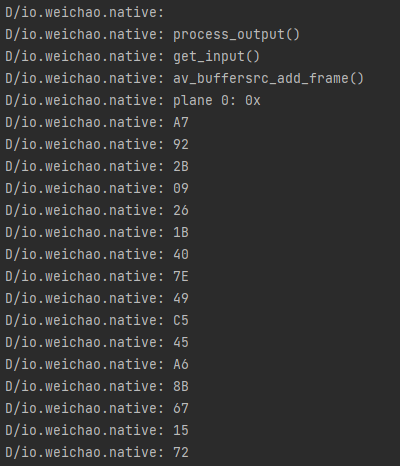

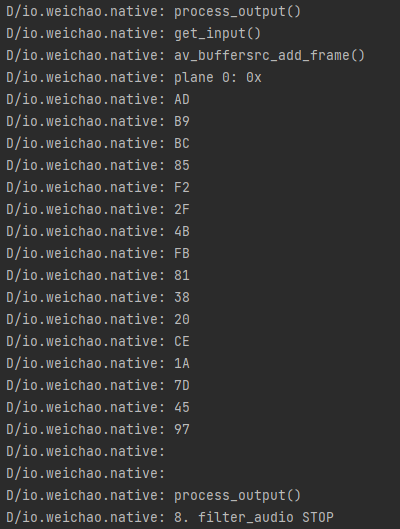

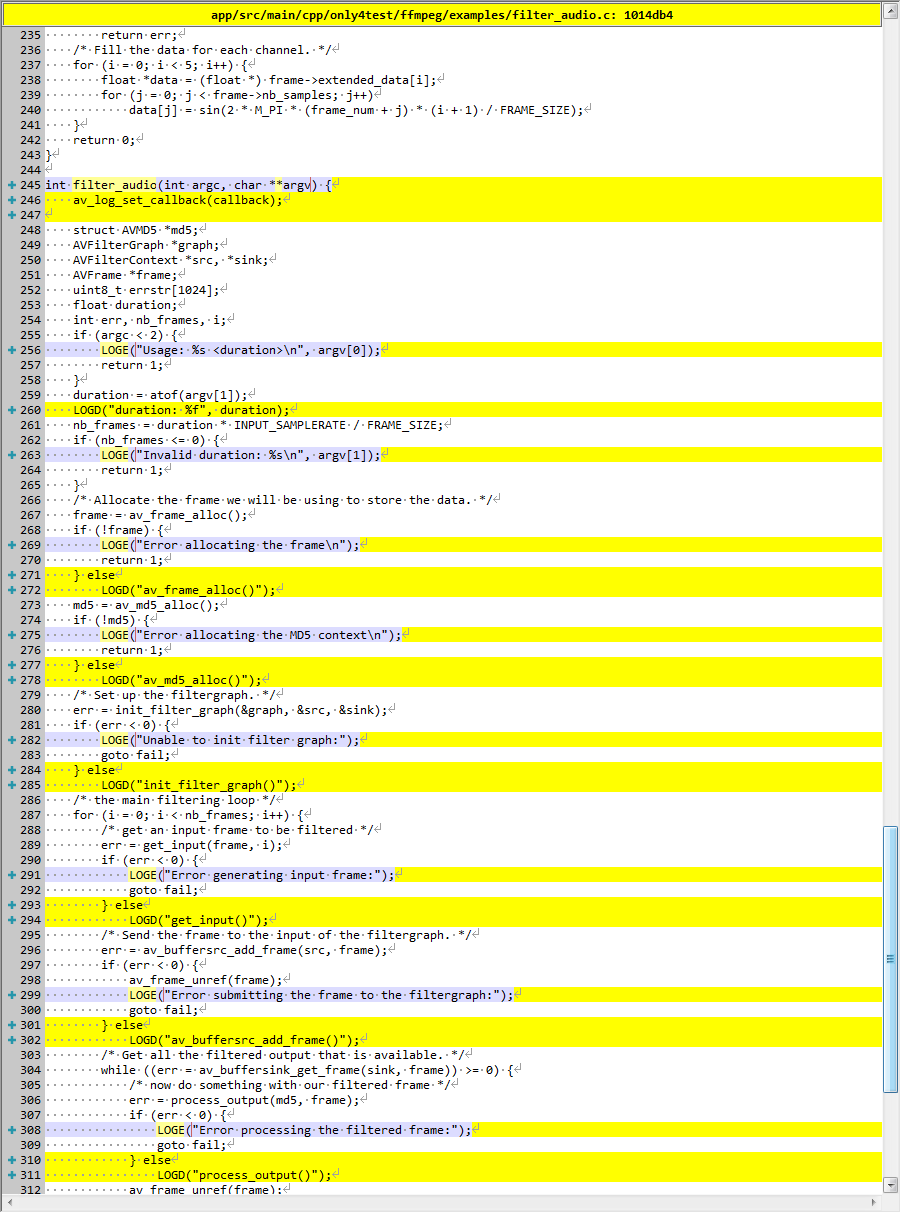

filter_audio

Description

This example will generate a sine wave audio, pass it through a simple filter chain, and then compute the MD5 checksum of the output data.

The filter chain it uses is: (input) -> abuffer -> volume -> aformat -> abuffersink -> (output)

abuffer: This provides the endpoint where you can feed the decoded samples.

volume: In this example we hardcode it to 0.90.

aformat: This converts the samples to the sample rate, channel layout, and sample format required by the audio device.

abuffersink: This provides the endpoint where you can read the samples after they have passed through the filter chain.
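To make the volume stage concrete: with a linear gain of 0.90, the filter's effect on float samples amounts to one multiplication per sample. The sketch below shows only that arithmetic. `apply_volume` is a hypothetical helper, and the real filter is configured through libavfilter and handles several sample formats.

```c
#include <stddef.h>

/* Scales every sample in place by a linear gain factor.
 * A gain of 0.90 lowers the amplitude by 10 percent; 1.0 leaves it unchanged. */
static void apply_volume(float *samples, size_t count, float gain)
{
    for (size_t i = 0; i < count; i++)
        samples[i] *= gain;
}
```

Because the gain is below 1.0, float samples that start inside the nominal range of -1.0 to 1.0 stay inside it, so no clipping step is needed in this sketch.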

Demo

(intermediate log output omitted)

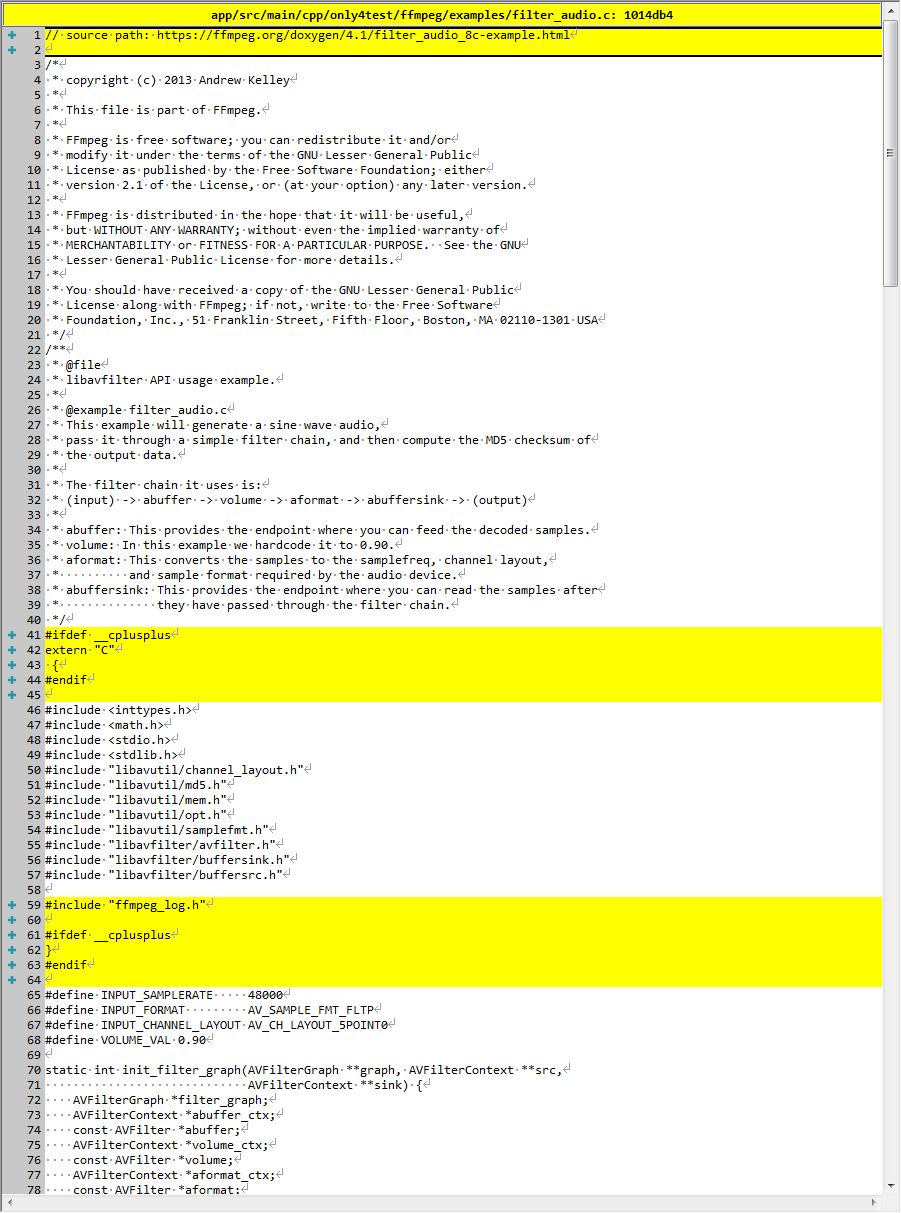

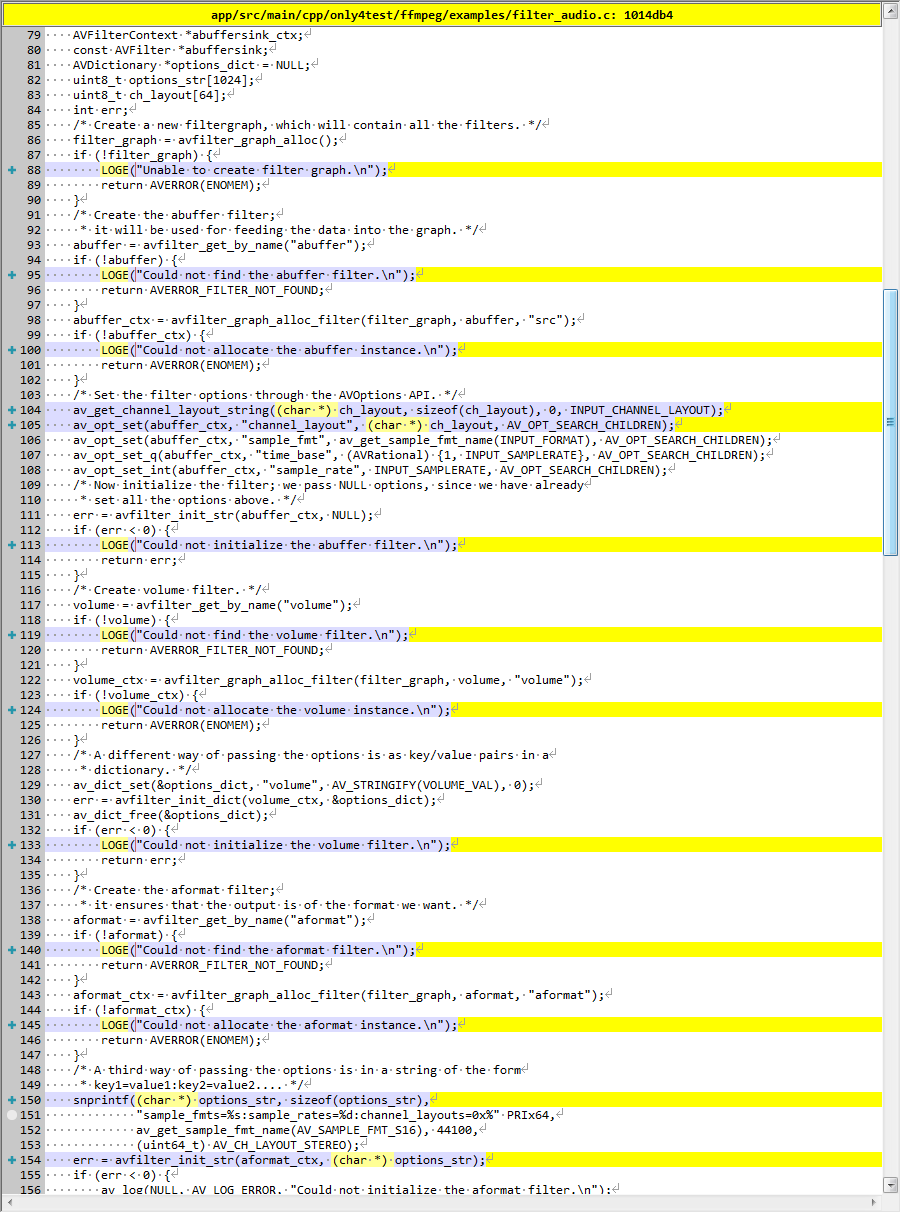

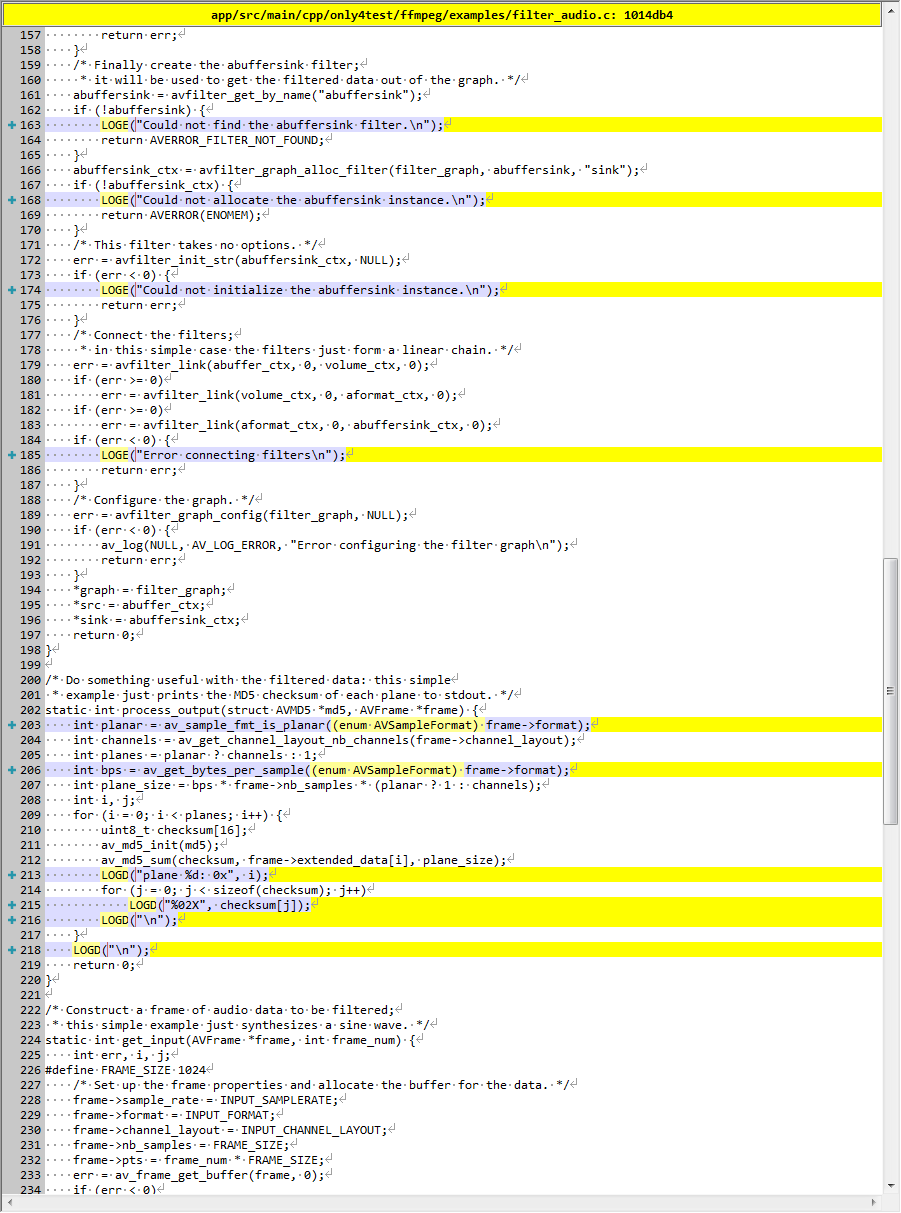

Source code changes

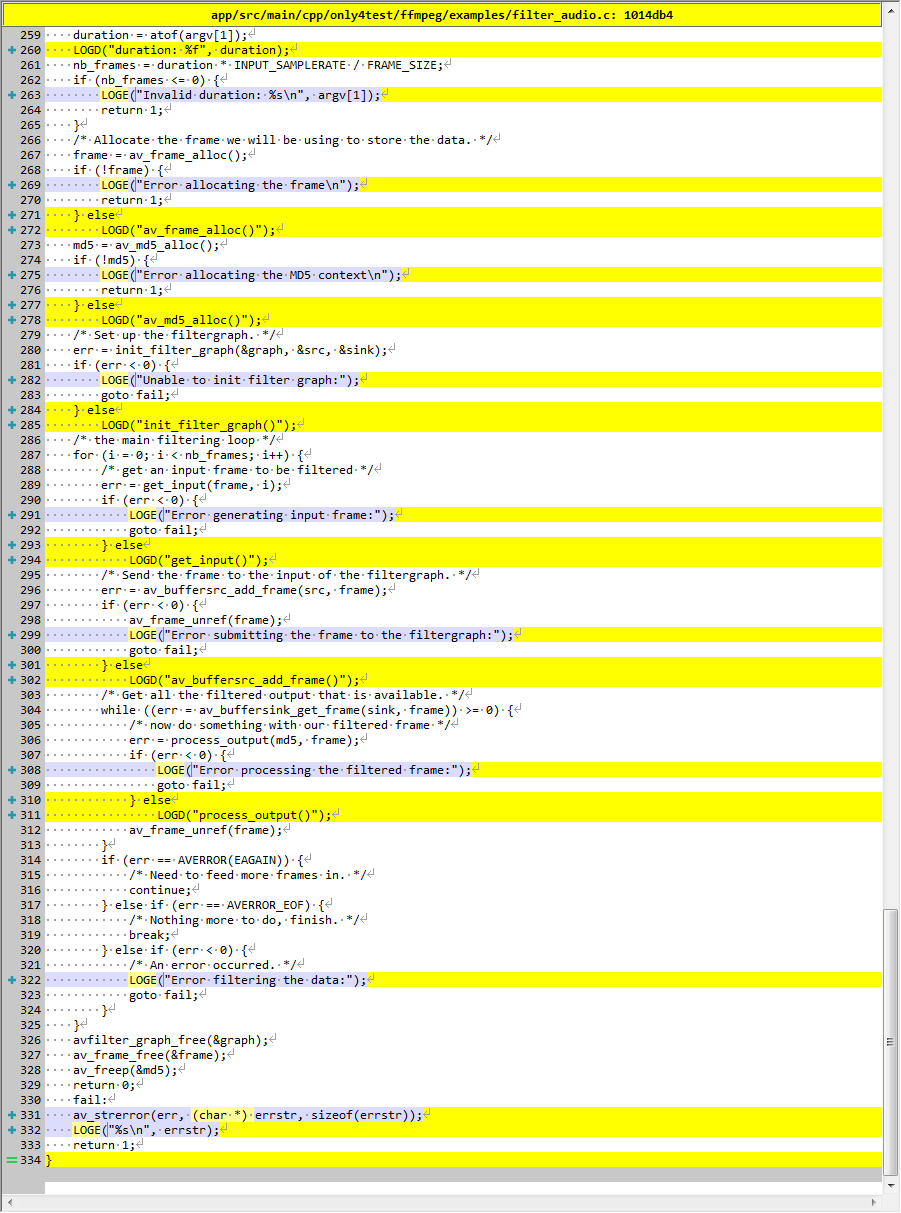

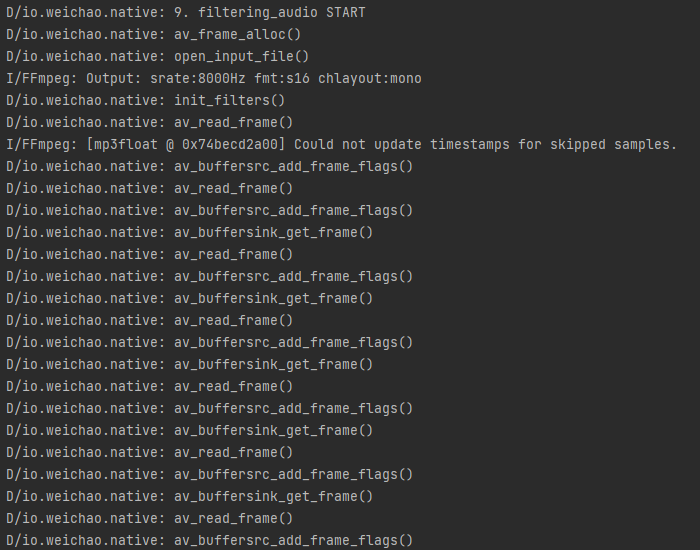

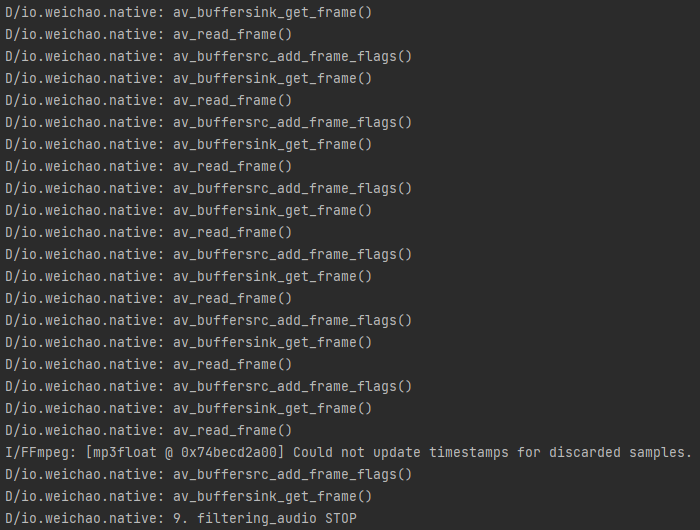

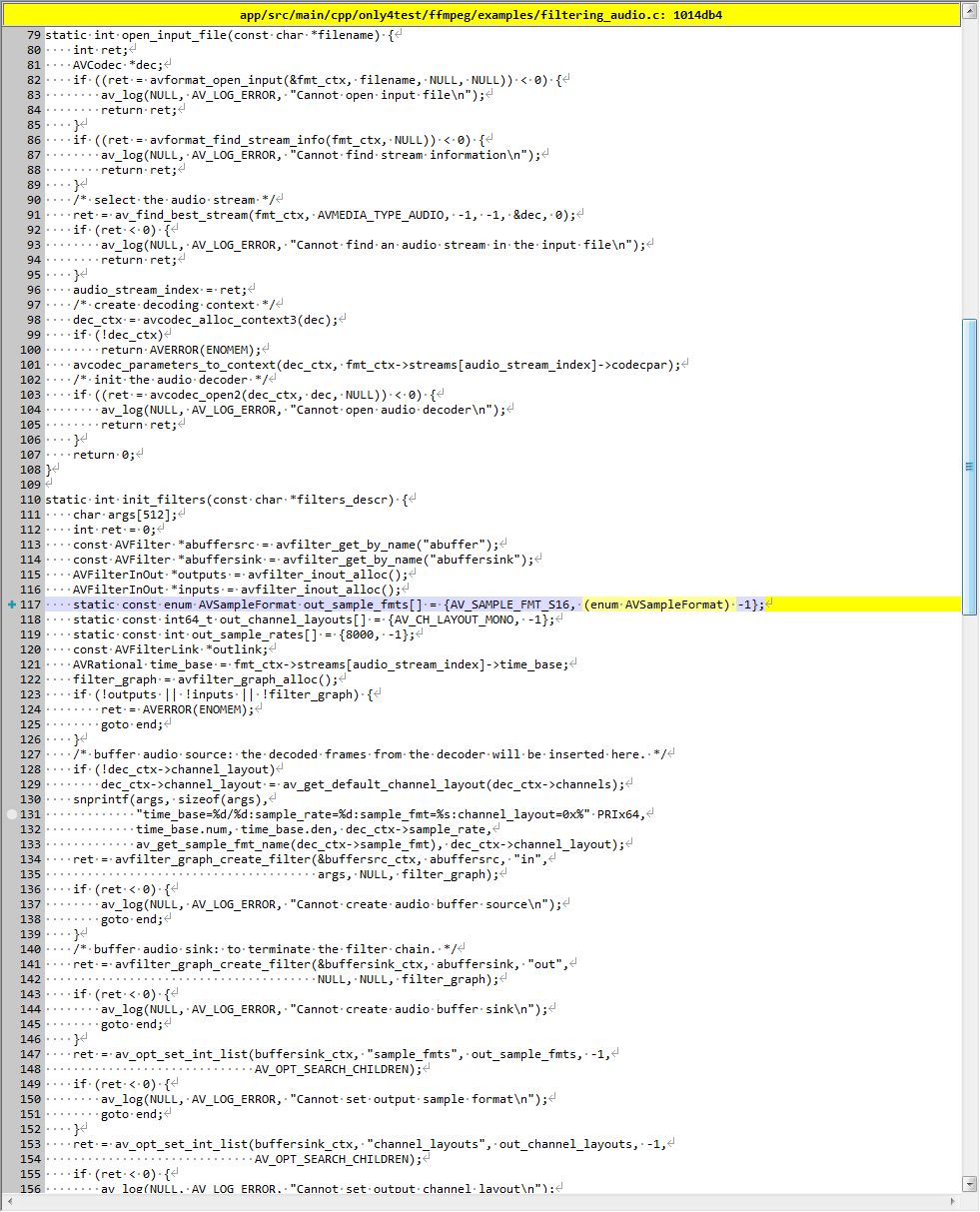

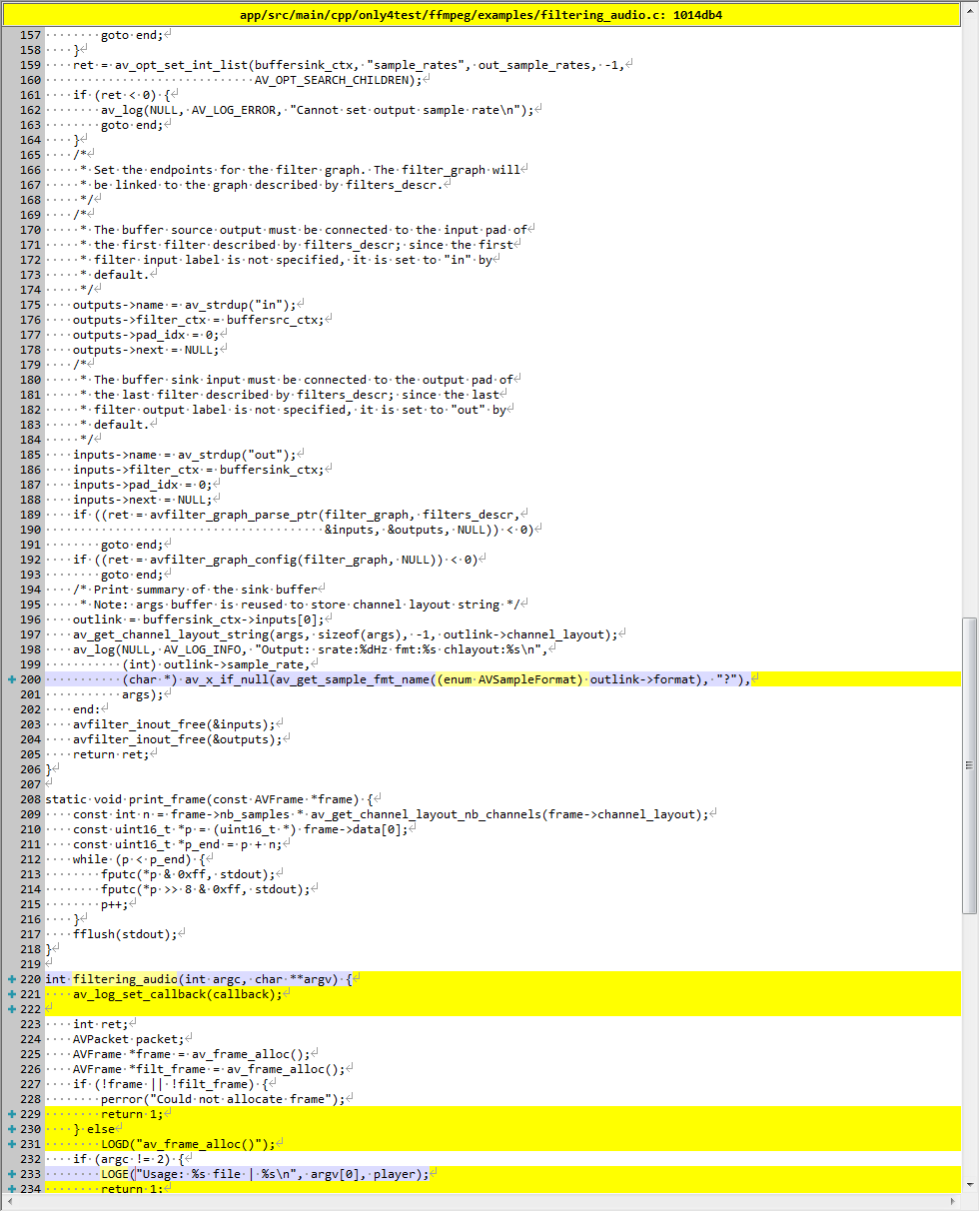

filtering_audio

Description

API example for audio decoding and filtering.

Demo

(intermediate log output omitted)

Source code changes

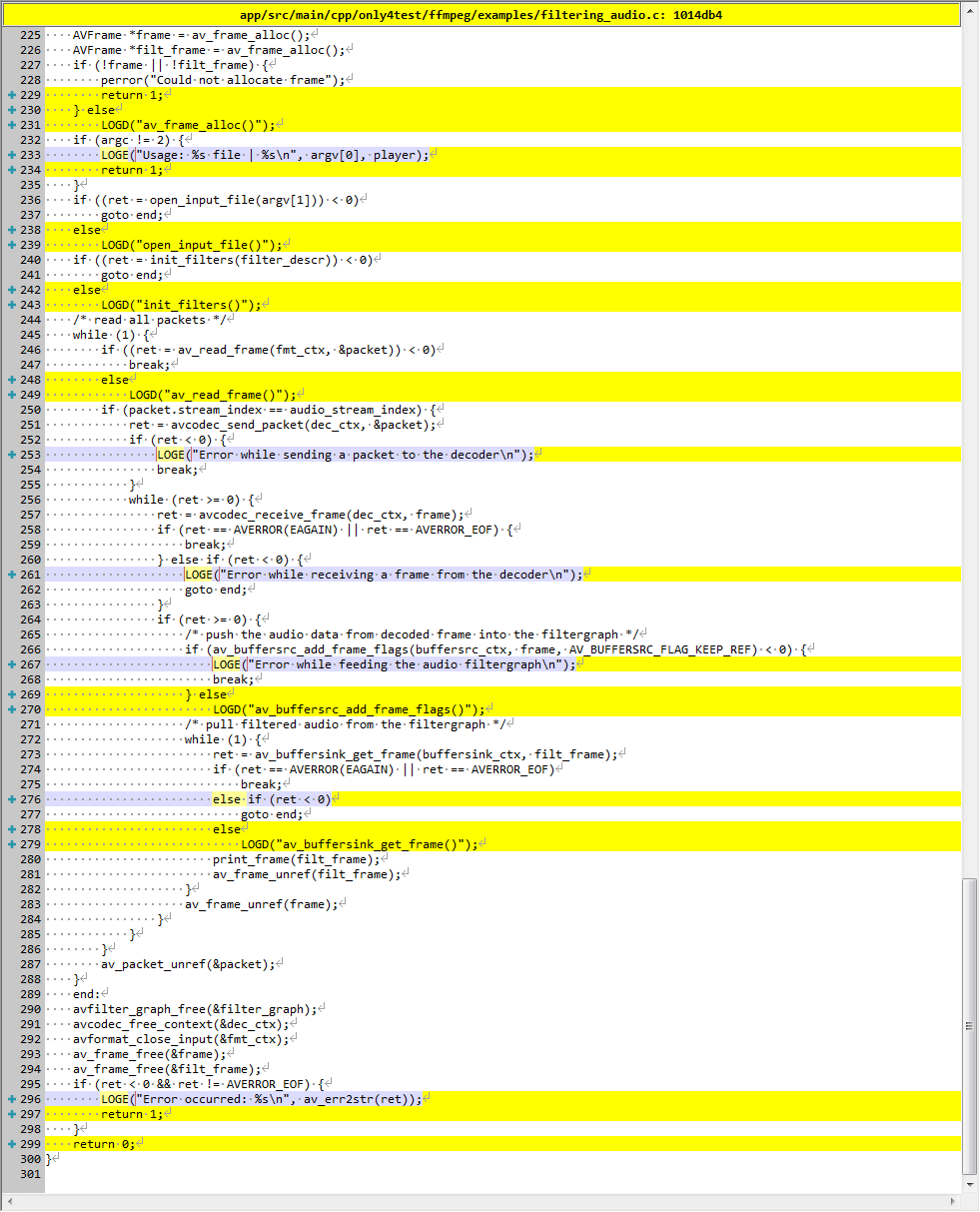

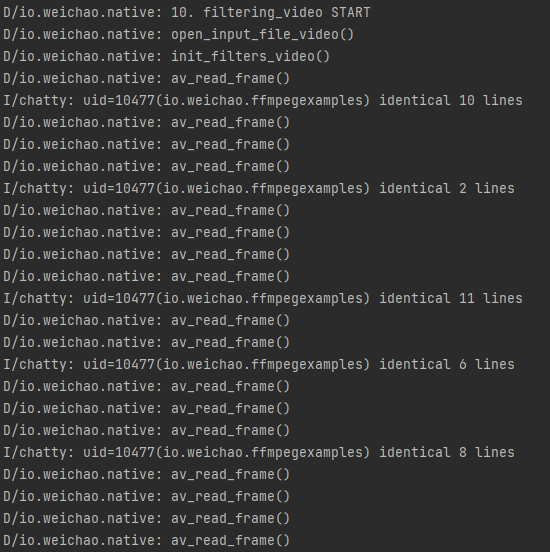

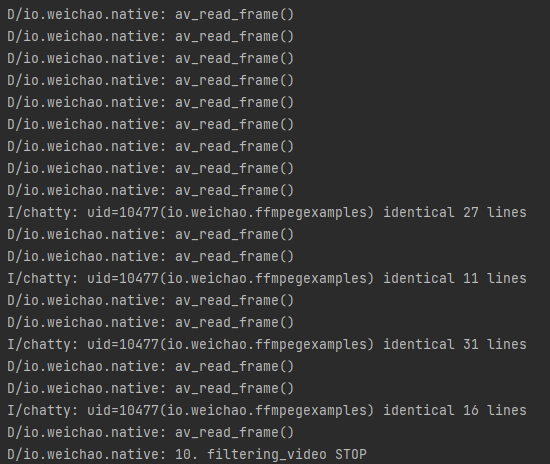

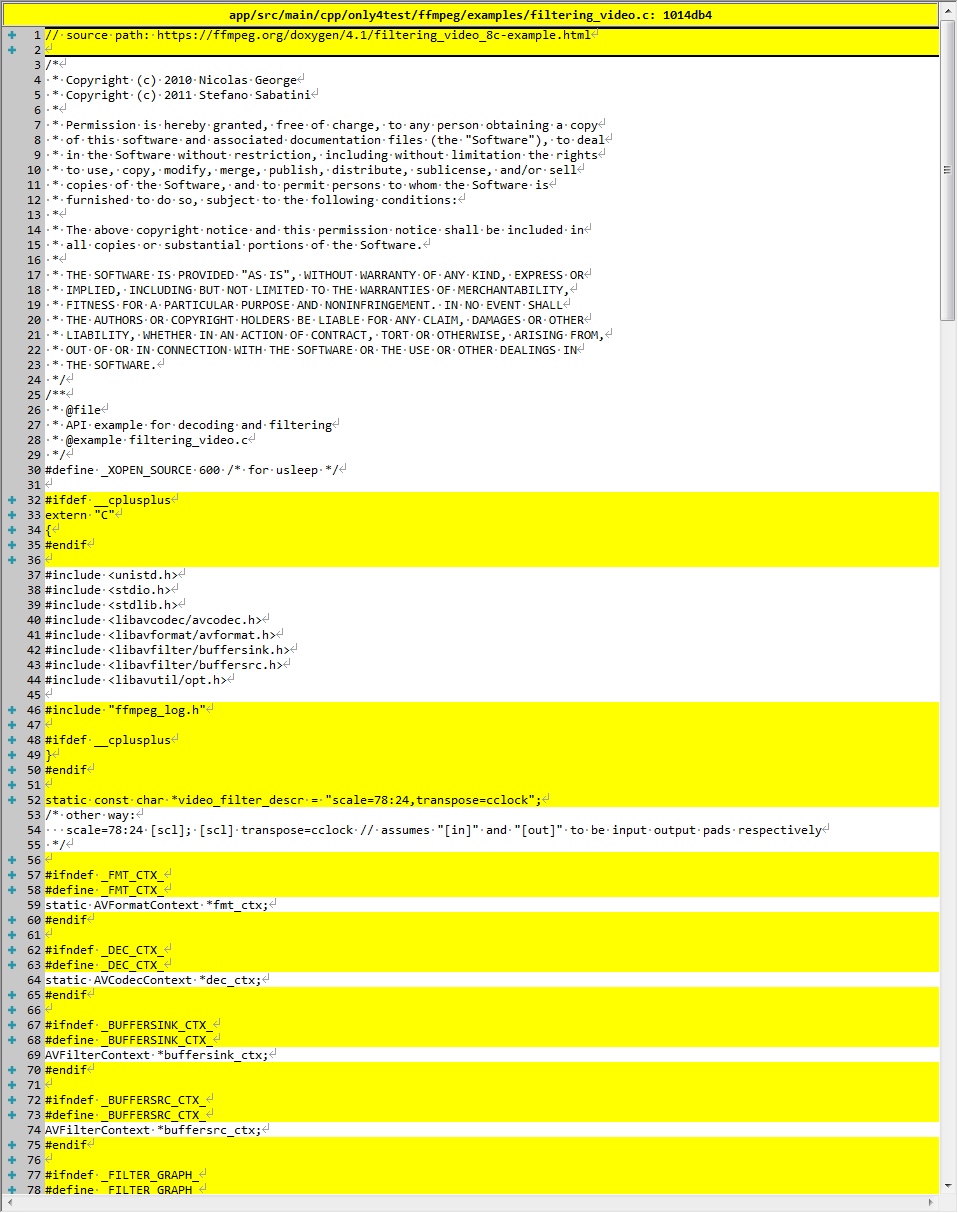

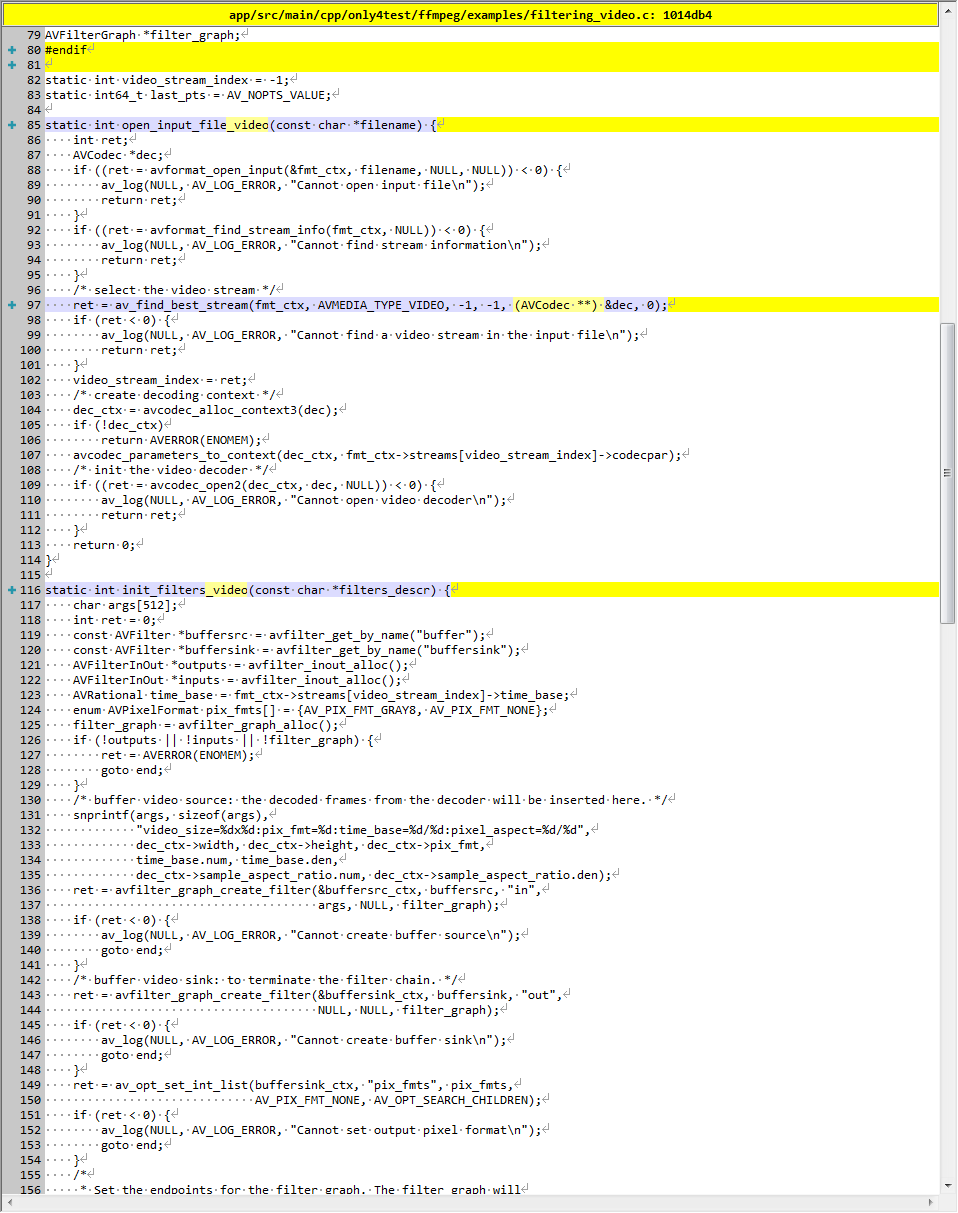

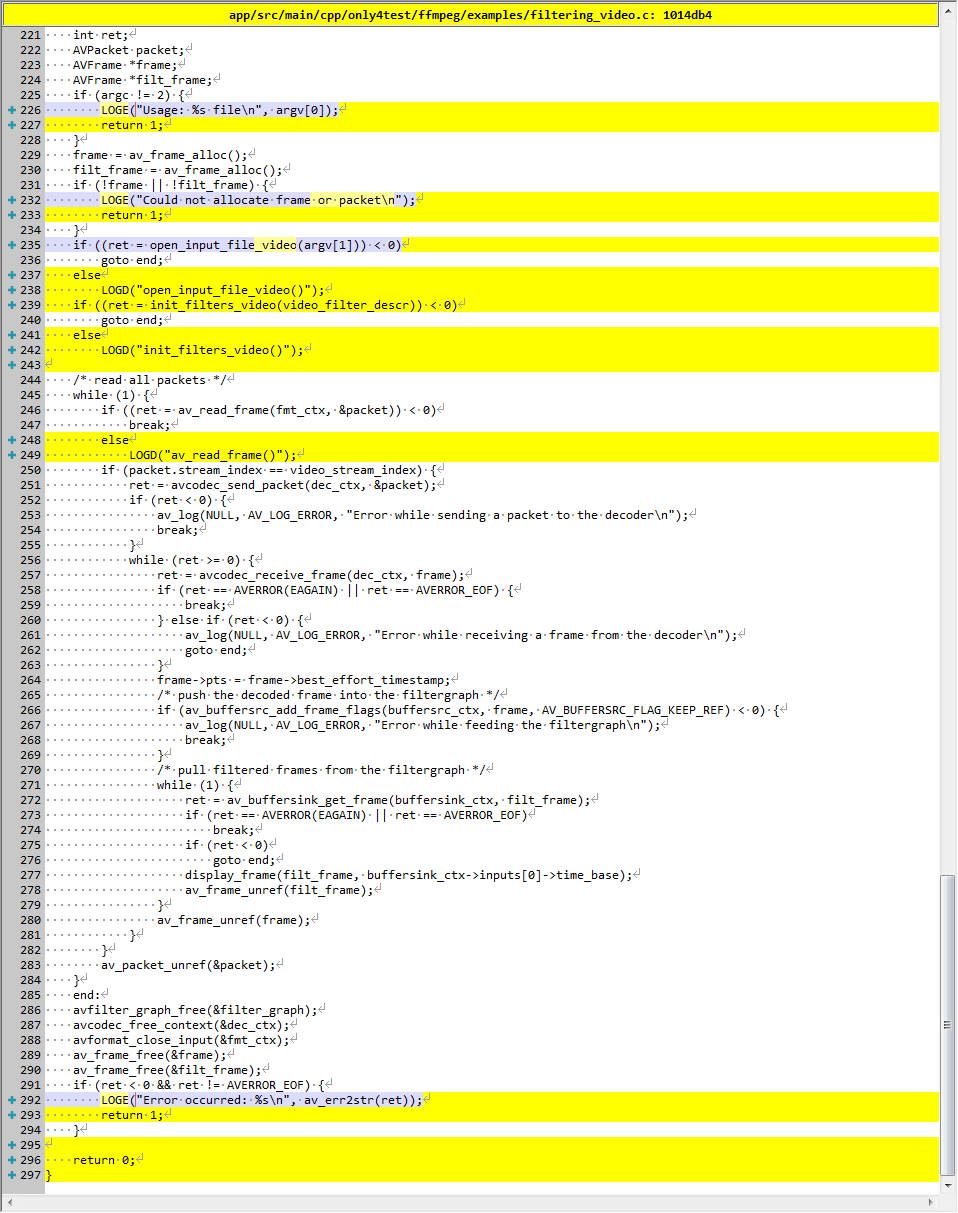

filtering_video

Description

API example for decoding and filtering.

Demo

(intermediate log output omitted)

Source code changes

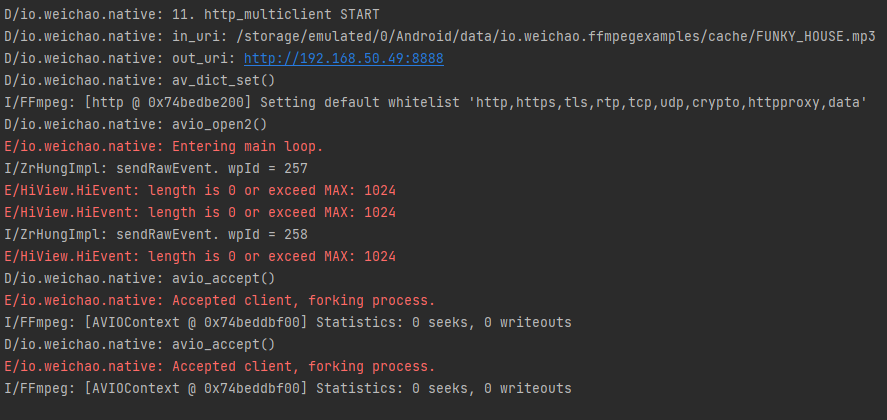

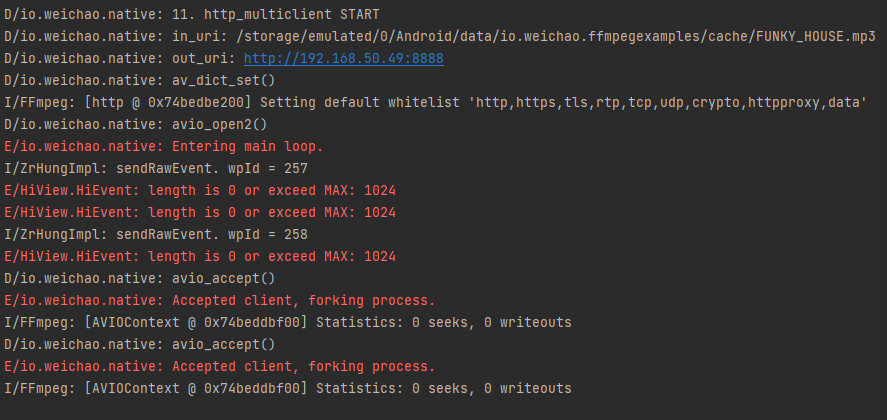

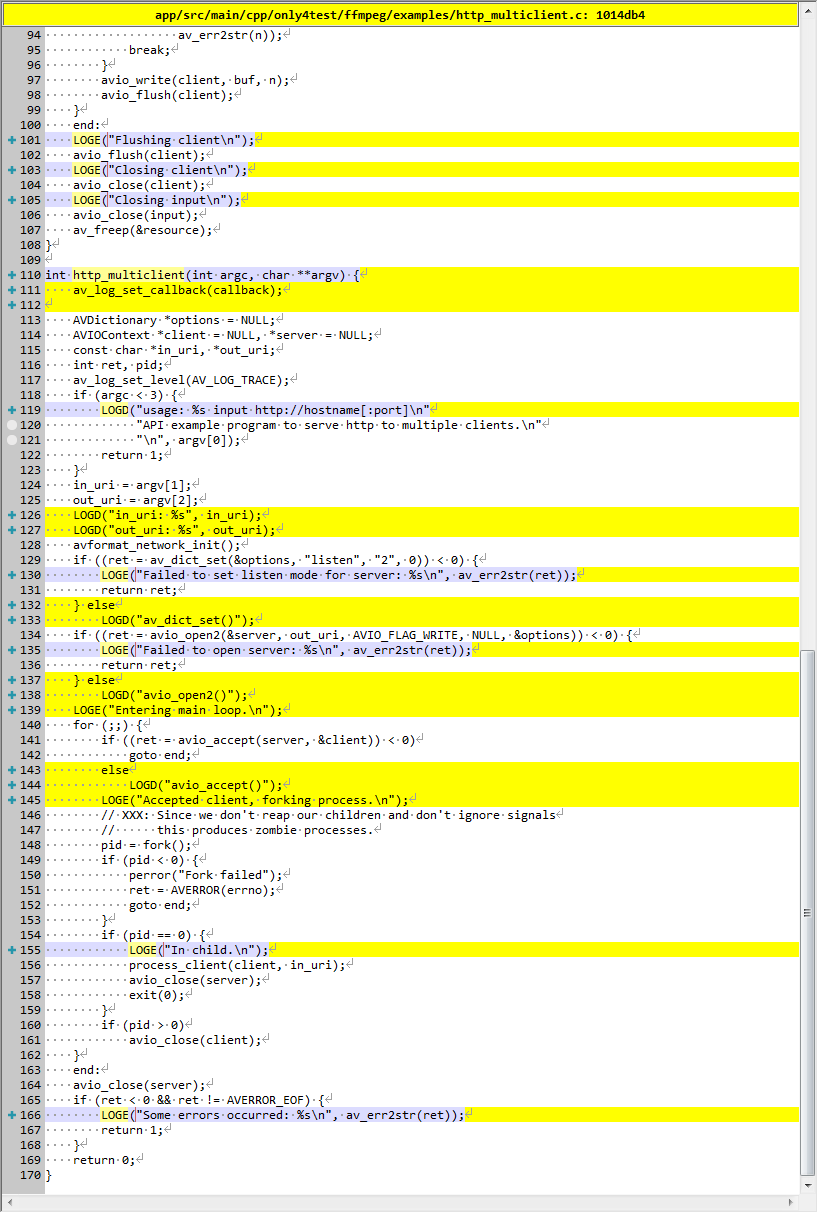

http_multiclient

Description

libavformat multi-client network API usage example.

This example will serve a file over HTTP without decoding or demuxing it. Multiple clients can connect and will receive the same file.

API example program to serve HTTP to multiple clients.

Demo

1. Determine the IP address of the Android device, for example 192.168.50.49.

2. Run the code. The Android device acts as the HTTP server and listens on port 8888; the file served is /storage/emulated/0/Android/data/io.weichao.ffmpegexamples/cache/FUNKY_HOUSE.mp3

3. Other devices on the same subnet can download the file from http://192.168.50.49:8888/?resource=/storage/emulated/0/Android/data/io.weichao.ffmpegexamples/cache/FUNKY_HOUSE.mp3
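The download URL in step 3 is the device address, the port, and the file path joined together. A small sketch of that assembly follows; `build_resource_url` is a hypothetical helper, and it inserts the path verbatim without any percent-encoding, which matches the URL shown above.

```c
#include <stddef.h>
#include <stdio.h>

/* Builds "http://<host>:<port>/?resource=<path>" into out.
 * Returns 0 on success, -1 if out is too small or formatting fails.
 * The path is inserted as given; no percent-encoding is applied. */
static int build_resource_url(char *out, size_t out_size,
                              const char *host, int port, const char *path)
{
    int n = snprintf(out, out_size, "http://%s:%d/?resource=%s", host, port, path);

    return (n < 0 || (size_t)n >= out_size) ? -1 : 0;
}
```

Called with "192.168.50.49", 8888, and the cache path of FUNKY_HOUSE.mp3, it reproduces the URL from step 3.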

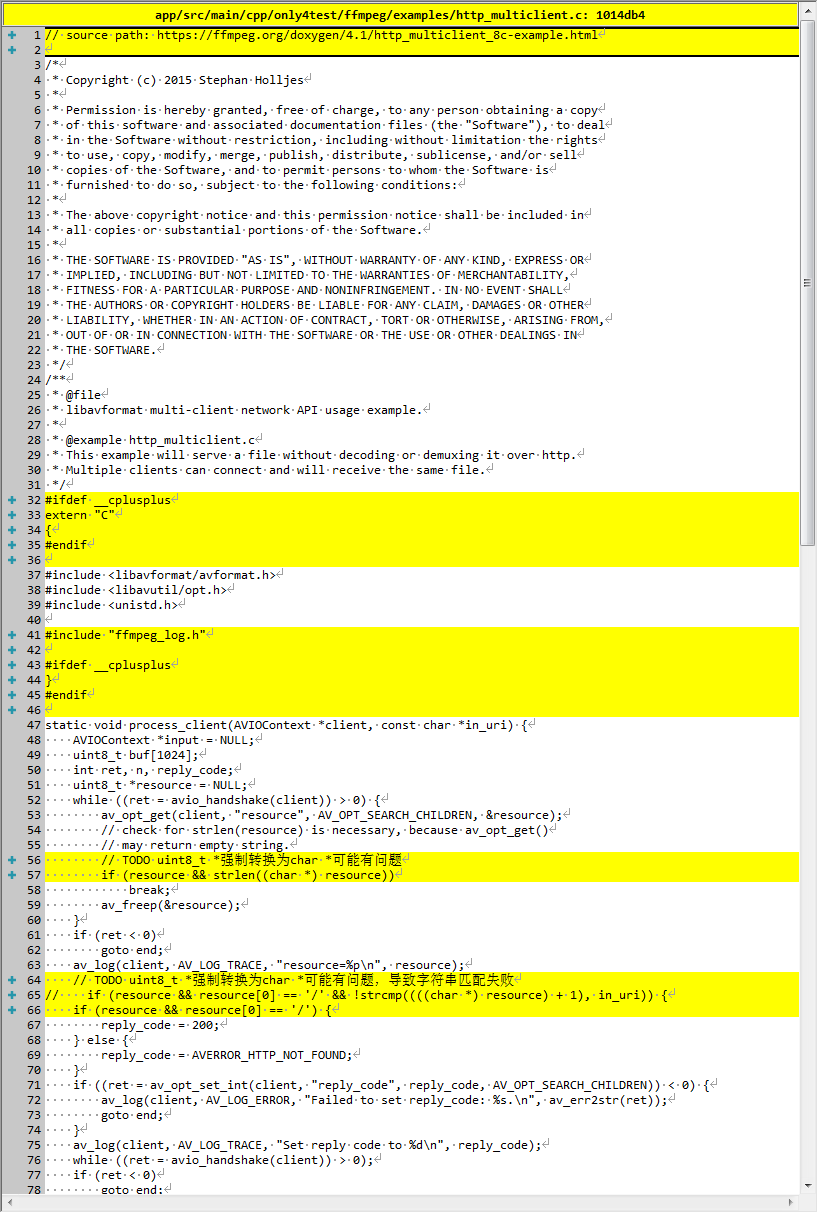

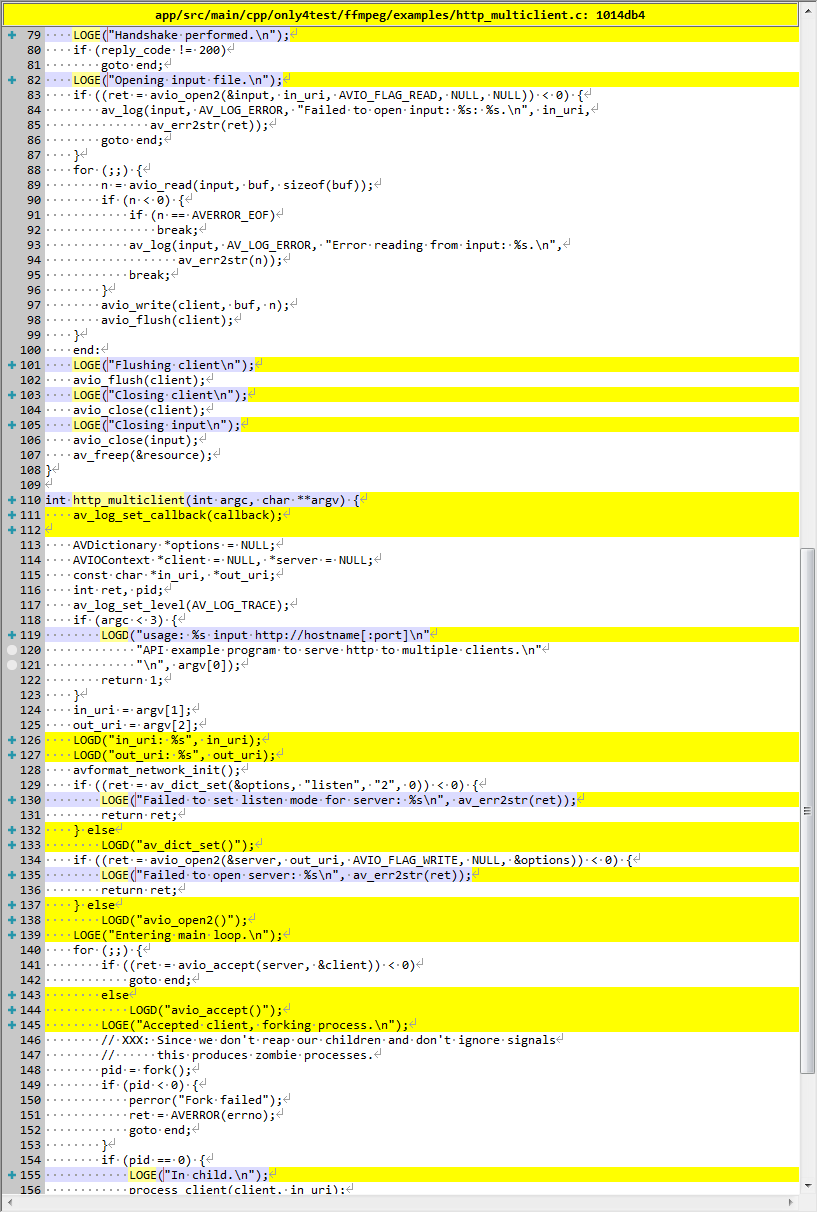

Source code changes

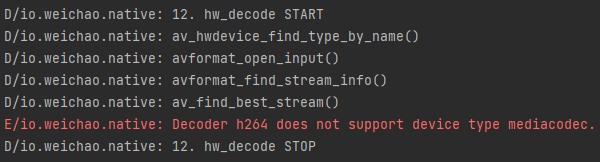

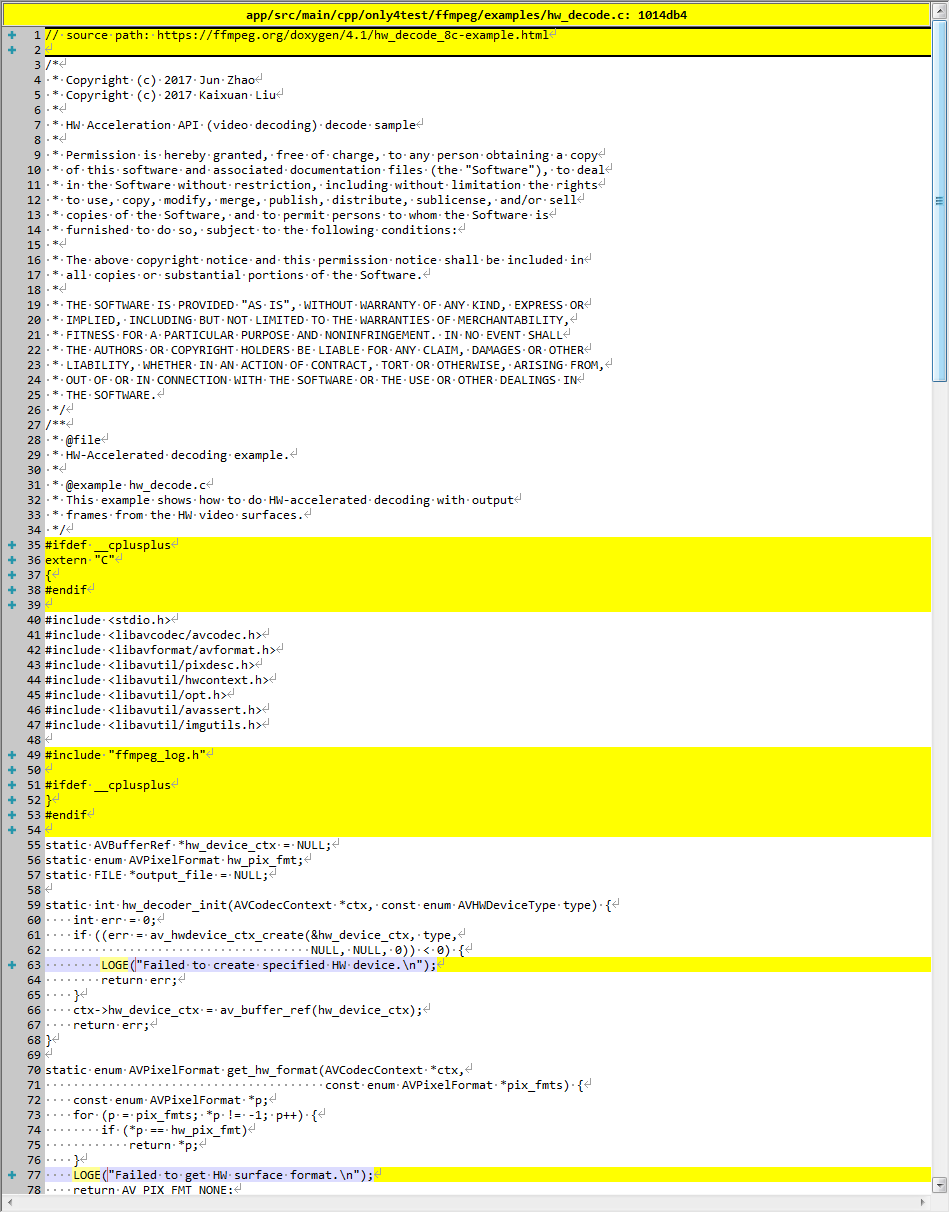

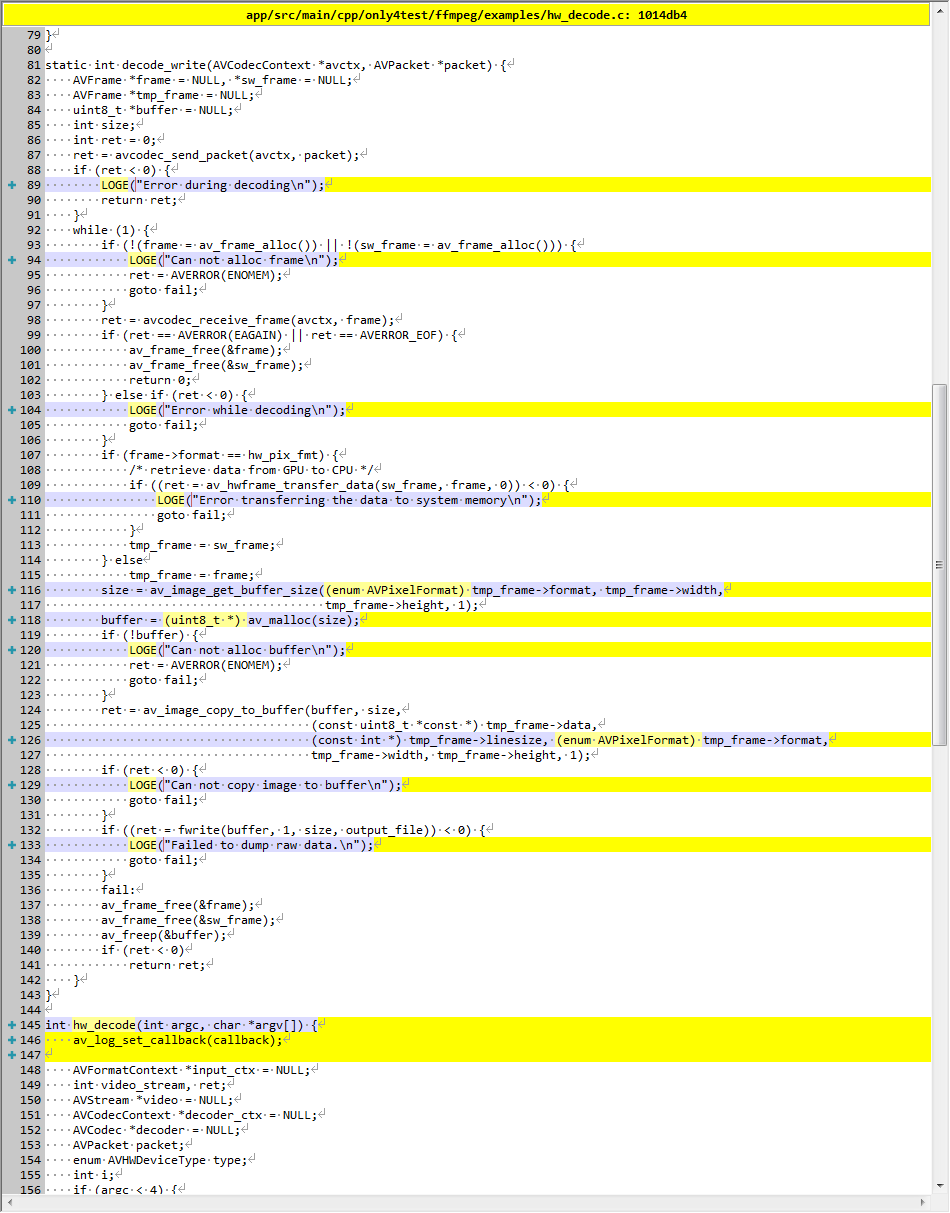

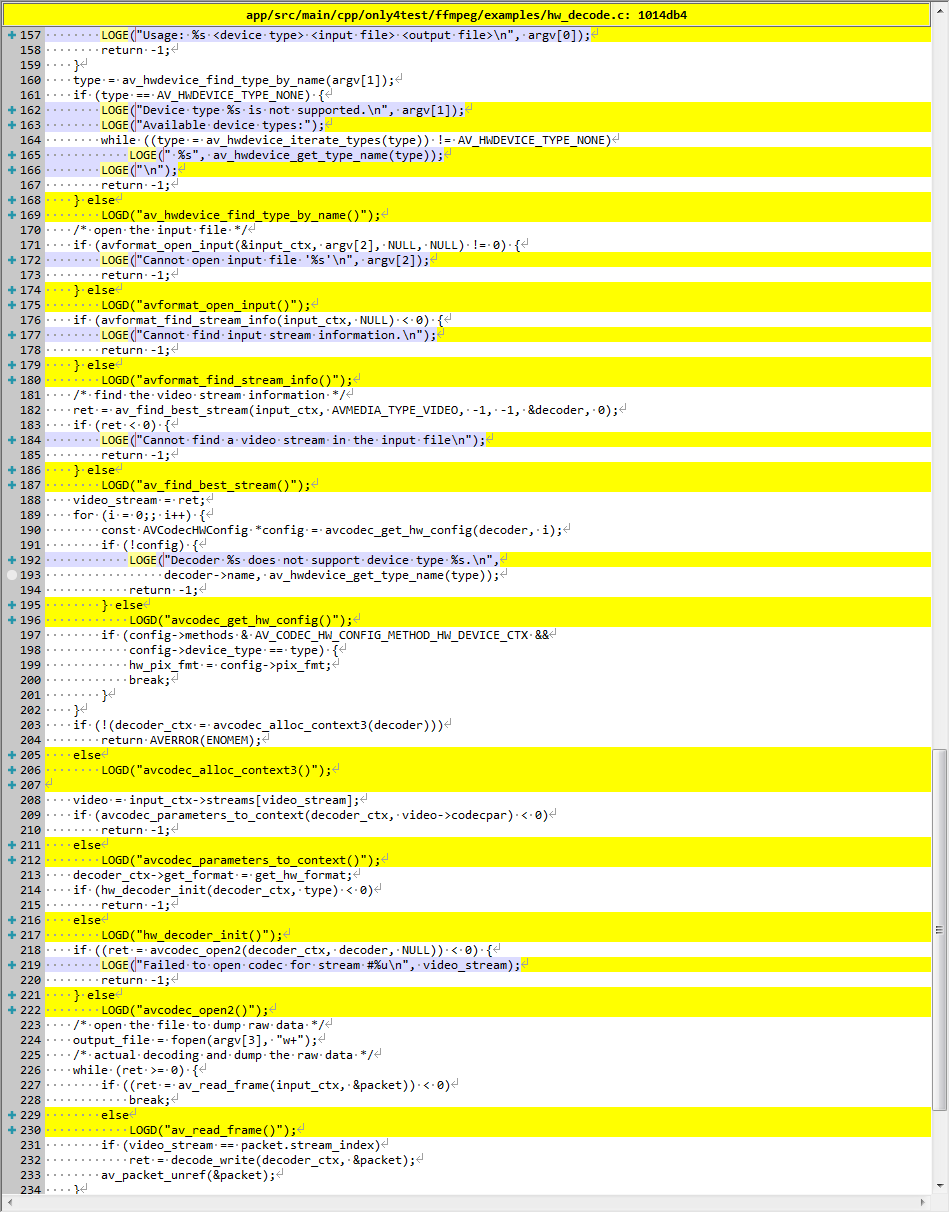

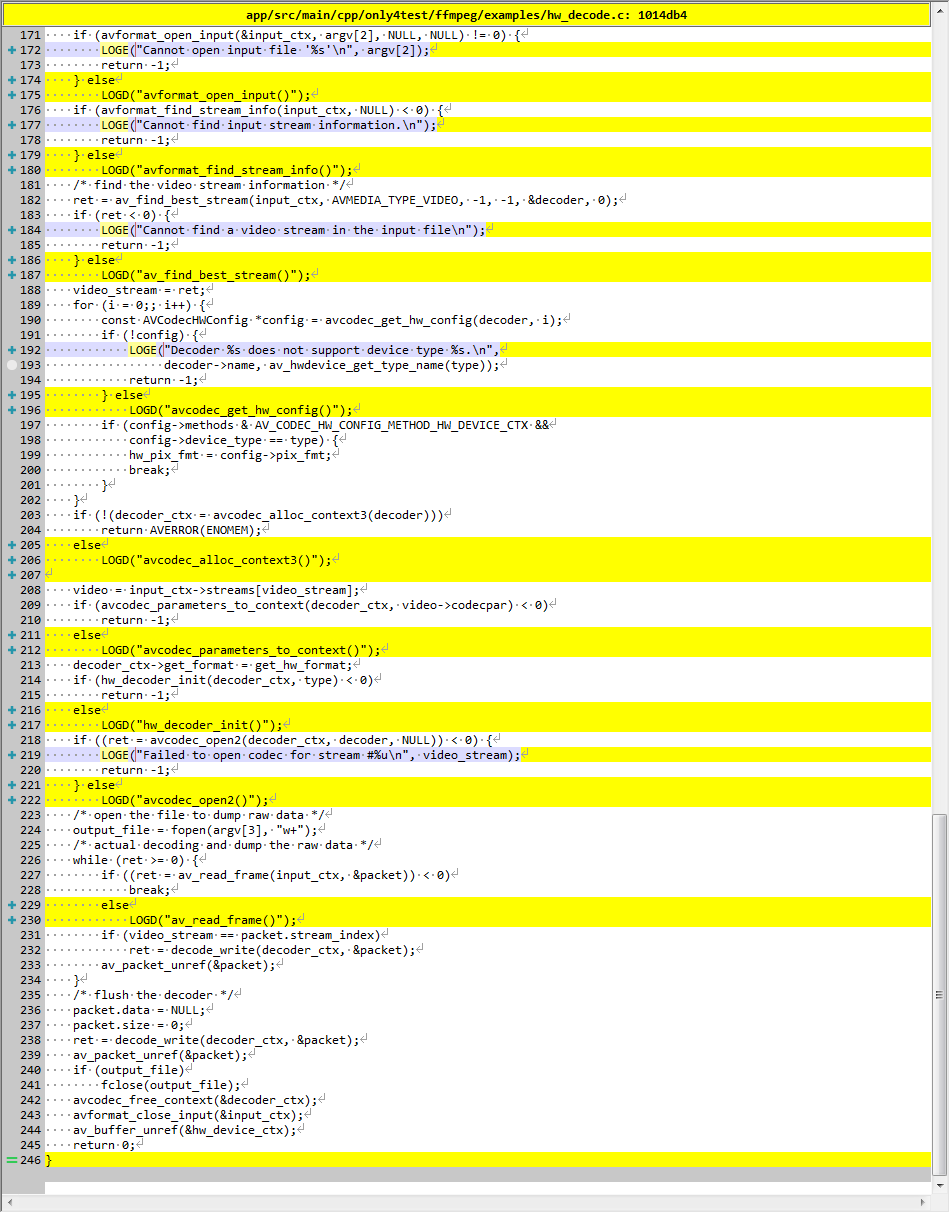

hw_decode TODO

Description

HW-Accelerated decoding example.

This example shows how to do HW-accelerated decoding with output frames from the HW video surfaces.

Demo

TODO: not yet tested successfully.

Source code changes

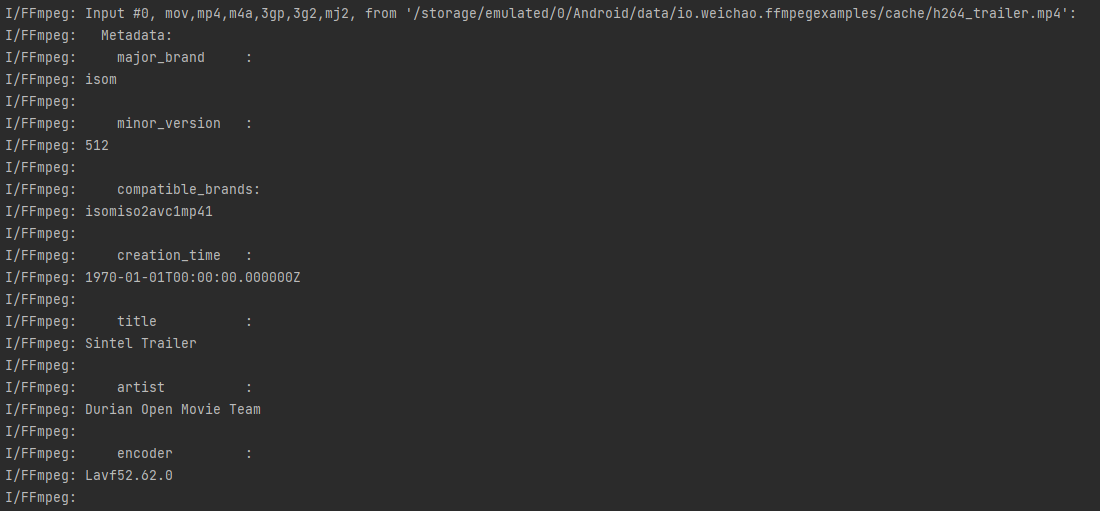

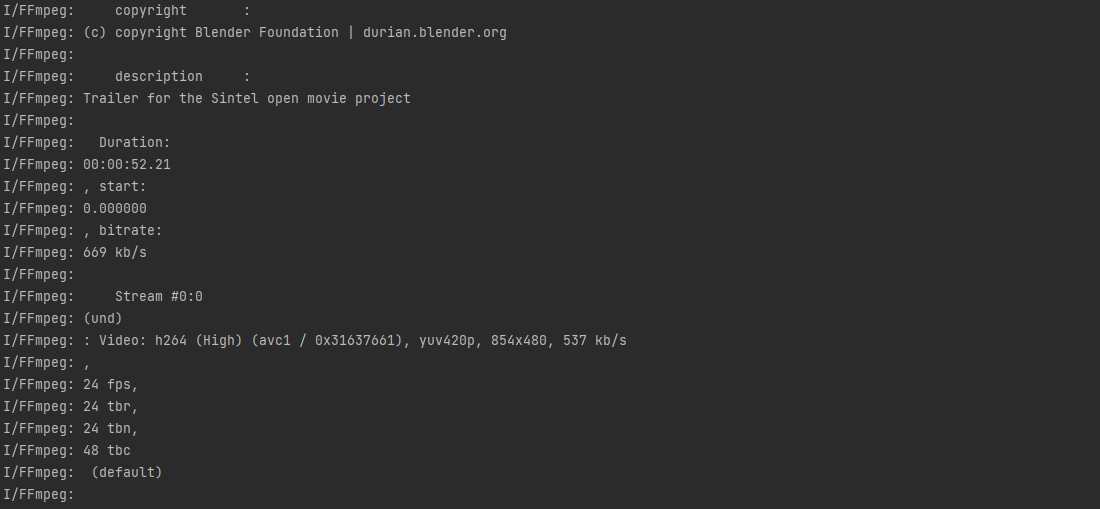

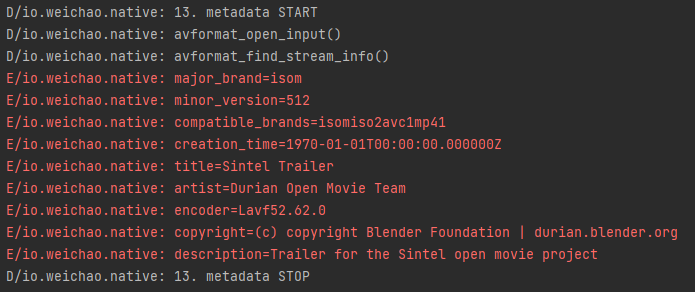

metadata

Description

Shows how the metadata API can be used in application programs.

Example program to demonstrate the use of the libavformat metadata API.

Demo

Source code changes

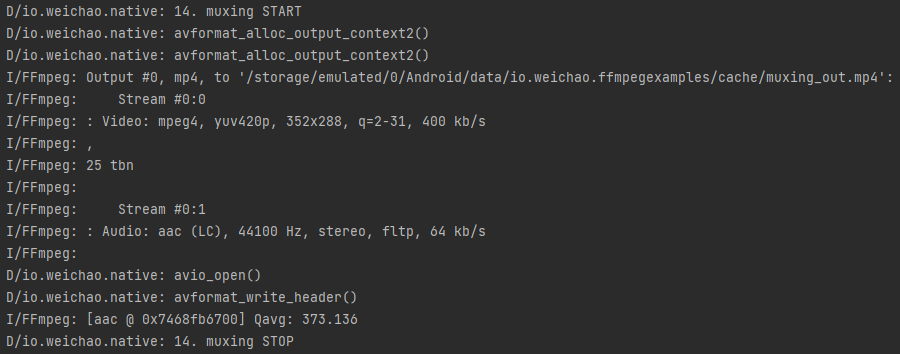

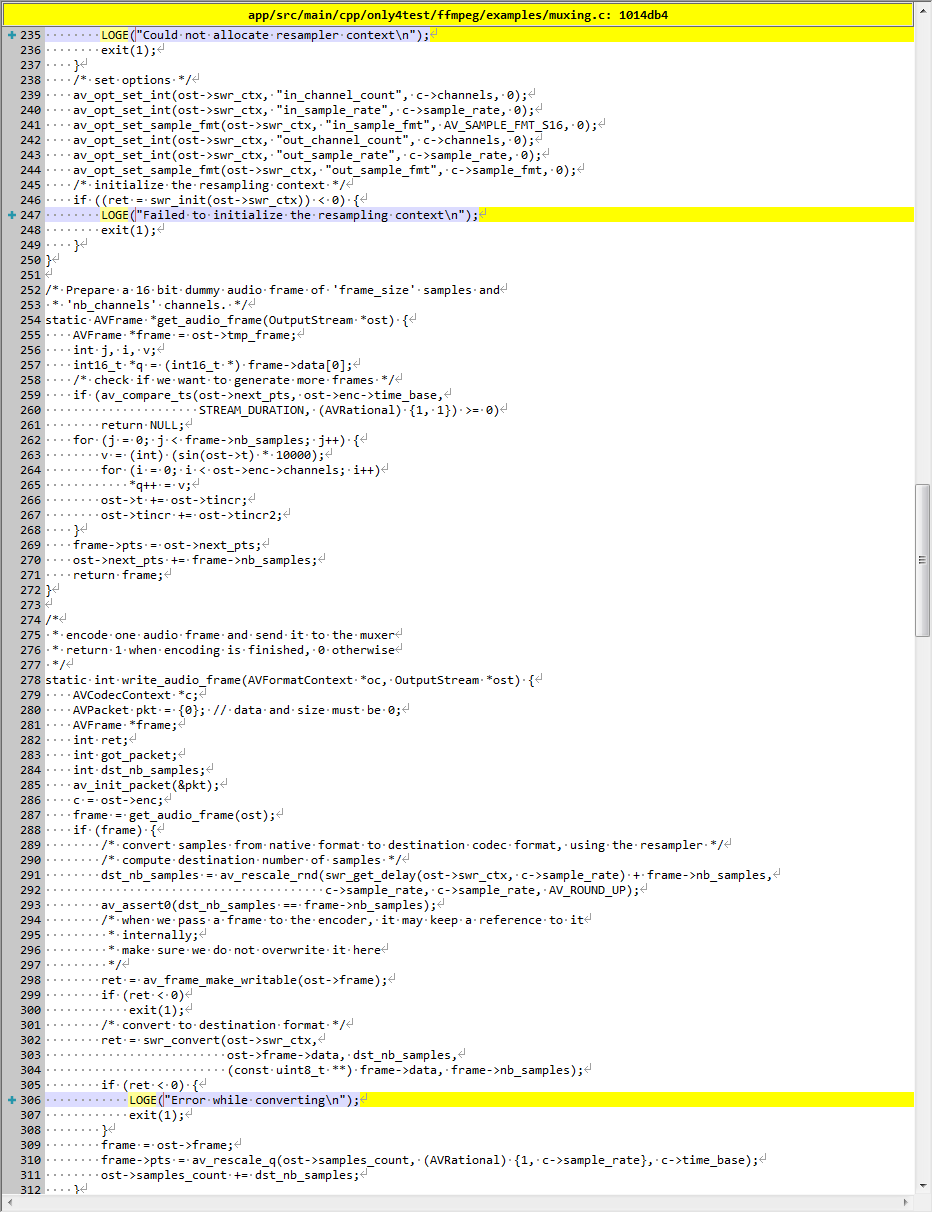

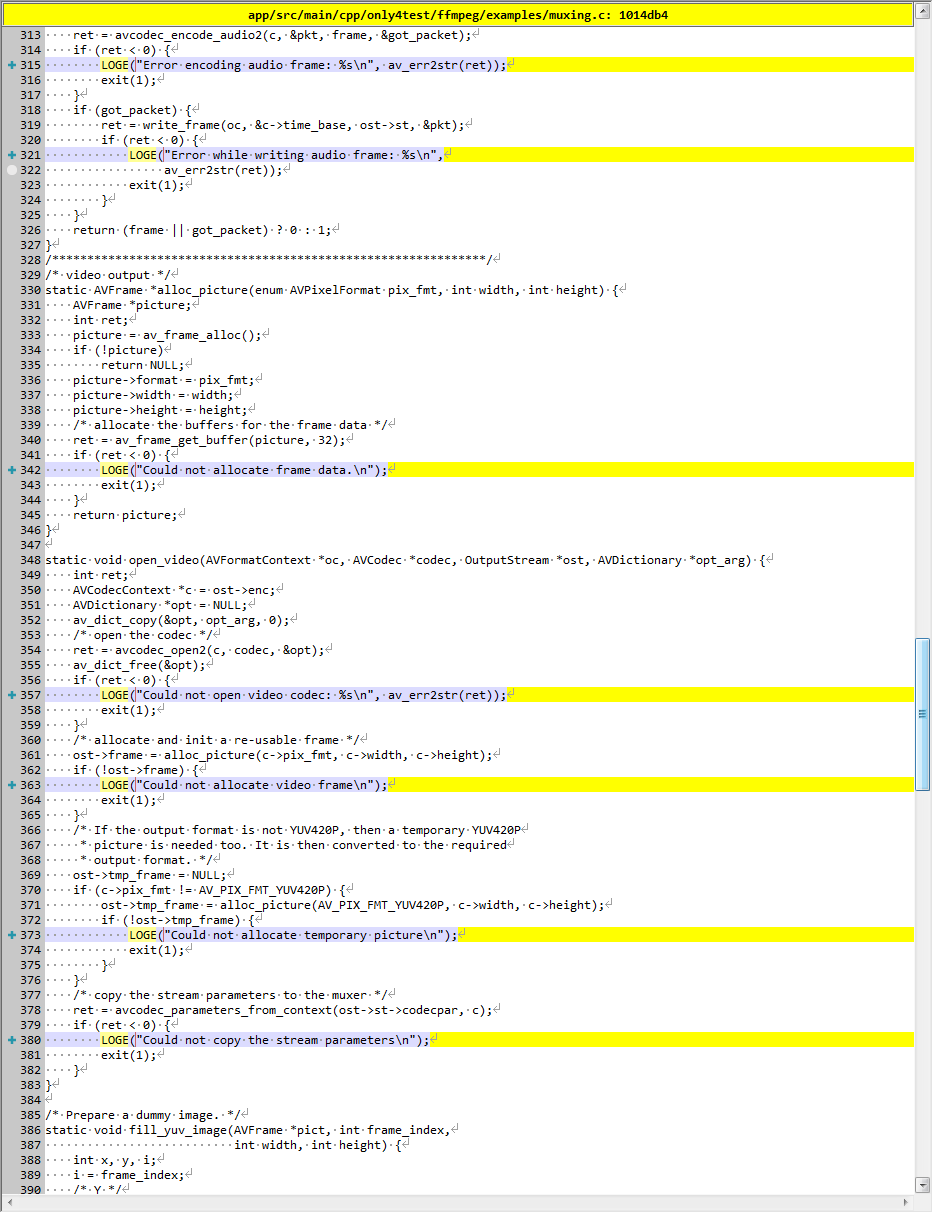

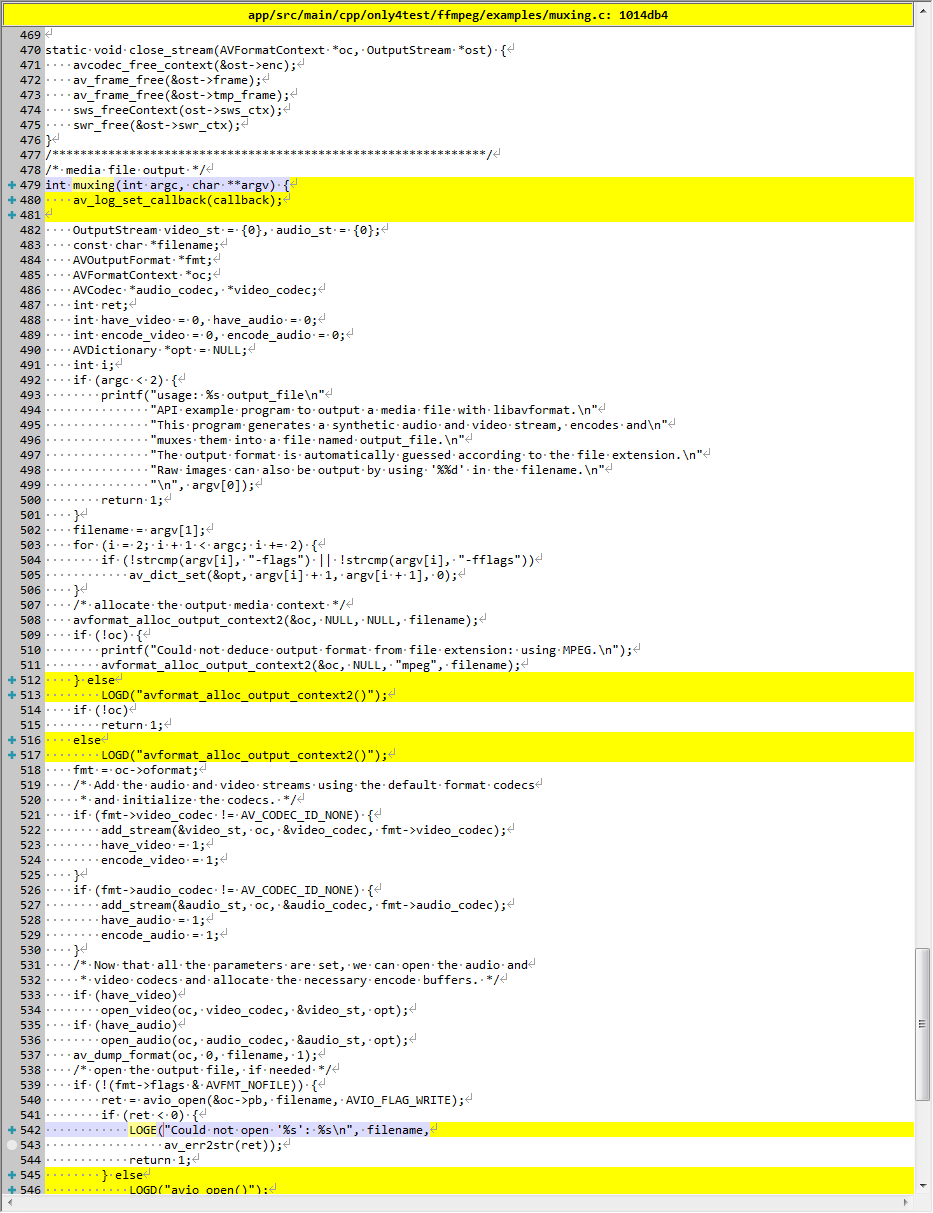

muxing

Description

libavformat API example.

Output a media file in any supported libavformat format. The default codecs are used.

API example program to output a media file with libavformat.

This program generates a synthetic audio and video stream, encodes and muxes them into a file named output_file.

The output format is automatically guessed according to the file extension.

Raw images can also be output by using '%d' in the filename.
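Guessing the format from the file name starts with isolating the extension. The sketch below shows only that first step; `file_extension` is a hypothetical helper, and the actual lookup is done inside libavformat (the upstream example hands the file name to `avformat_alloc_output_context2`).

```c
#include <string.h>

/* Returns the extension of filename without the dot, or NULL if there is none.
 * A dot inside a directory name, a leading dot, or a trailing dot does not count. */
static const char *file_extension(const char *filename)
{
    const char *slash = strrchr(filename, '/');
    const char *base = slash ? slash + 1 : filename;
    const char *dot = strrchr(base, '.');

    if (!dot || dot == base || dot[1] == '\0')
        return NULL;
    return dot + 1;
}
```

Restricting the search to the last path component keeps a directory such as "dir.v2" from being mistaken for an extension.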

Demo

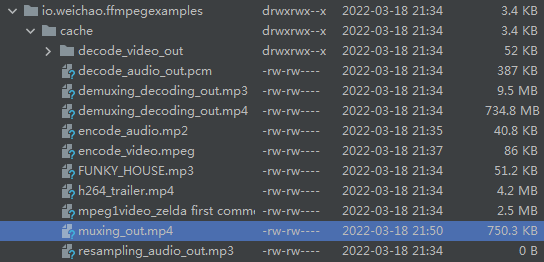

The output file is generated at the specified location:

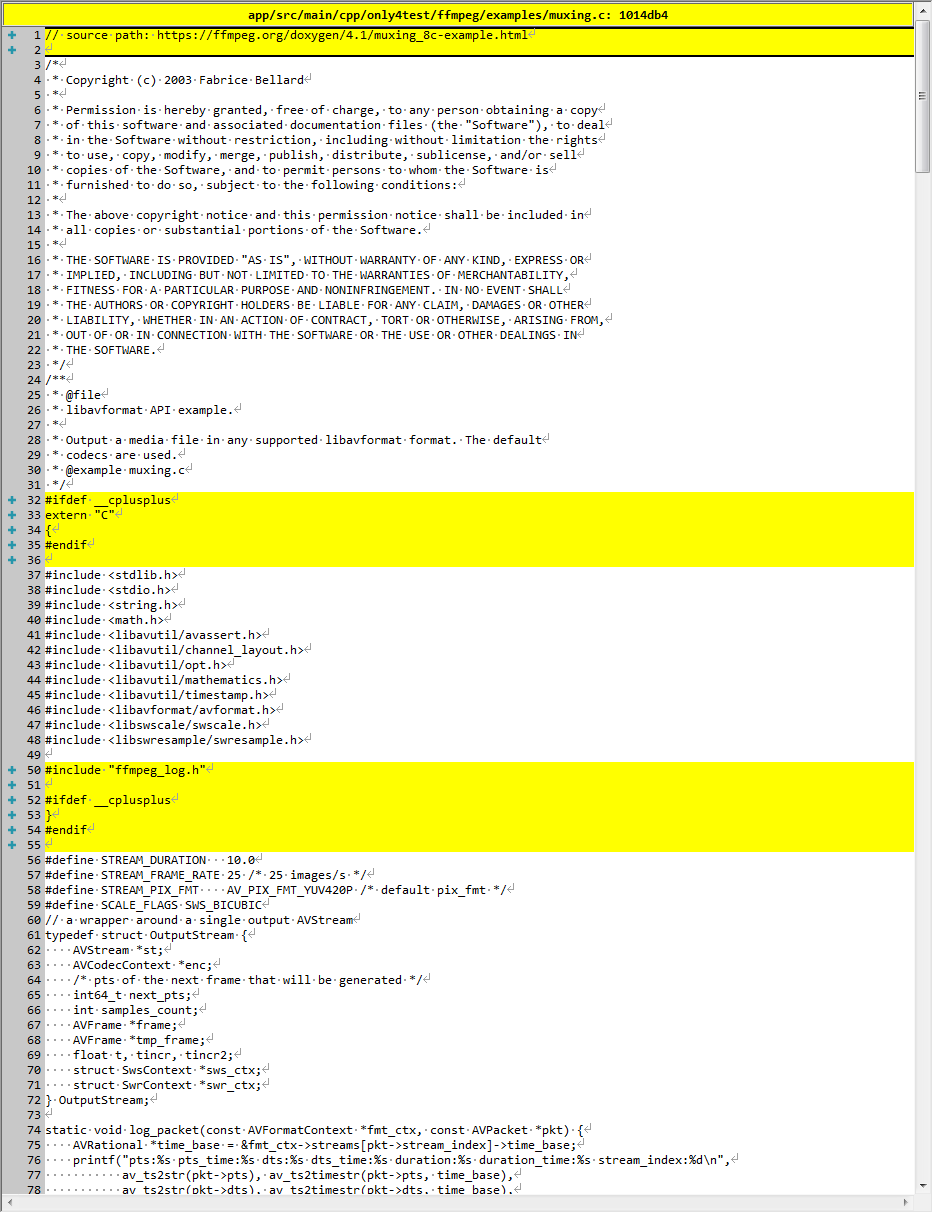

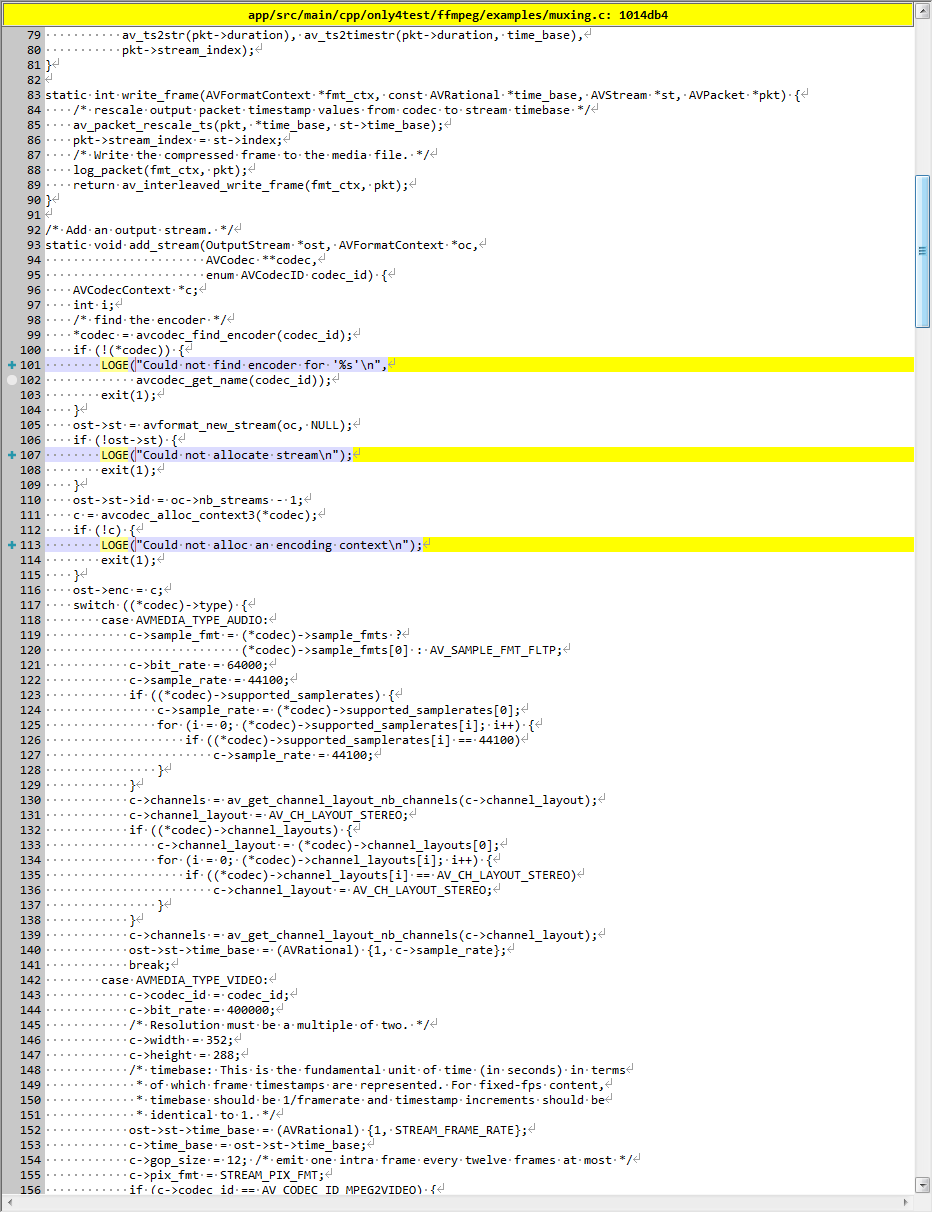

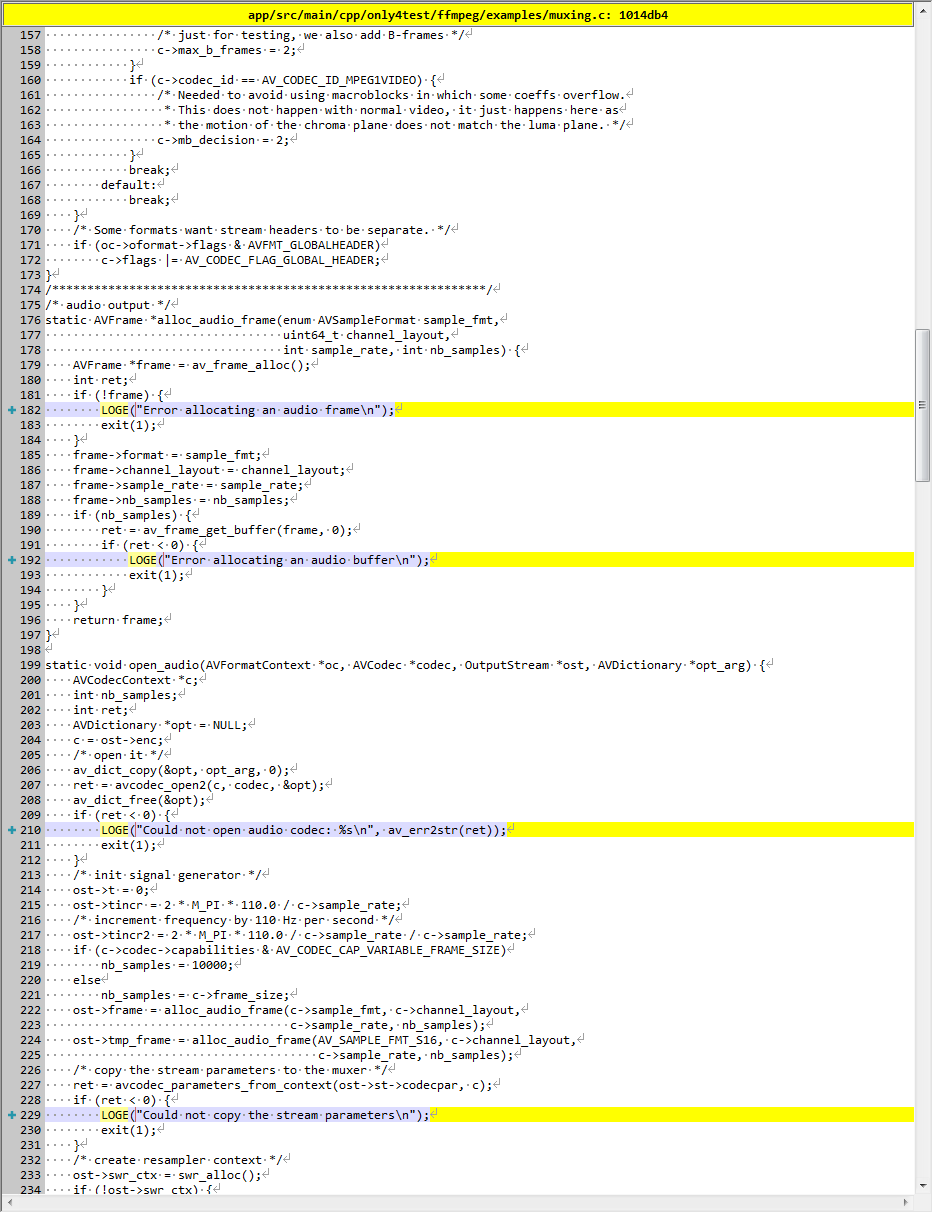

Source code changes

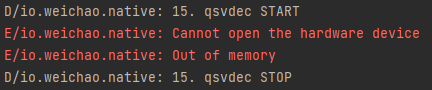

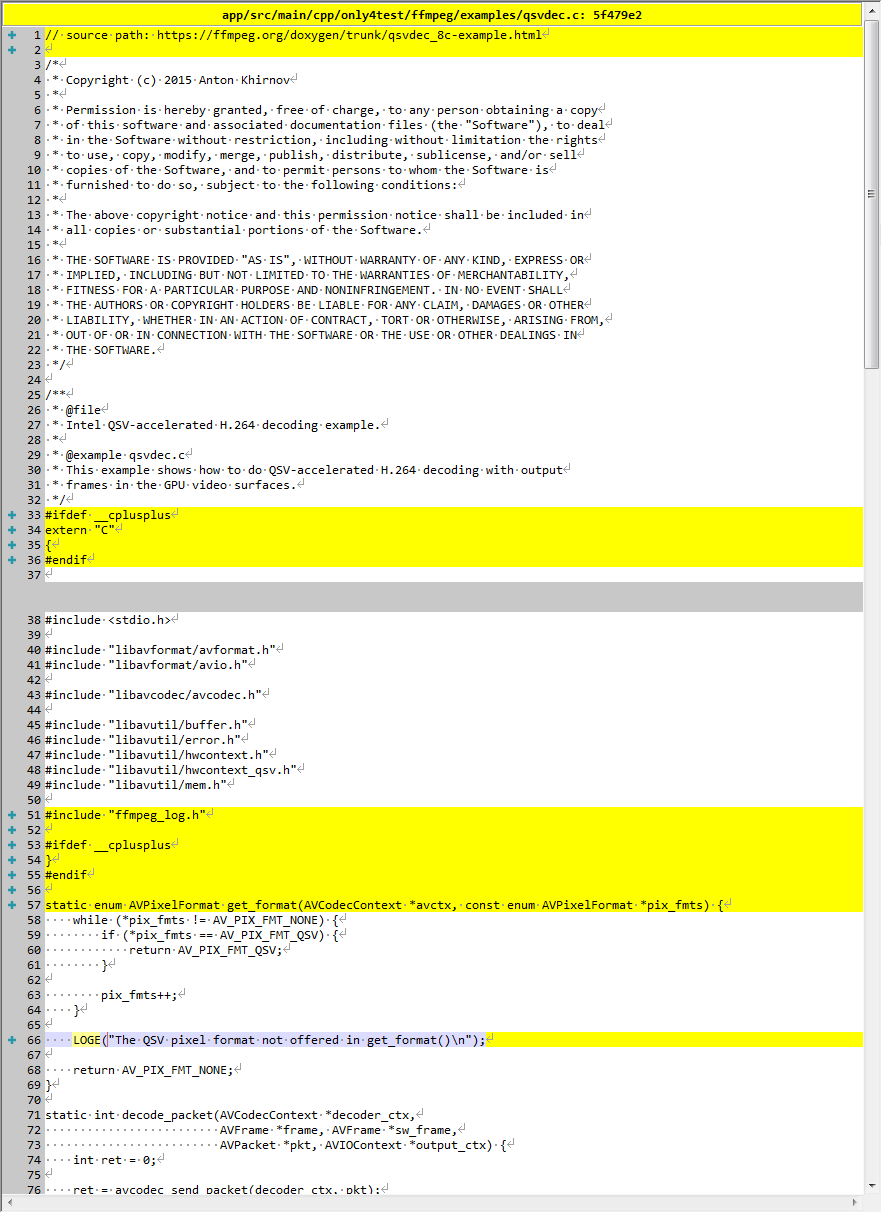

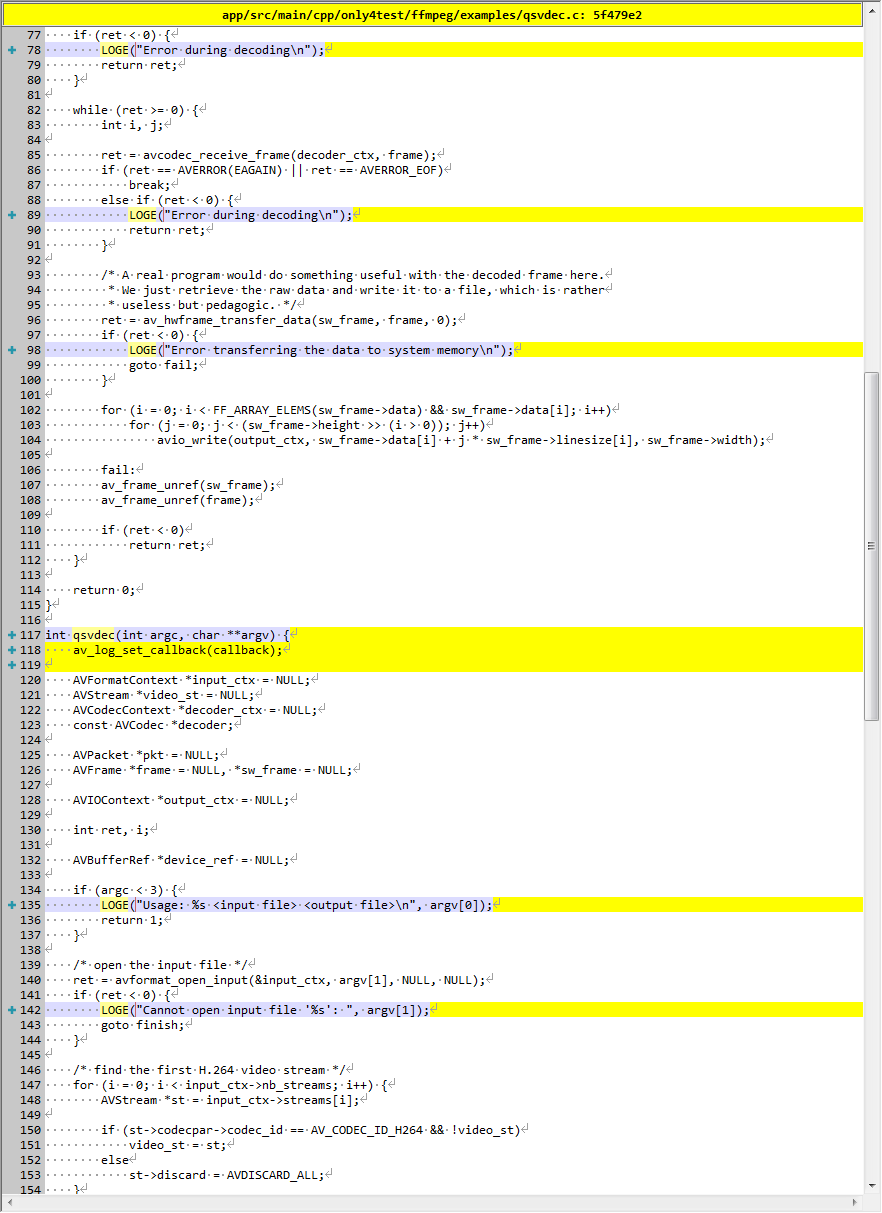

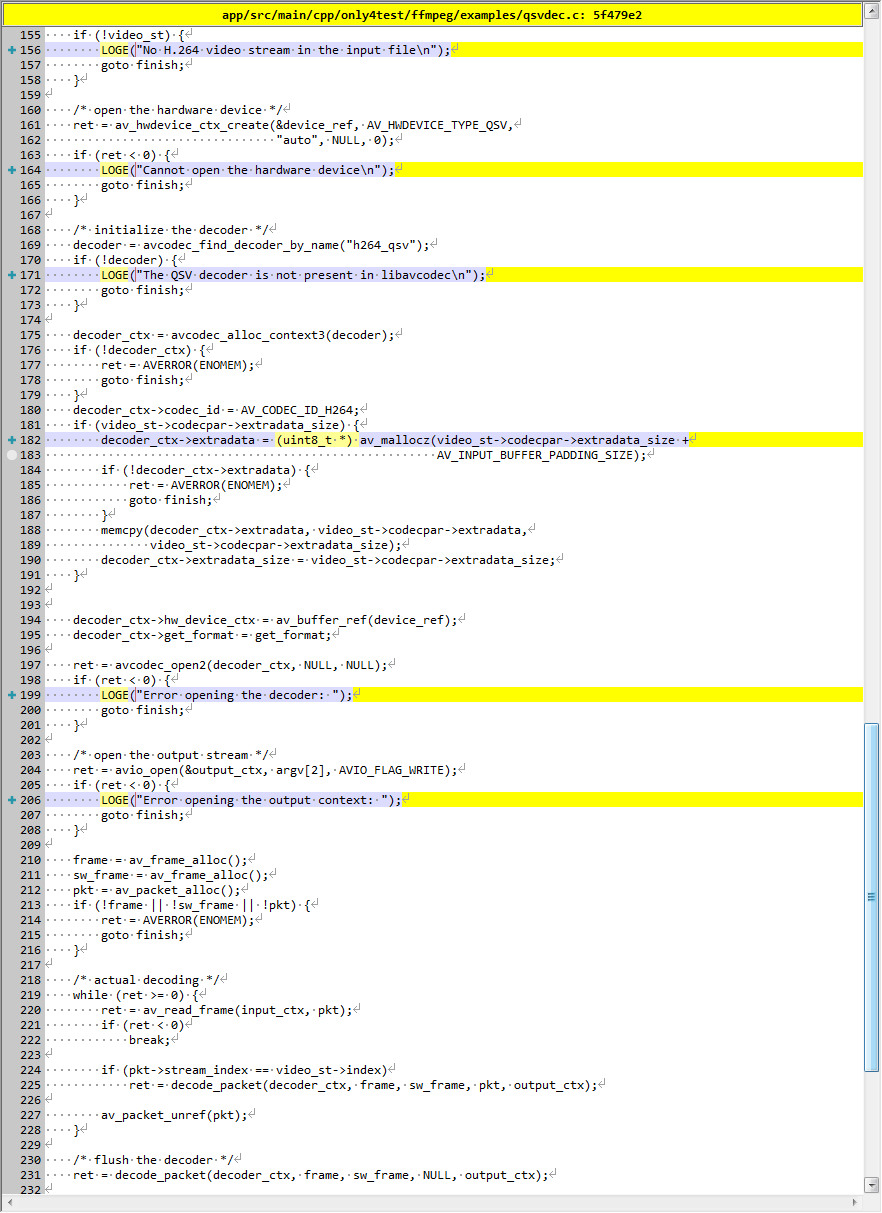

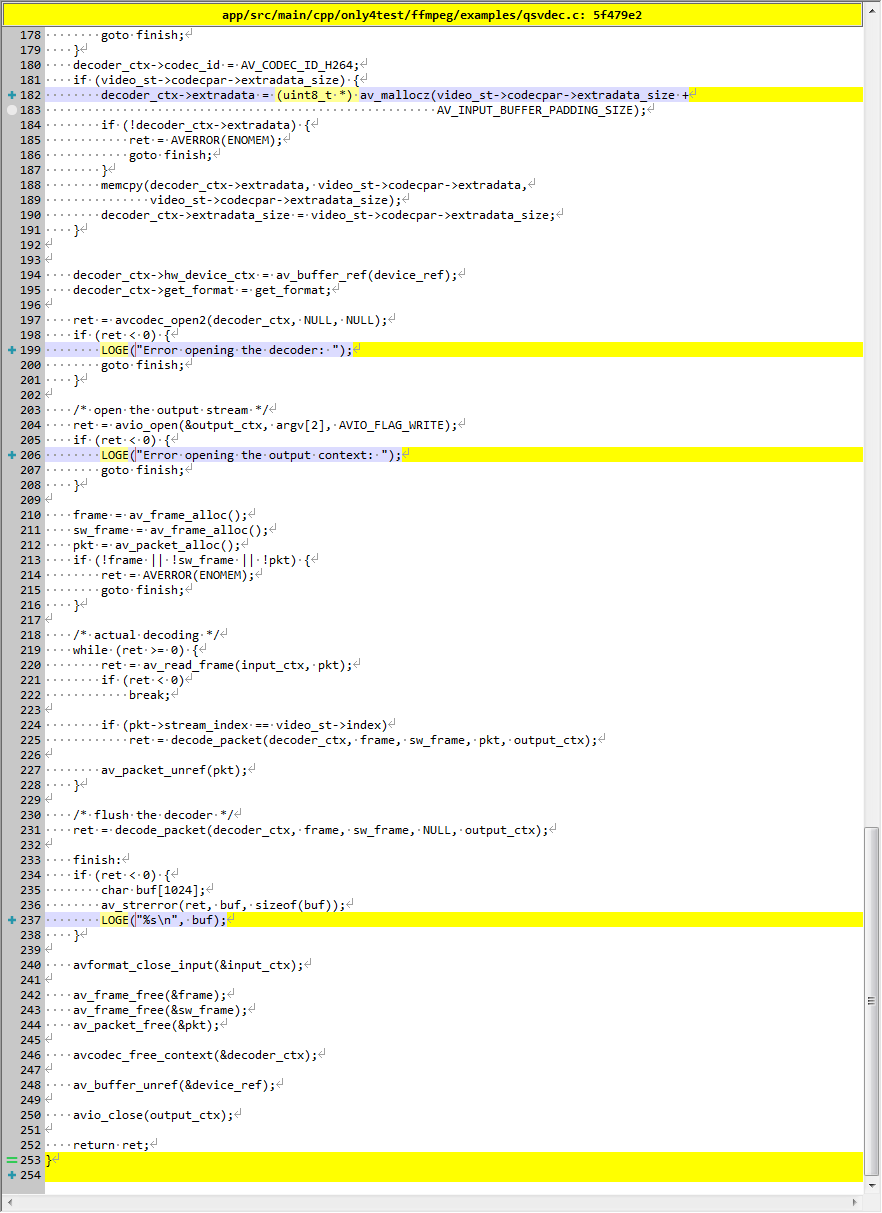

qsvdec TODO

Description

This example shows how to do QSV-accelerated H.264 decoding with output frames in the GPU video surfaces.

Demo

QSV = Intel® Quick Sync Video

TODO: not yet tested successfully. The build reports that "libavutil/hwcontext_qsv.h" is missing, because QSV was not enabled when the .so was built.

Source code changes

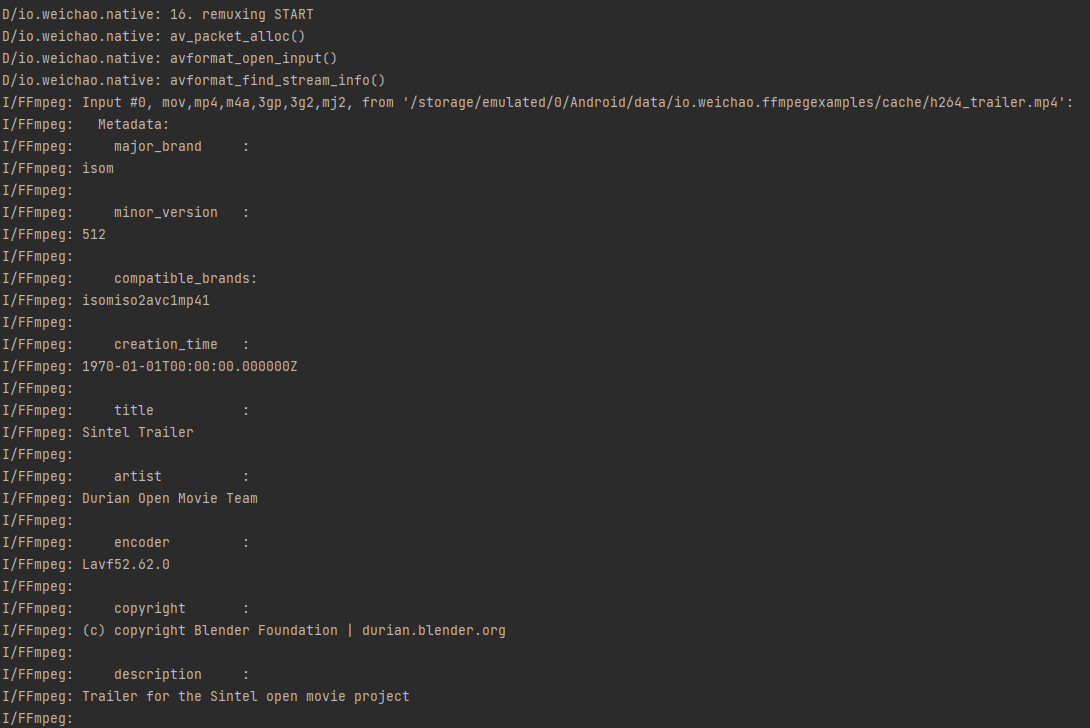

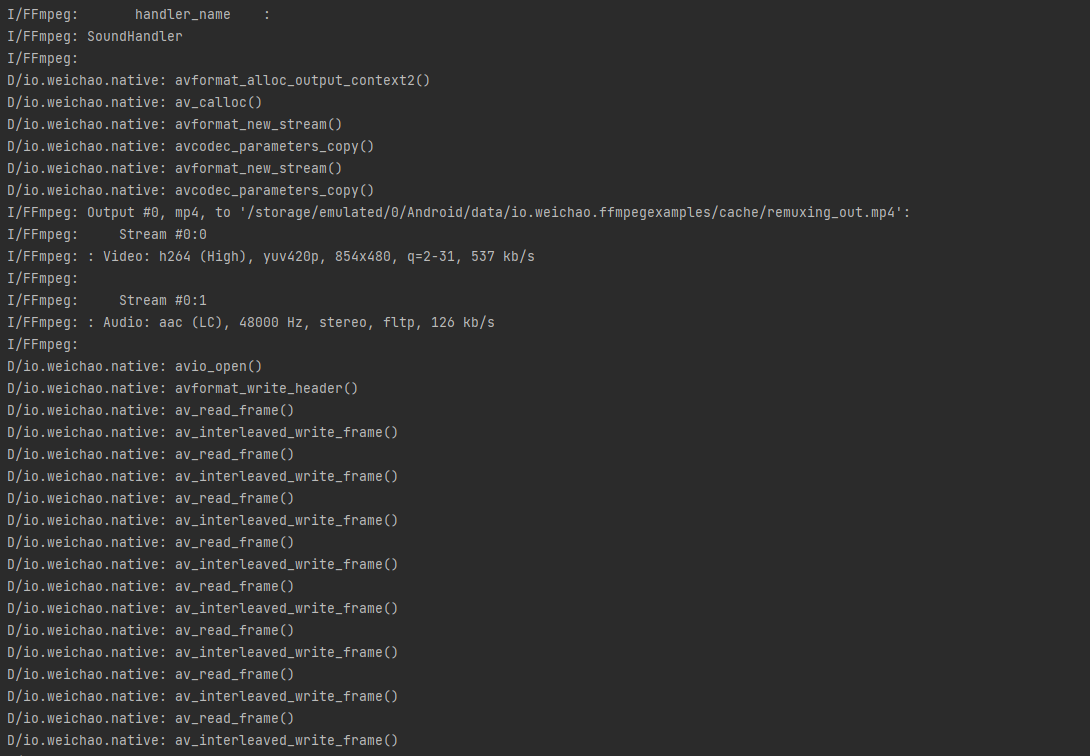

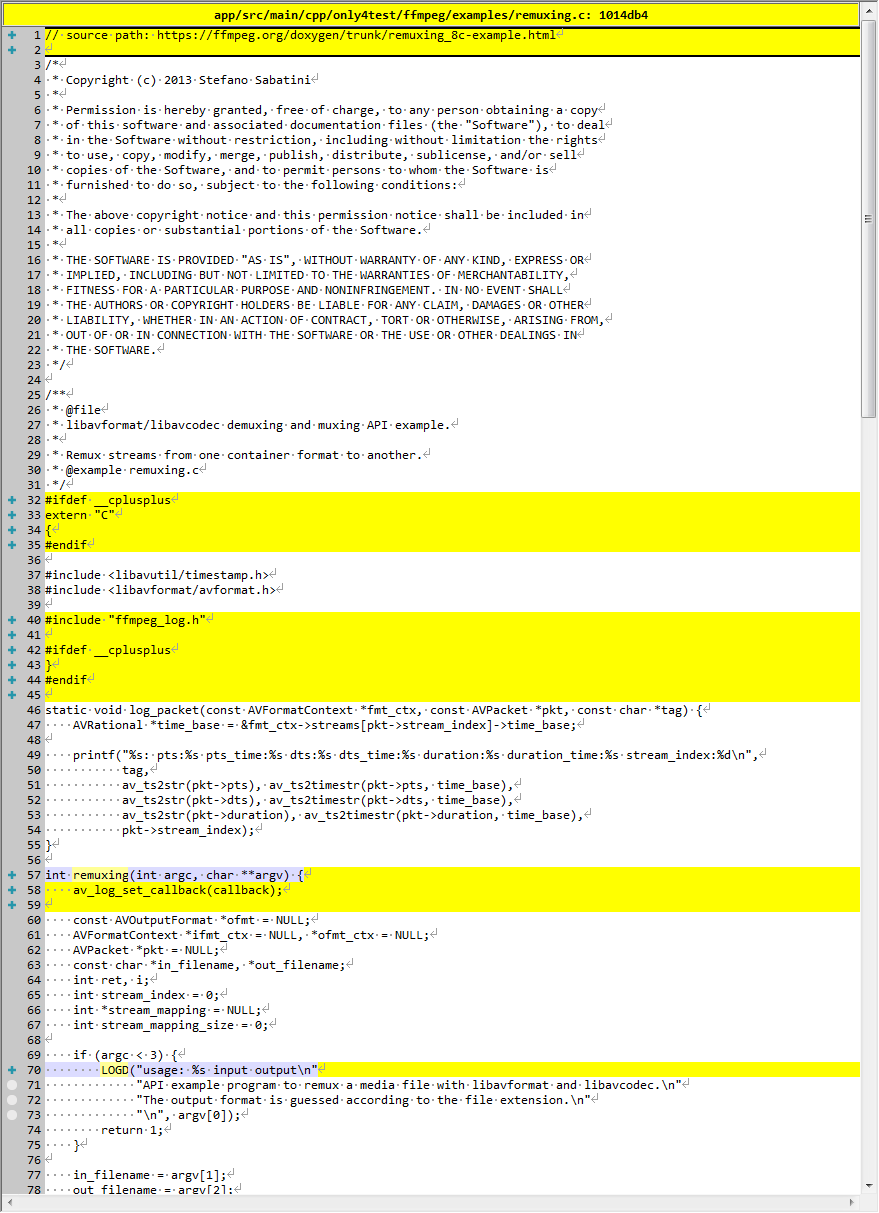

remuxing

Description

libavformat/libavcodec demuxing and muxing API example.

Remux streams from one container format to another.

API example program to remux a media file with libavformat and libavcodec.

The output format is guessed according to the file extension.

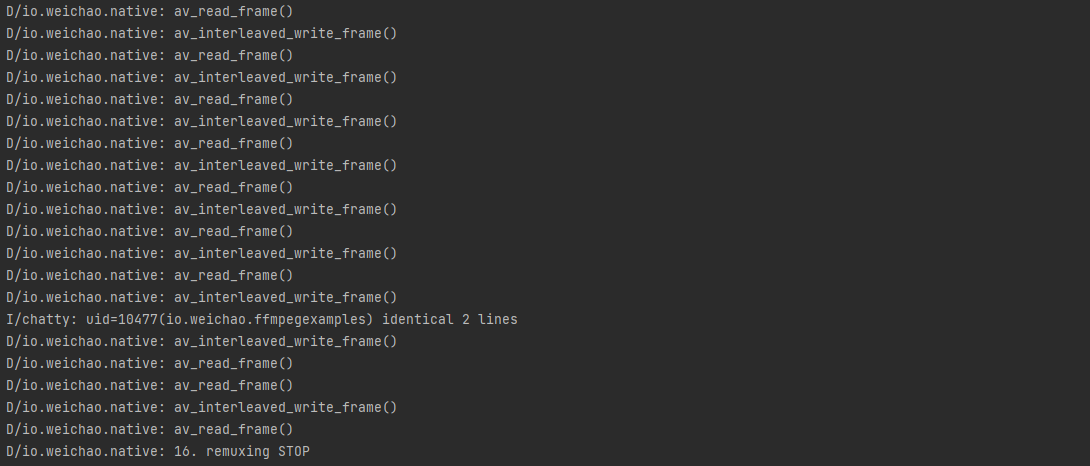

Demo

(intermediate log output omitted)

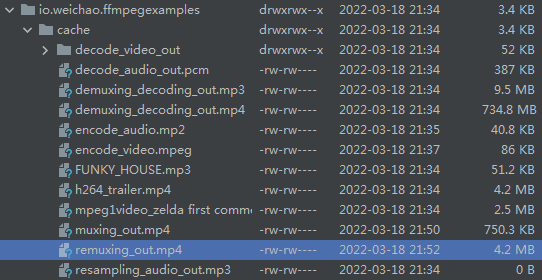

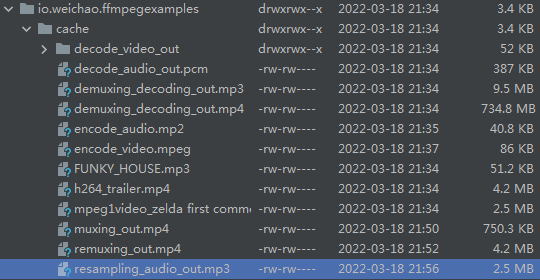

The output file is generated at the specified location:

Source code changes

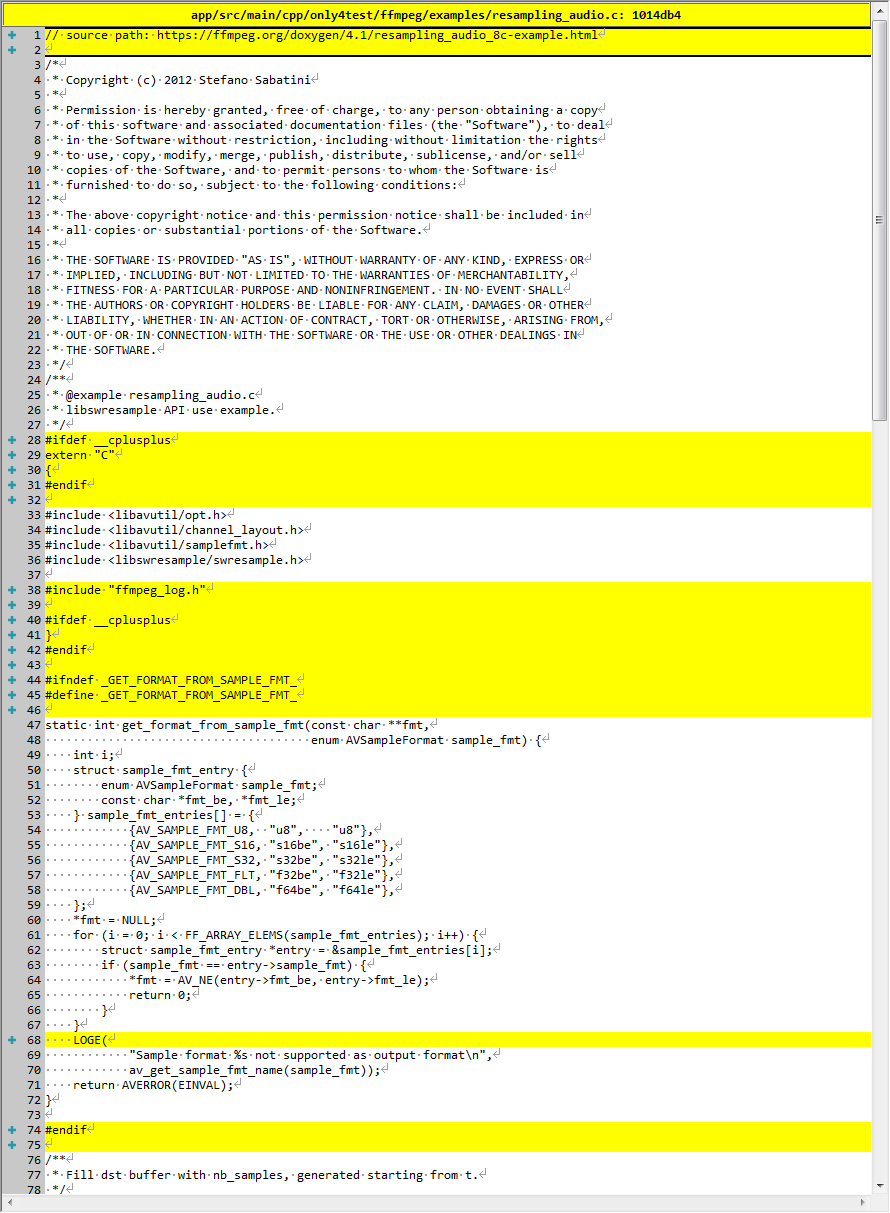

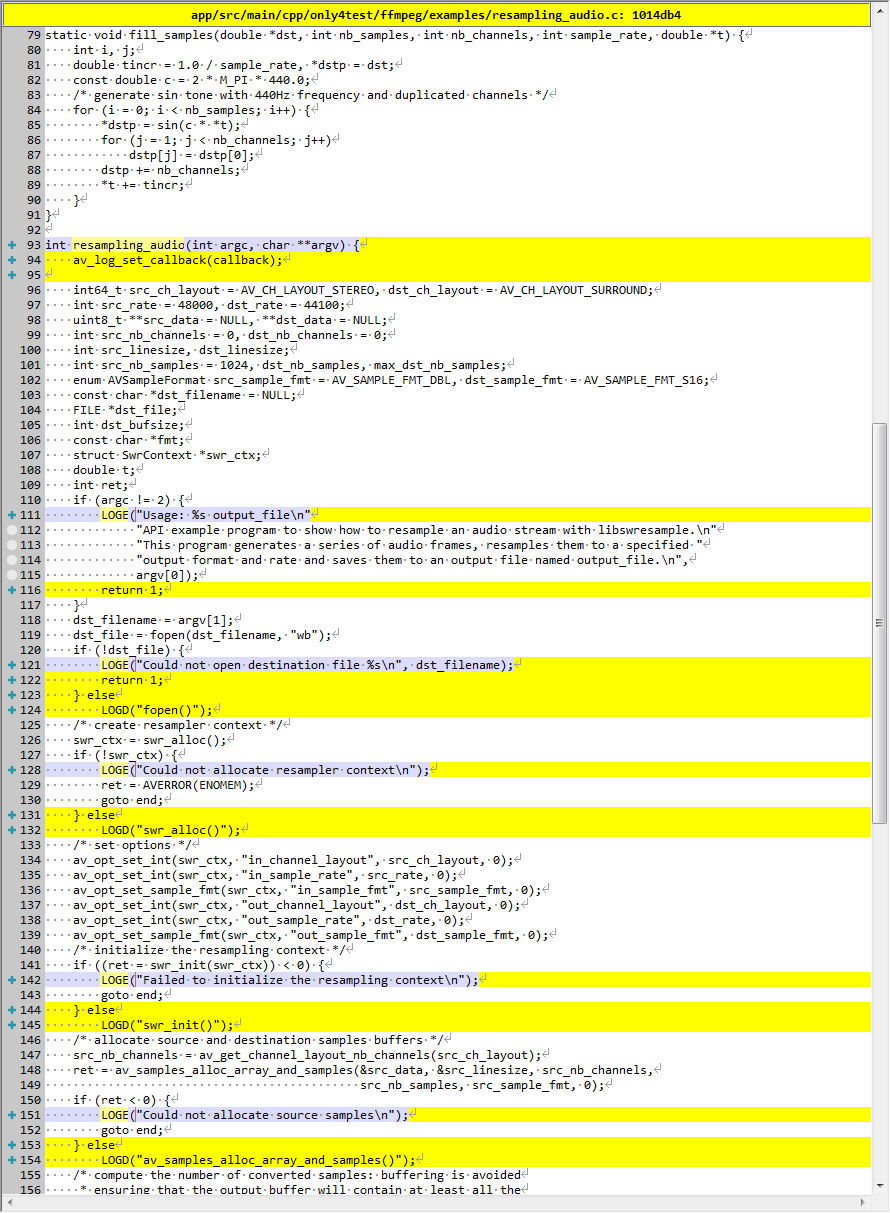

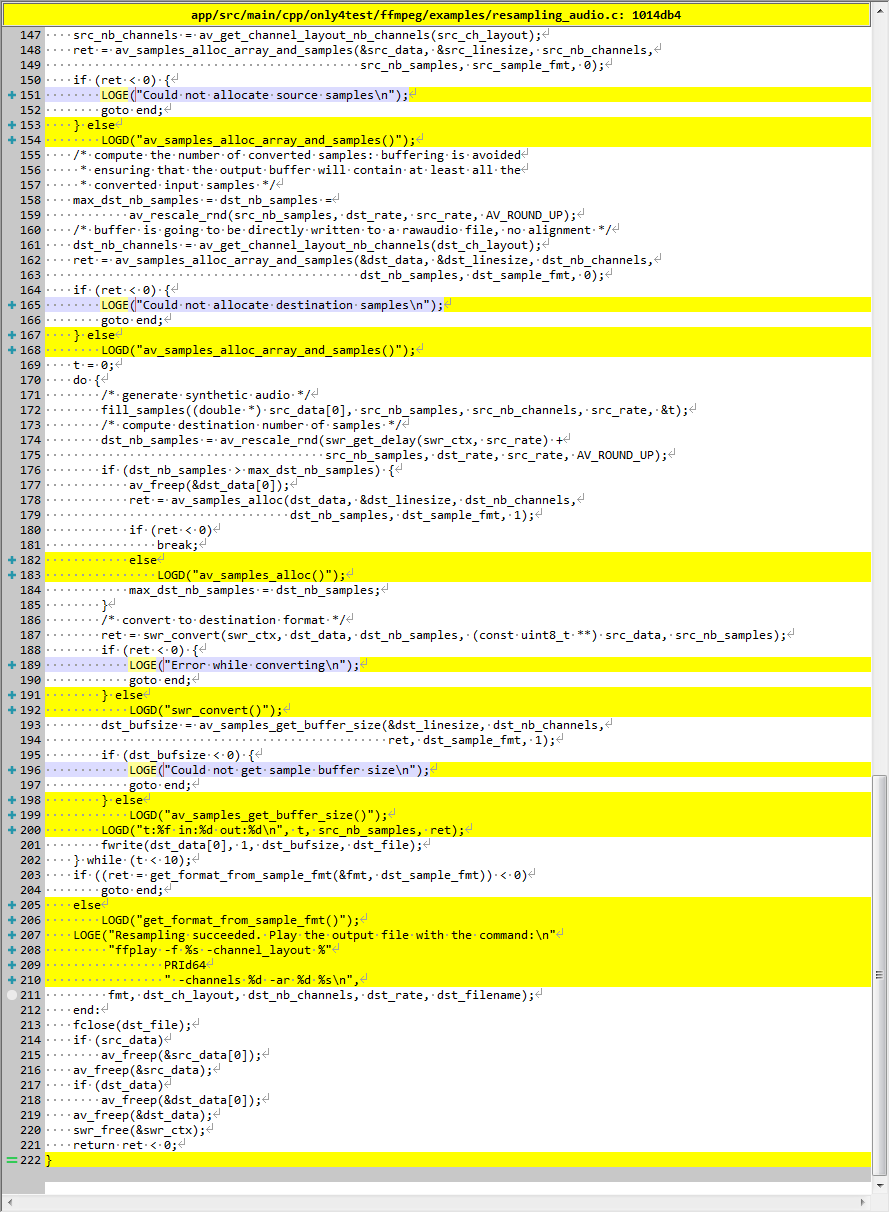

resampling_audio

Description

libswresample API use example.

API example program to show how to resample an audio stream with libswresample.

This program generates a series of audio frames, resamples them to a specified output format and rate and saves them to an output file named output_file.
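Changing the rate changes how many samples a frame holds, so the output buffer has to be sized accordingly. The sketch below computes the rounded-up output count with integer arithmetic. `resampled_count` is a hypothetical helper; it ignores the resampler's internal delay, which real code would also have to account for.

```c
#include <stdint.h>

/* Number of output samples needed to hold nb_samples input samples after
 * converting from src_rate to dst_rate, rounded up so nothing is truncated. */
static int64_t resampled_count(int64_t nb_samples, int src_rate, int dst_rate)
{
    return (nb_samples * dst_rate + src_rate - 1) / src_rate;
}
```

For instance, 1024 samples at 48000 Hz correspond to 940.8 samples at 44100 Hz, so the buffer needs room for 941.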

Demo

(intermediate log output omitted)

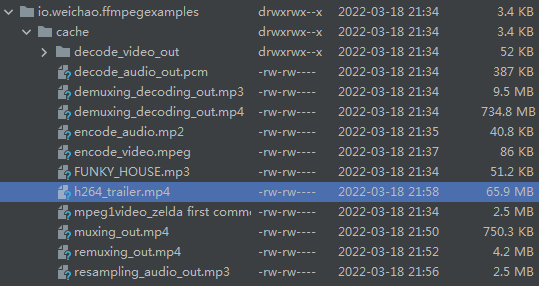

The output file is generated at the specified location:

Source code changes

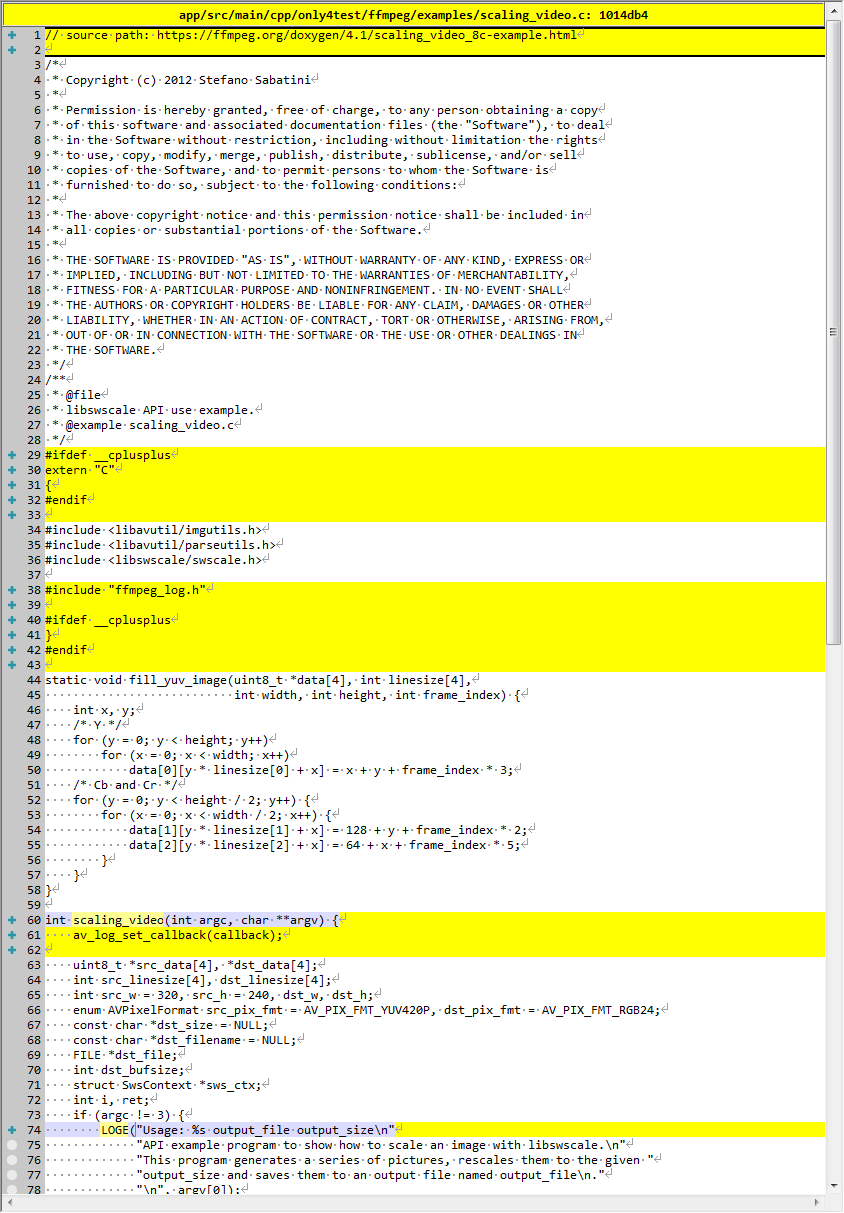

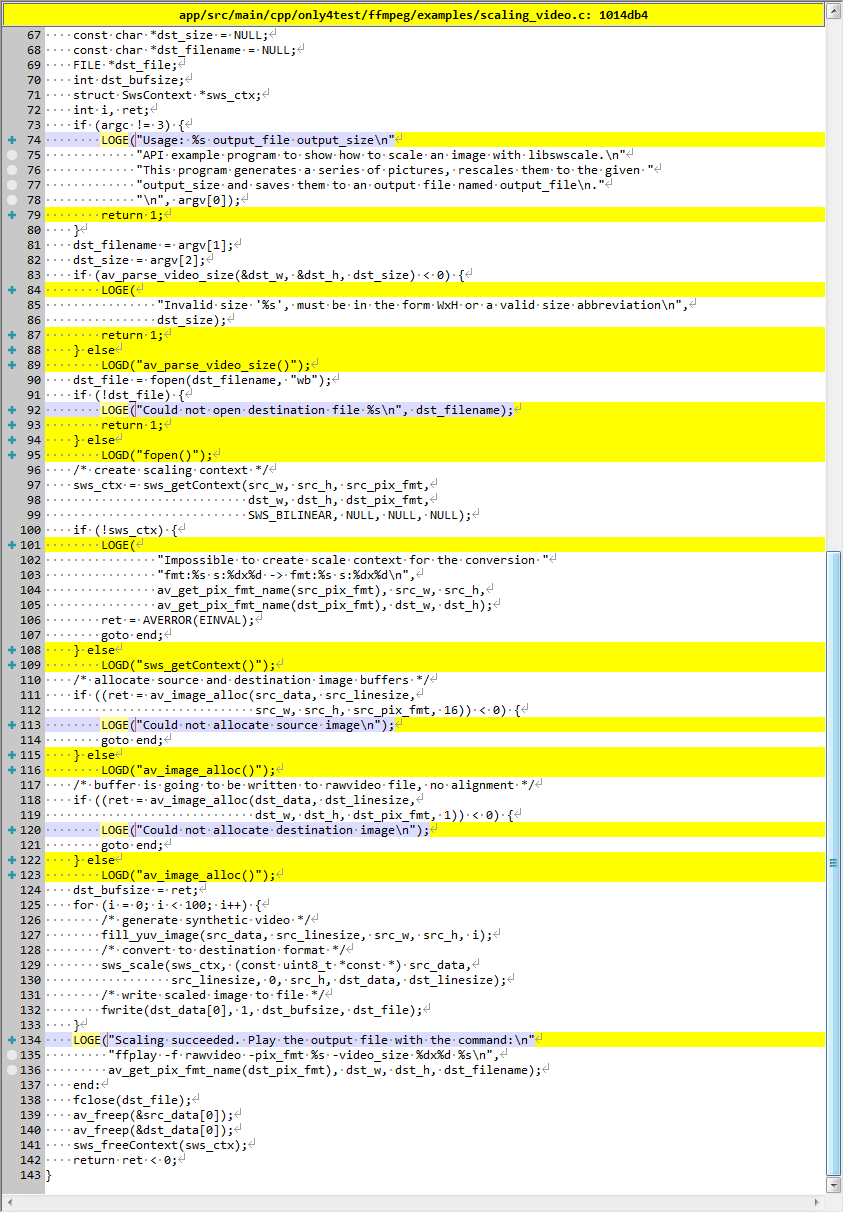

scaling_video

Description

libswscale API use example.

API example program to show how to scale an image with libswscale.

This program generates a series of pictures, rescales them to the given output_size and saves them to an output file named output_file.
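The output_size argument is a string, so it has to be turned into a width and a height before scaling. The sketch below handles only the numeric "WIDTHxHEIGHT" form. `parse_size` is a hypothetical helper; FFmpeg's own `av_parse_video_size` also understands named sizes, which this sketch does not.

```c
#include <stdio.h>

/* Parses "WIDTHxHEIGHT" (for example "320x240") into *w and *h.
 * Returns 0 on success, -1 if the string is malformed, has trailing
 * characters, or contains a dimension that is not positive. */
static int parse_size(const char *s, int *w, int *h)
{
    int width, height;
    char trailing;

    /* Exactly two conversions must succeed; a third means trailing junk. */
    if (sscanf(s, "%dx%d%c", &width, &height, &trailing) != 2)
        return -1;
    if (width <= 0 || height <= 0)
        return -1;
    *w = width;
    *h = height;
    return 0;
}
```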

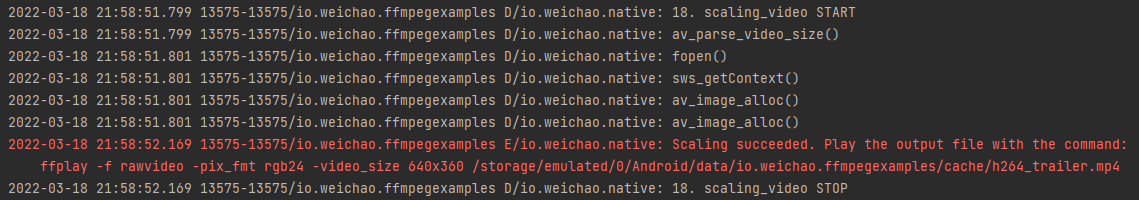

Demo

The output file is generated at the specified location:

Source code changes

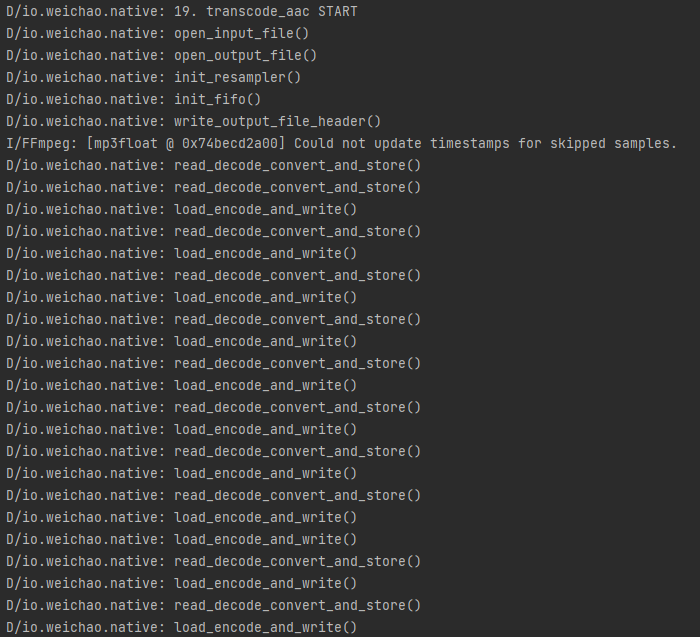

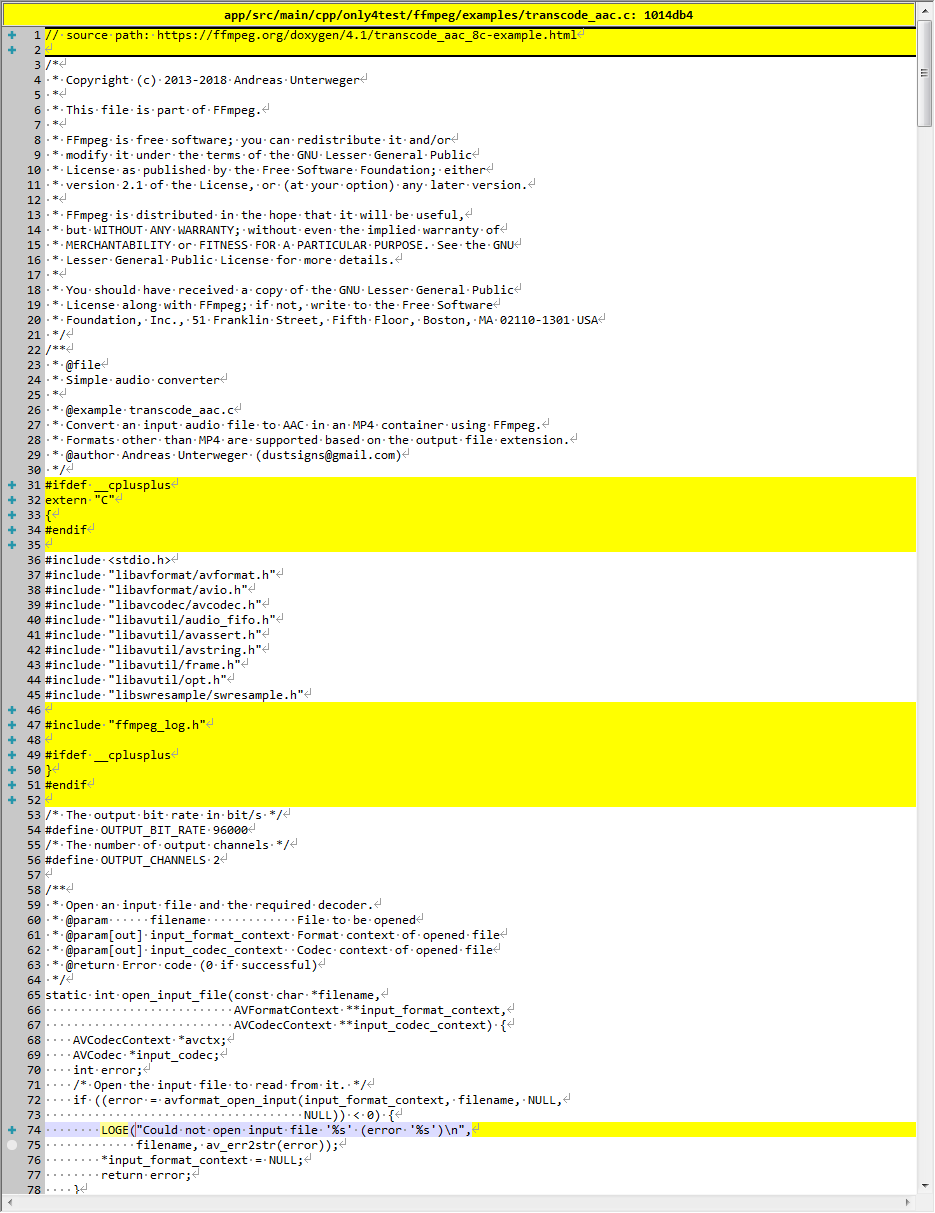

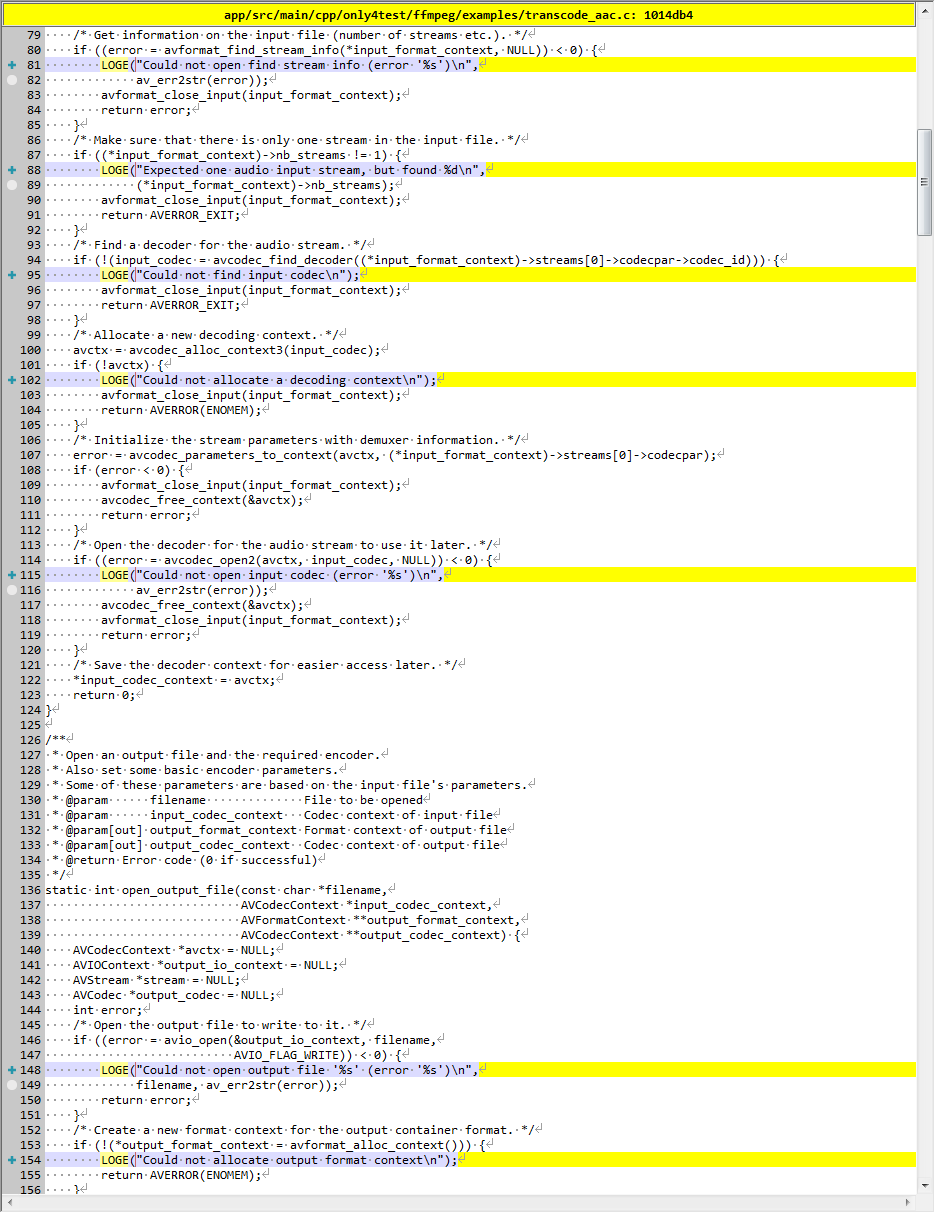

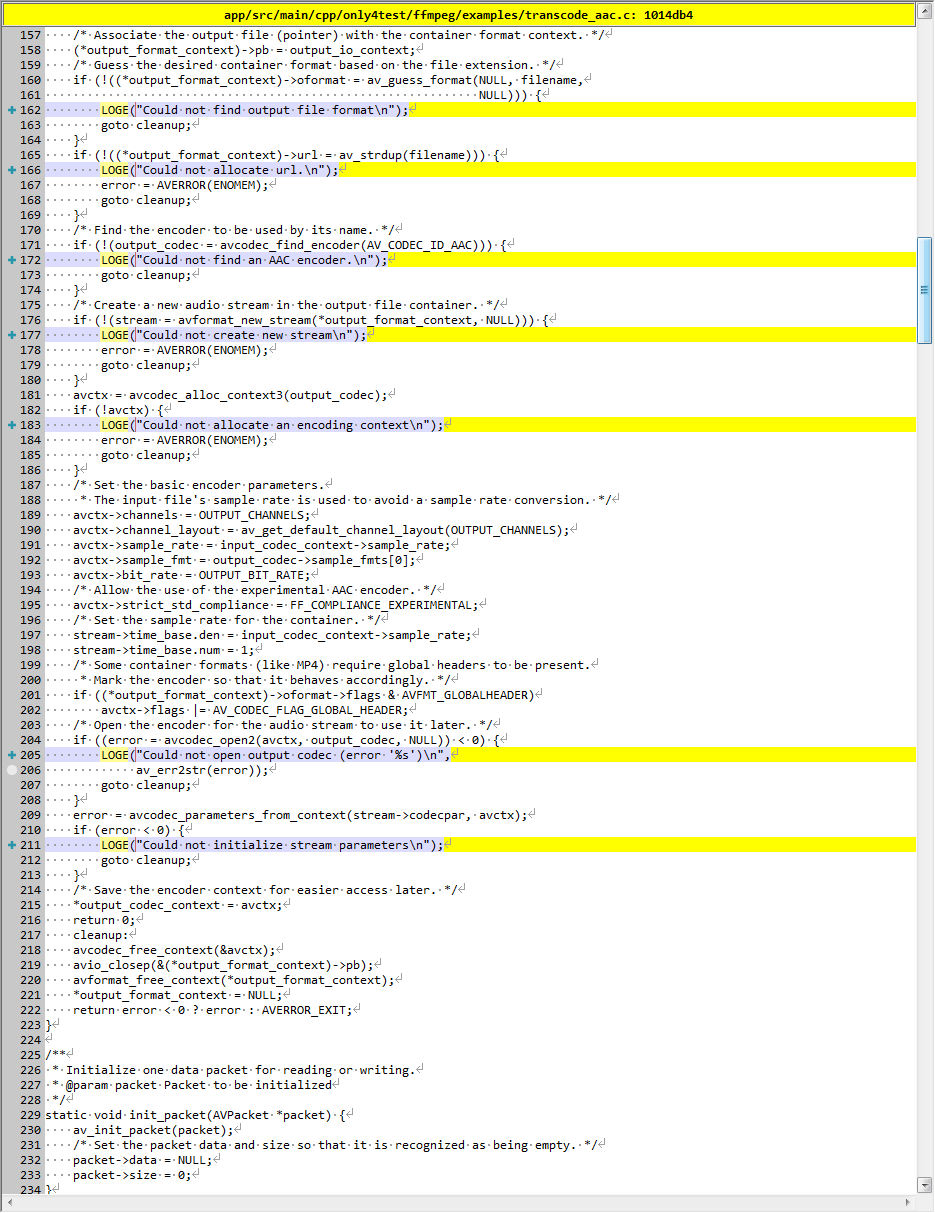

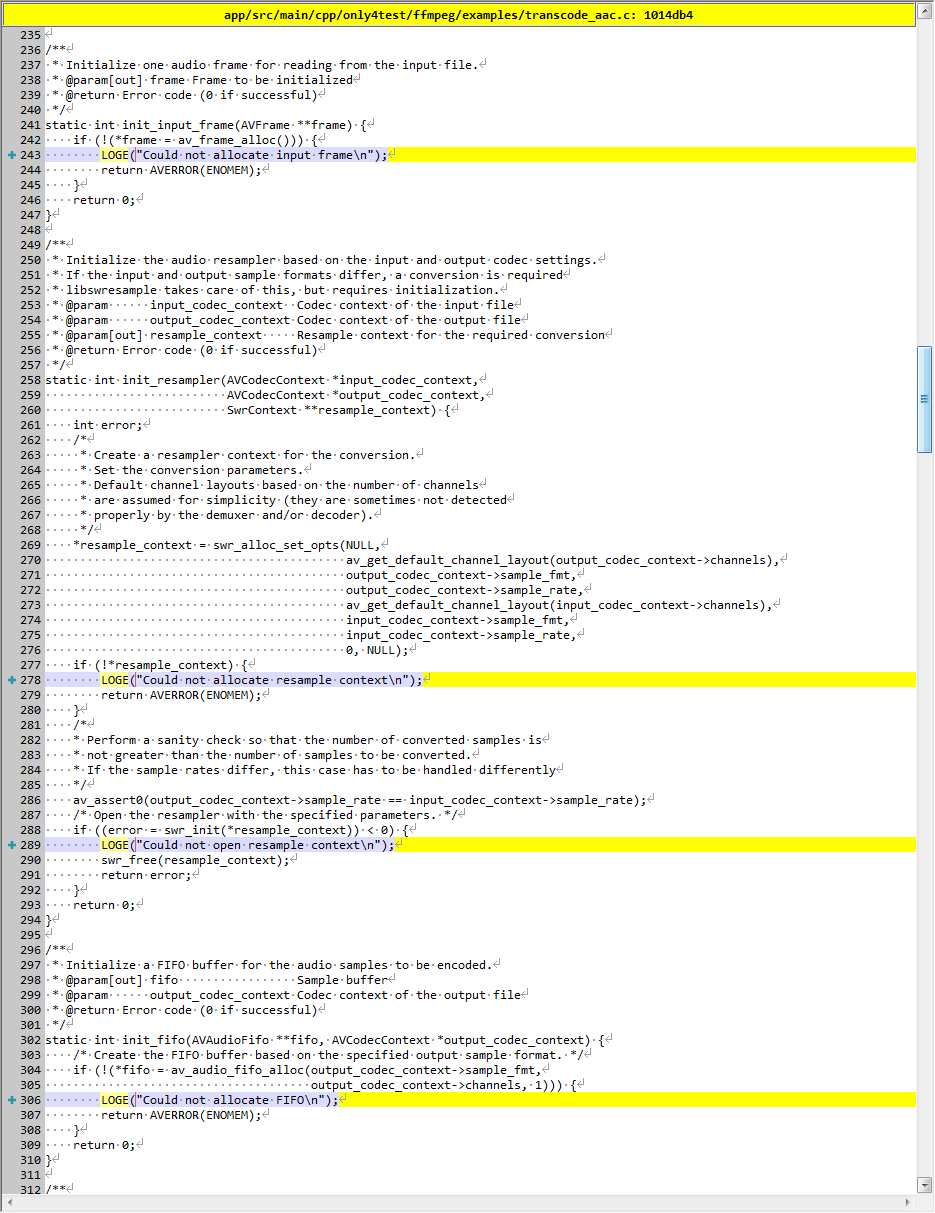

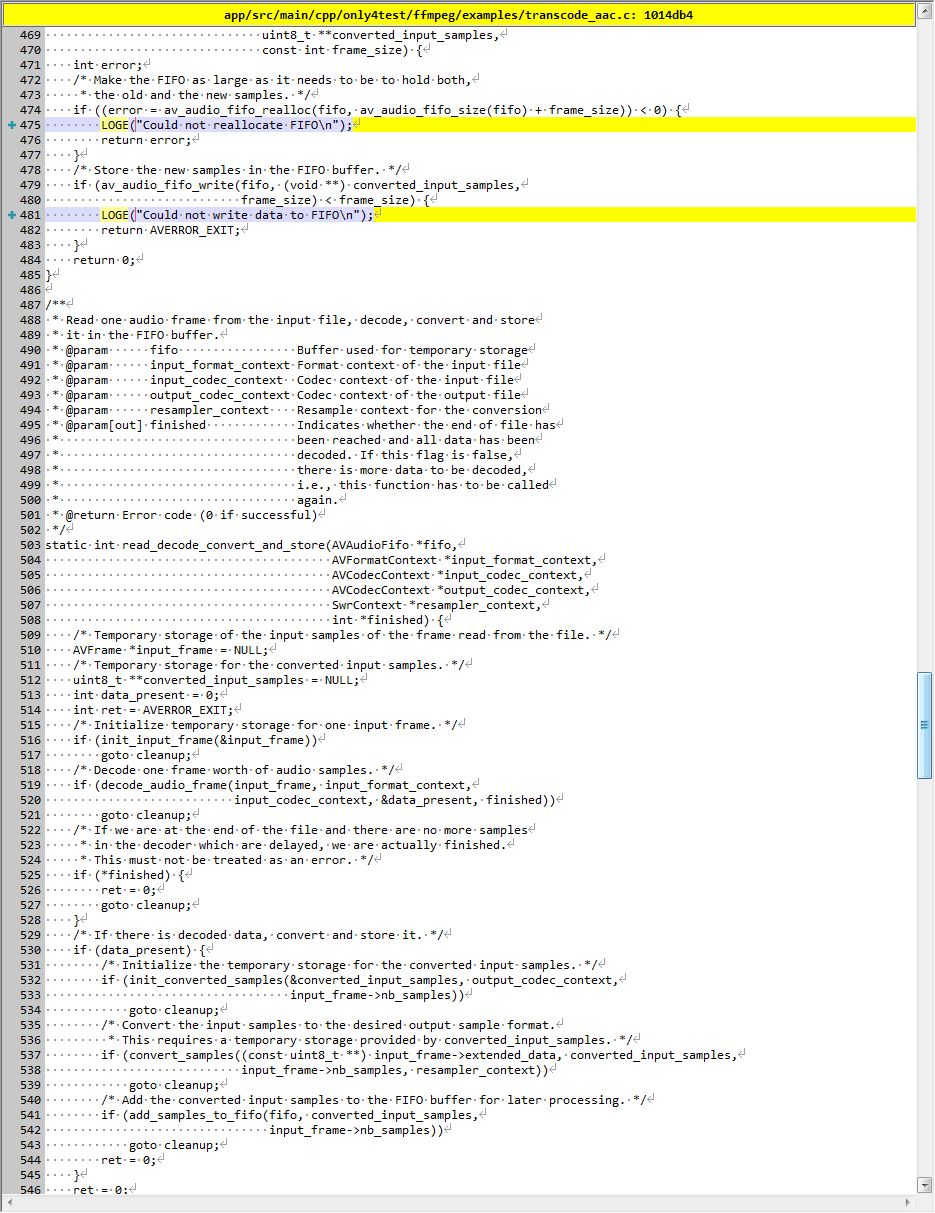

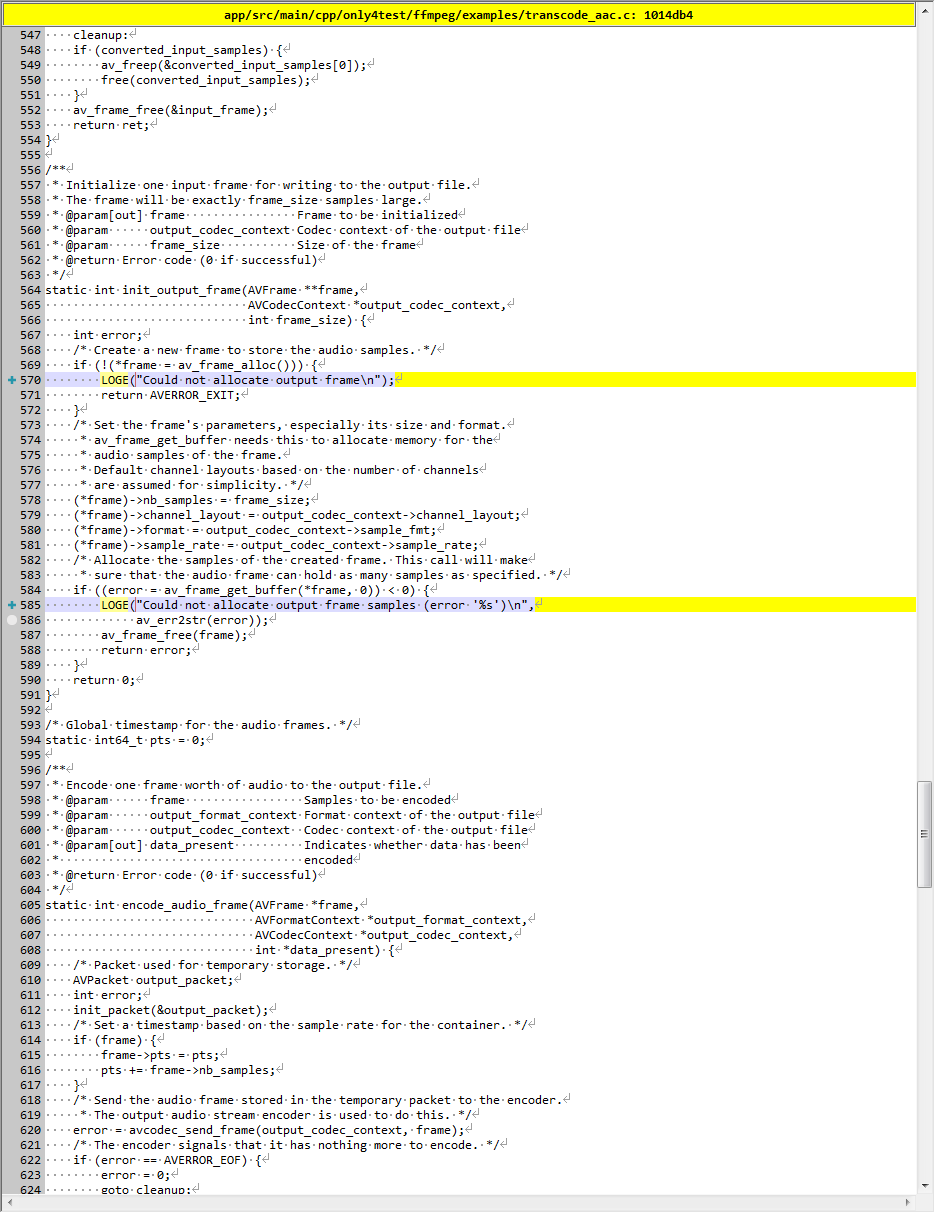

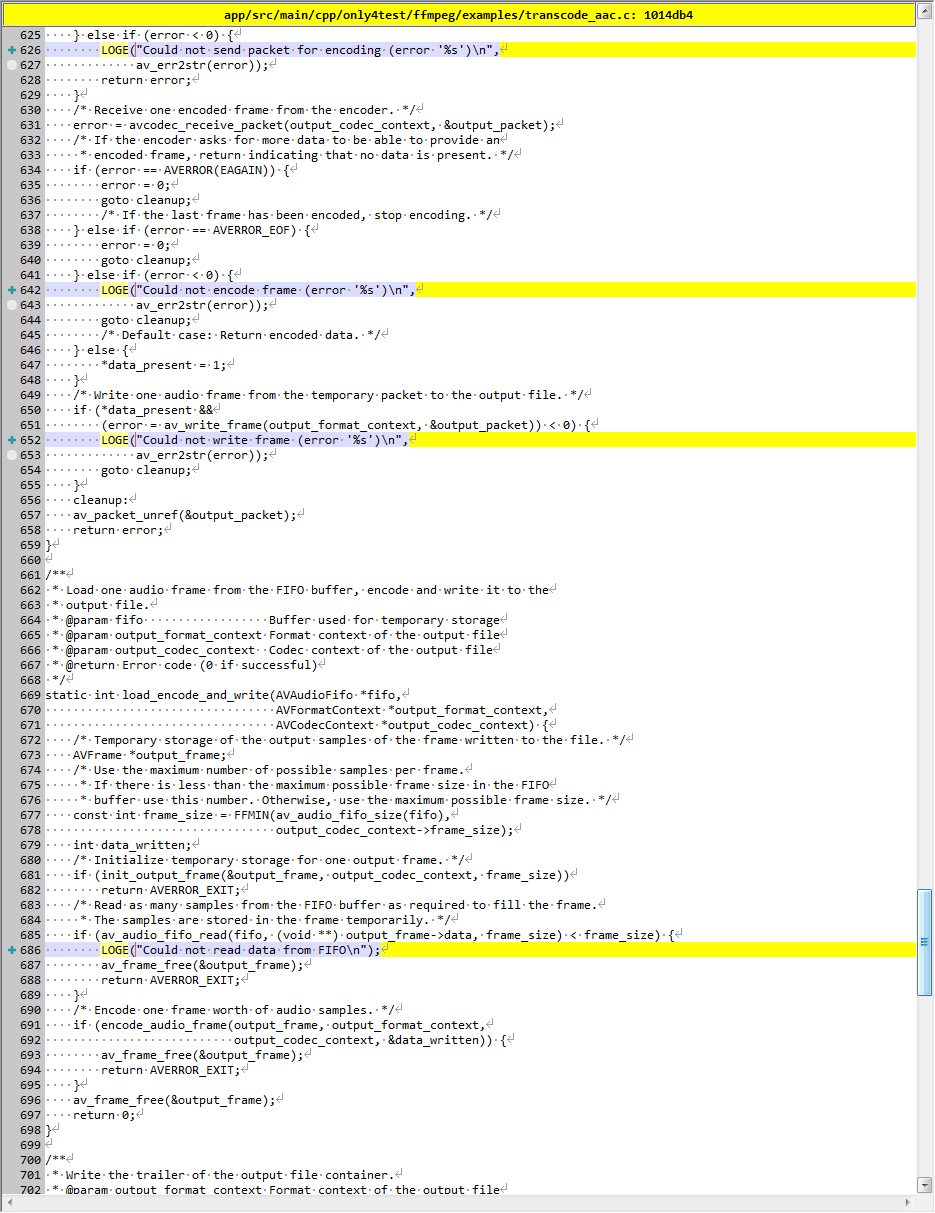

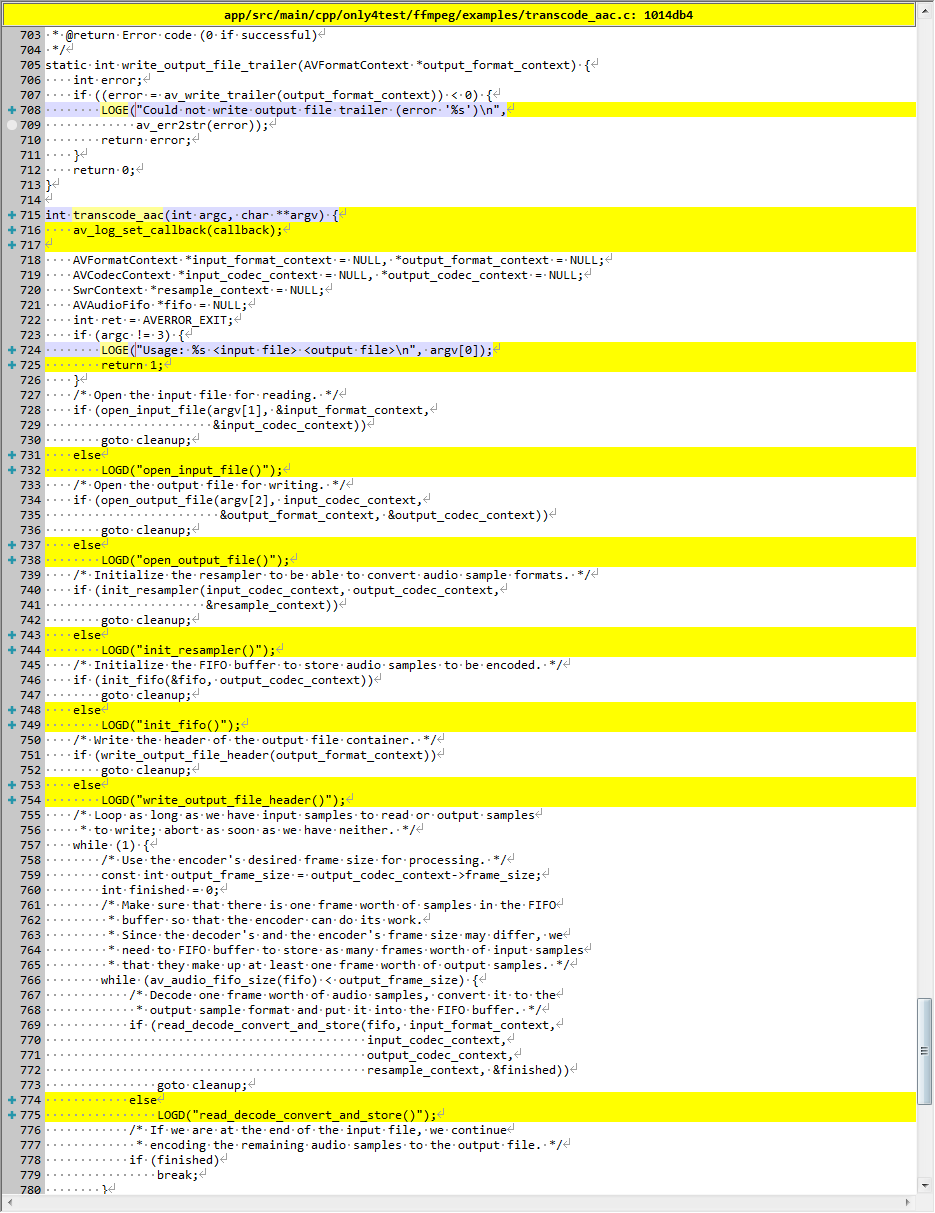

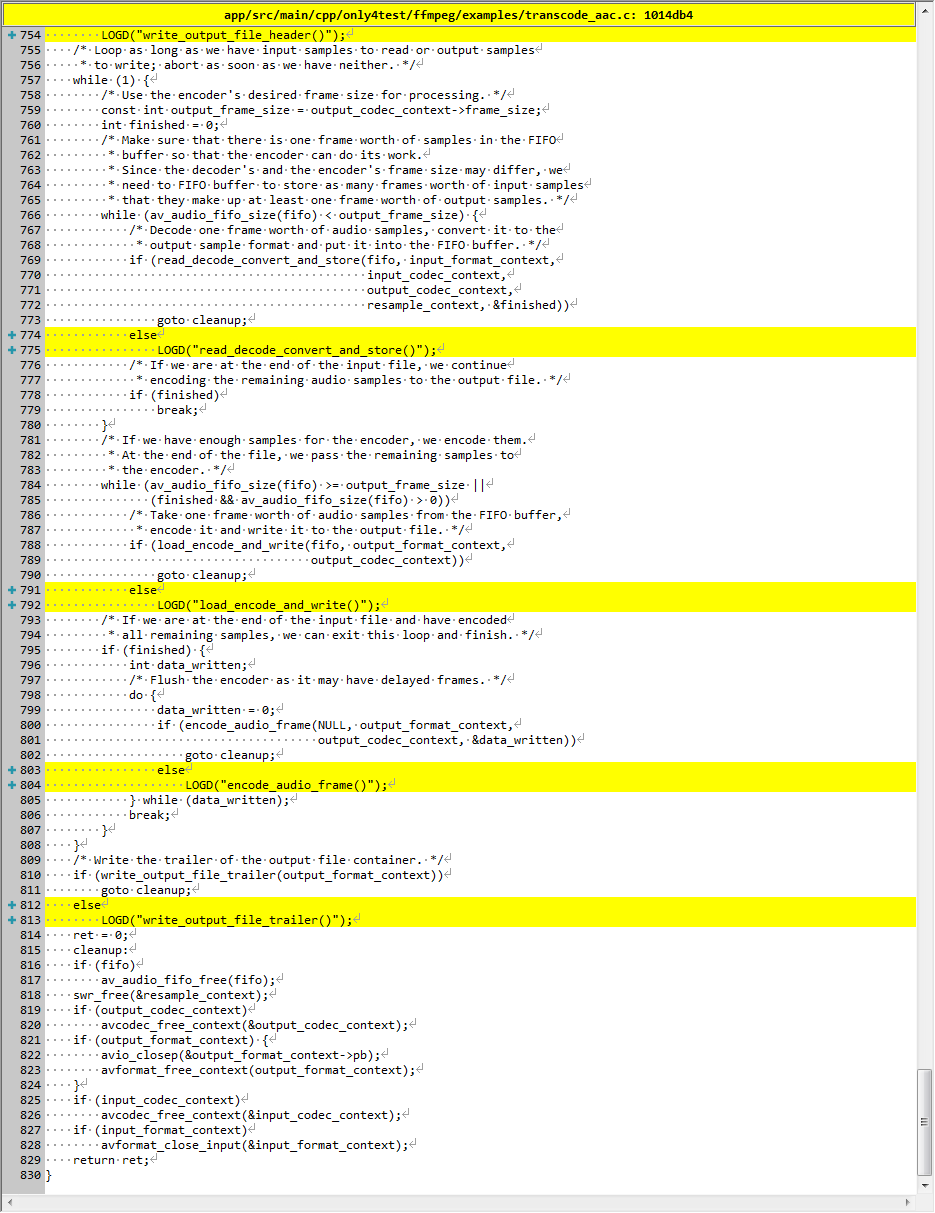

transcode_aac

Description

Simple audio converter

Convert an input audio file to AAC in an MP4 container using FFmpeg. Formats other than MP4 are supported based on the output file extension.

Demo

(intermediate log output omitted)

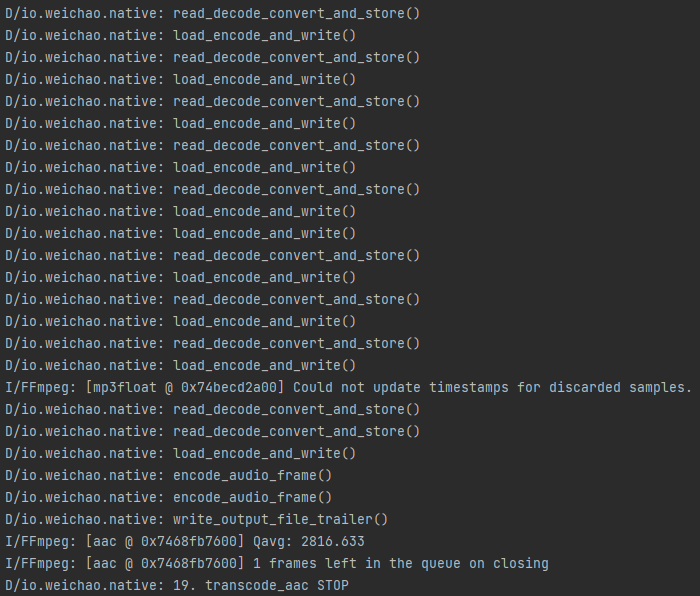

The output file is generated at the specified location:

Source code changes

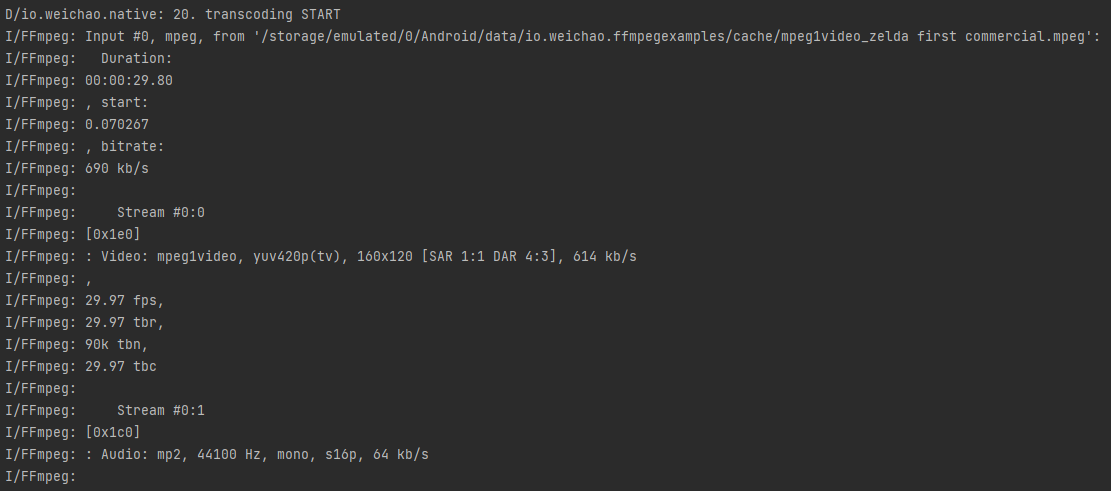

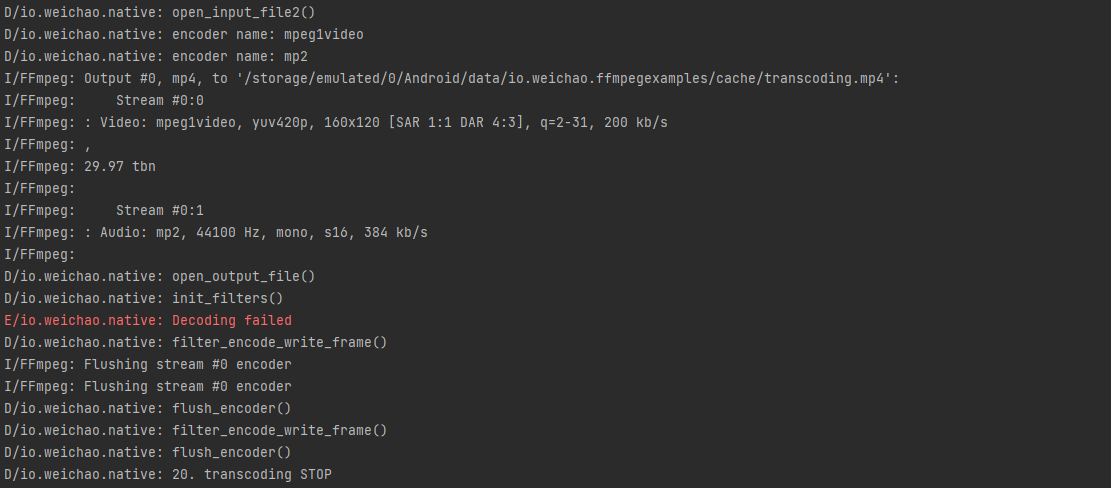

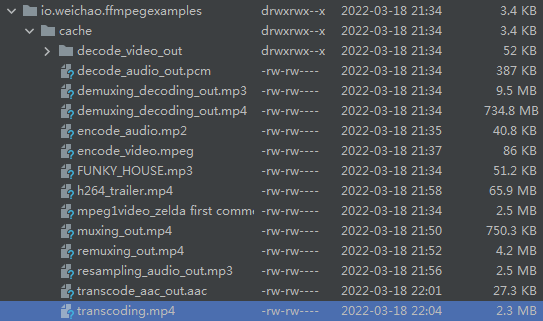

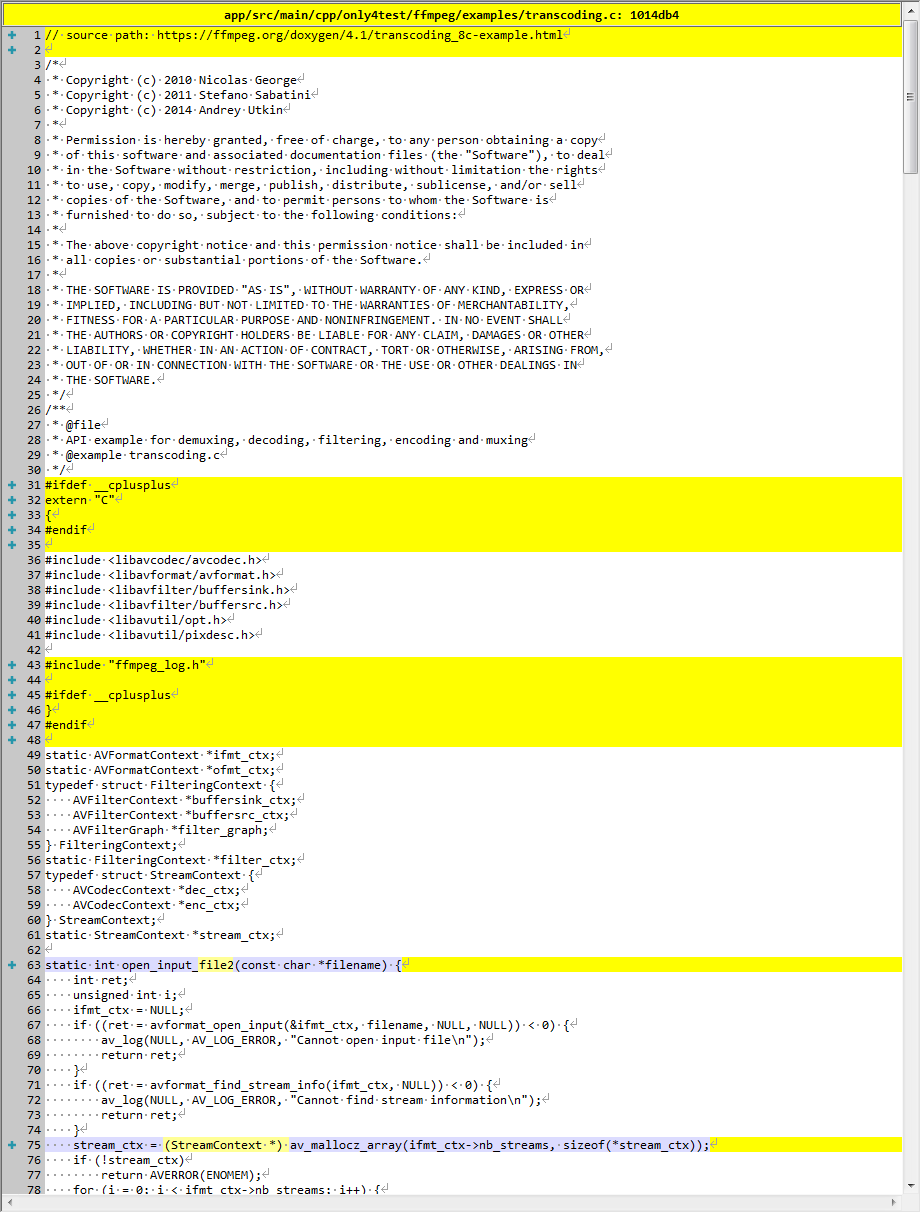

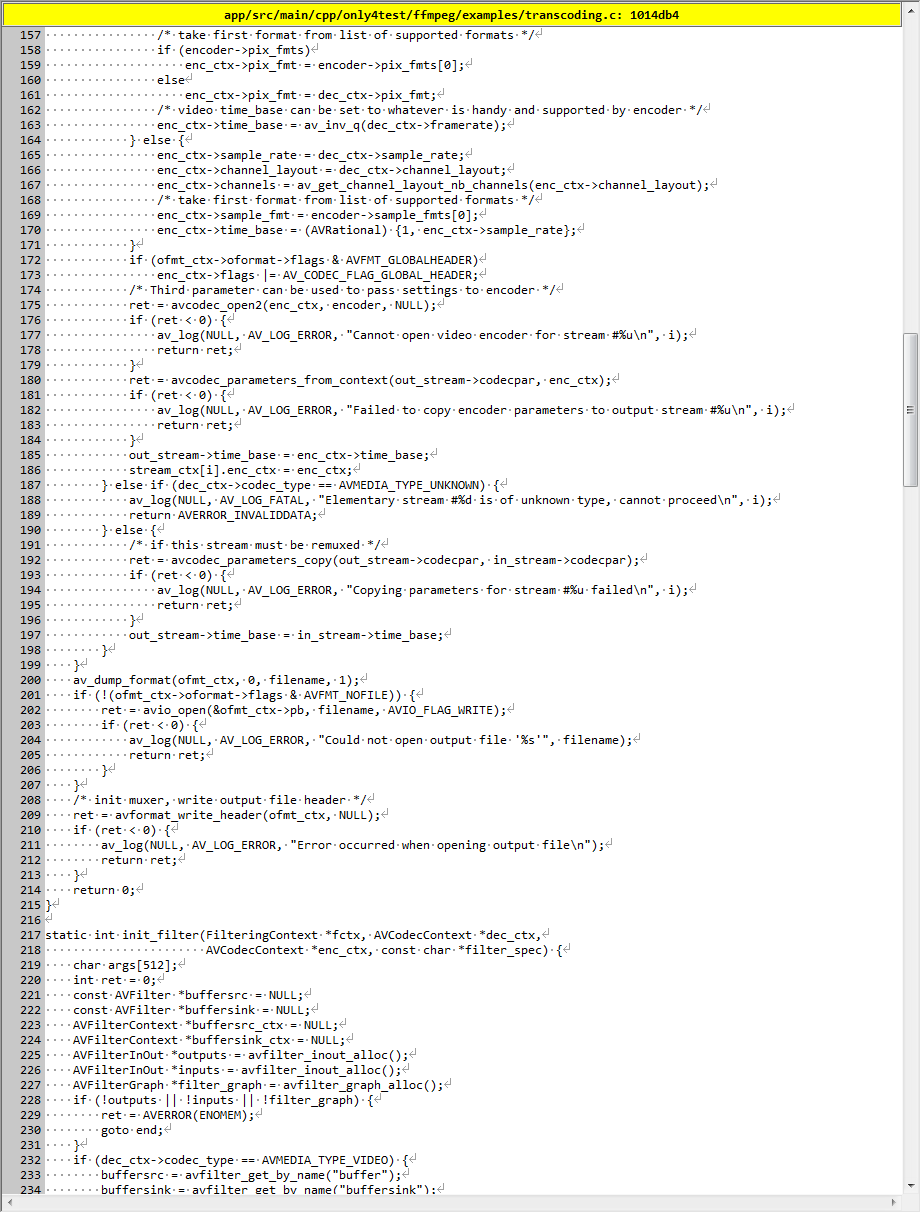

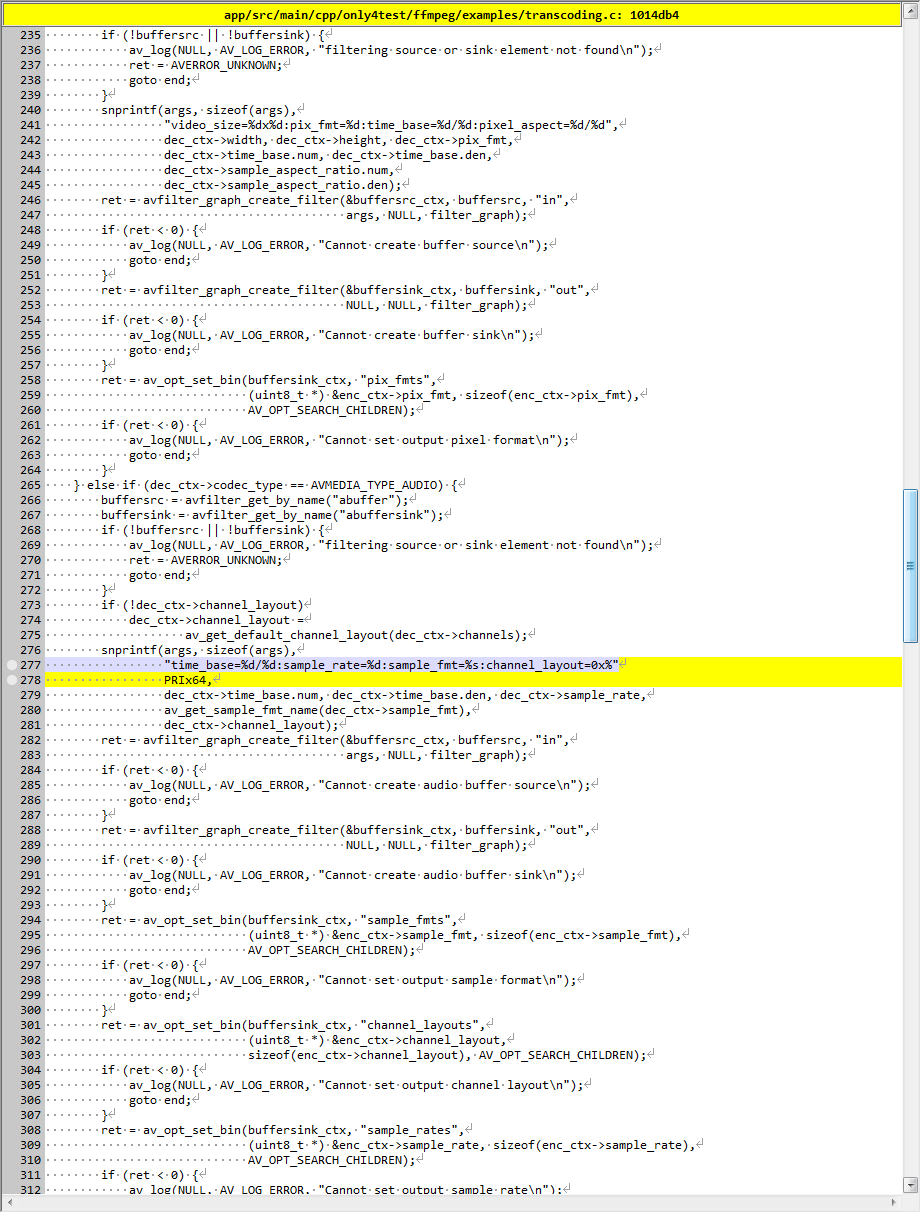

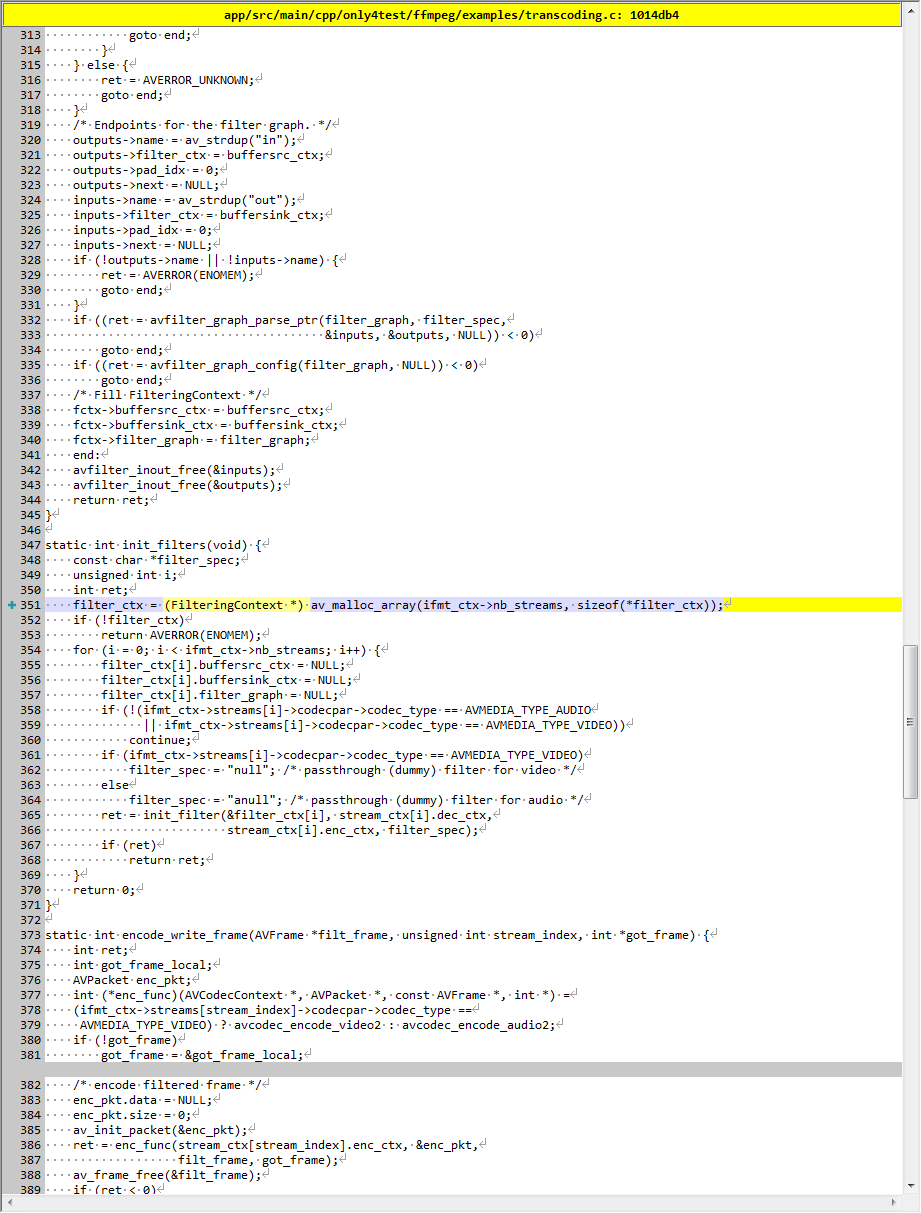

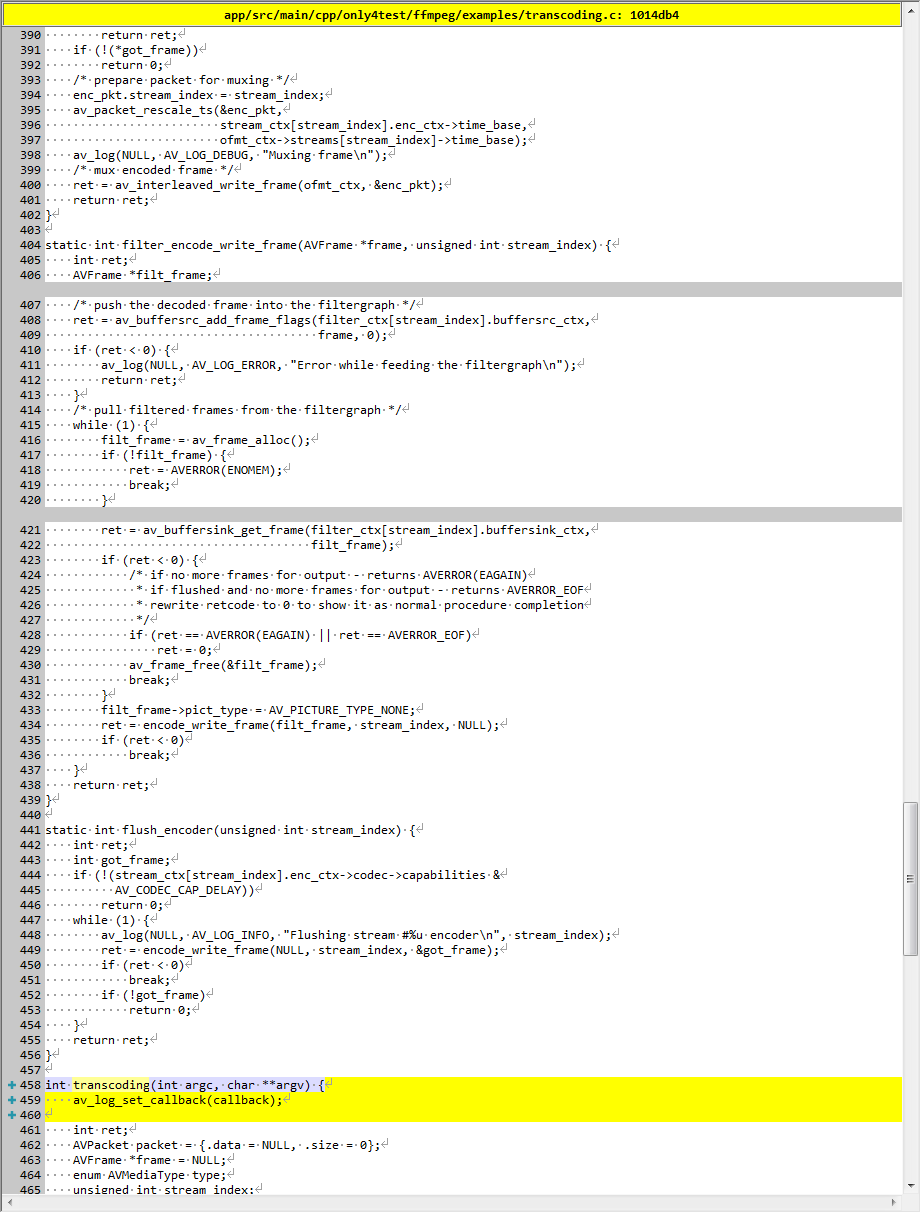

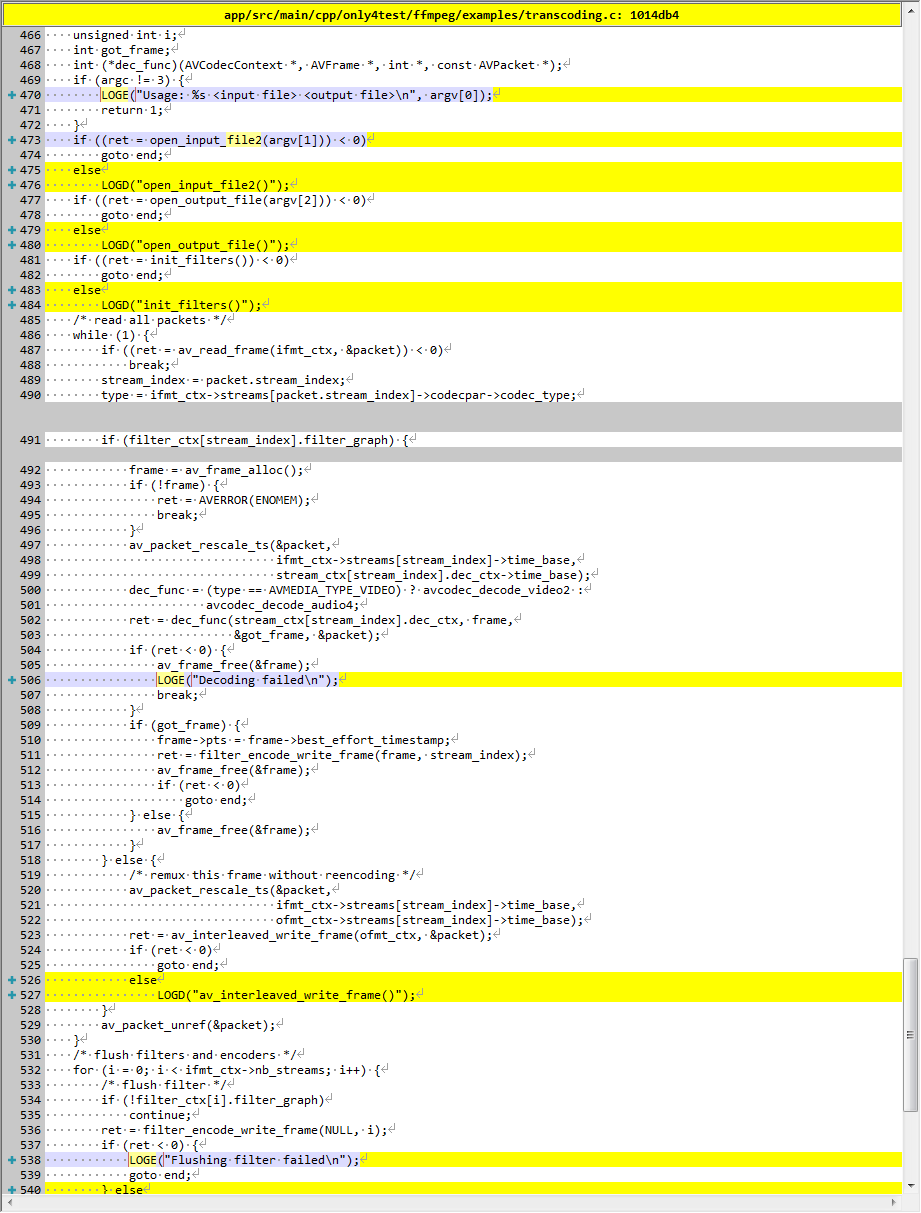

transcoding

Description

API example for demuxing, decoding, filtering, encoding and muxing.

Demo

The output file is generated at the specified location:

Source code changes

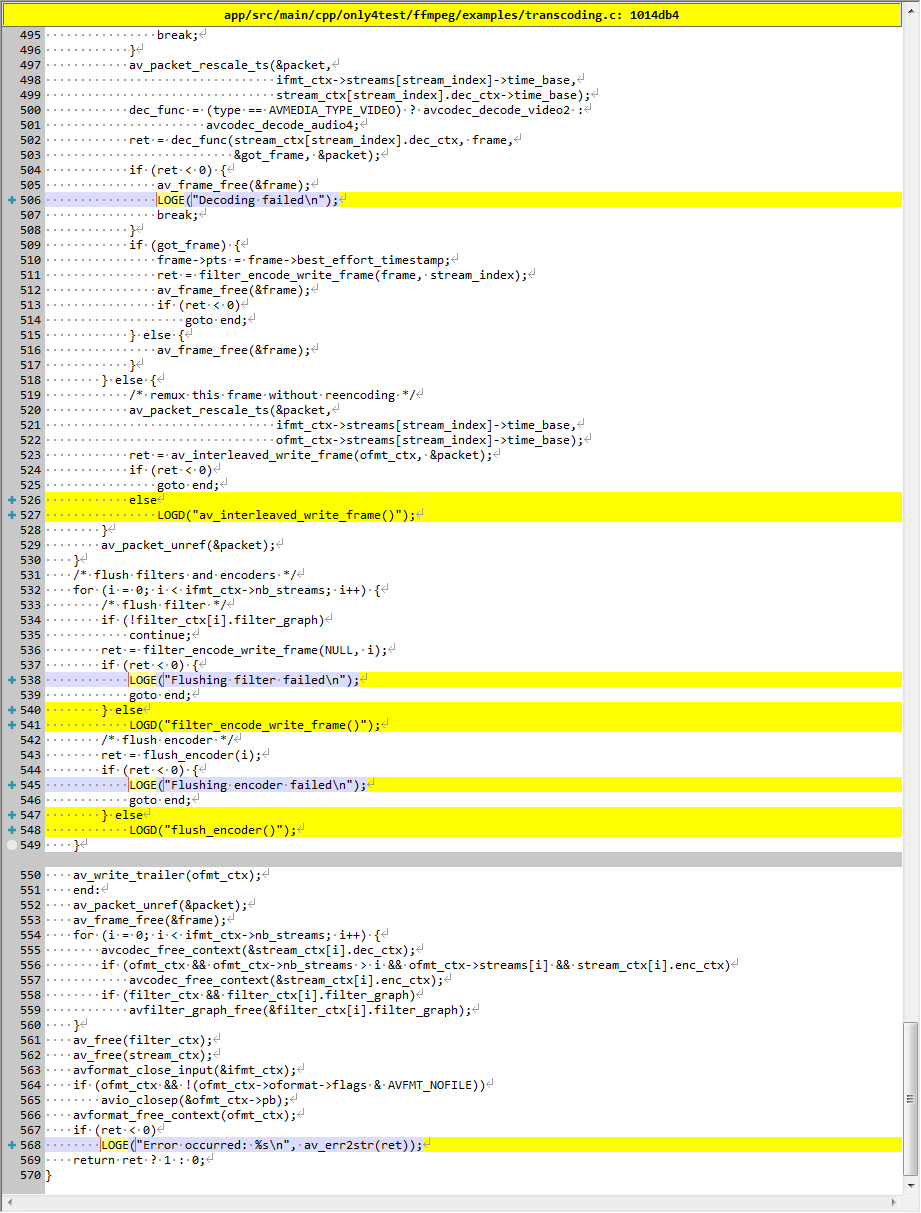

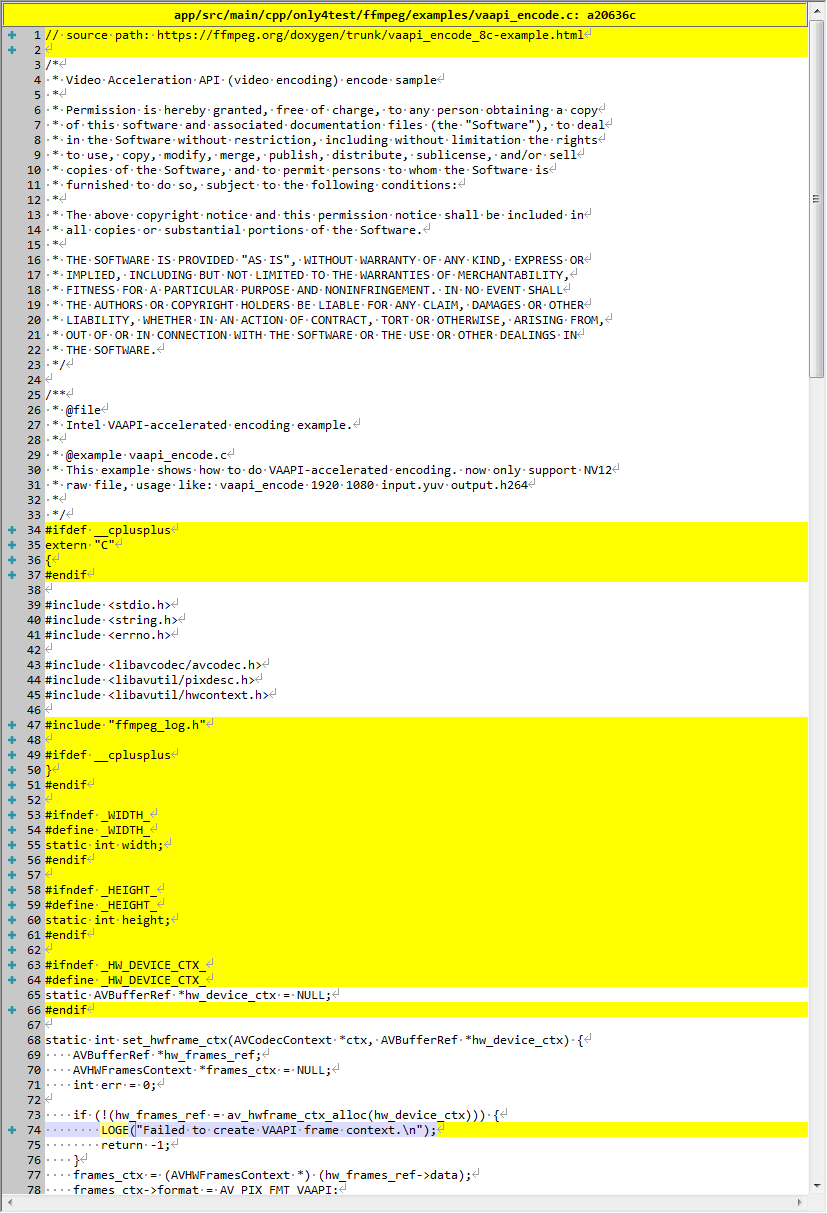

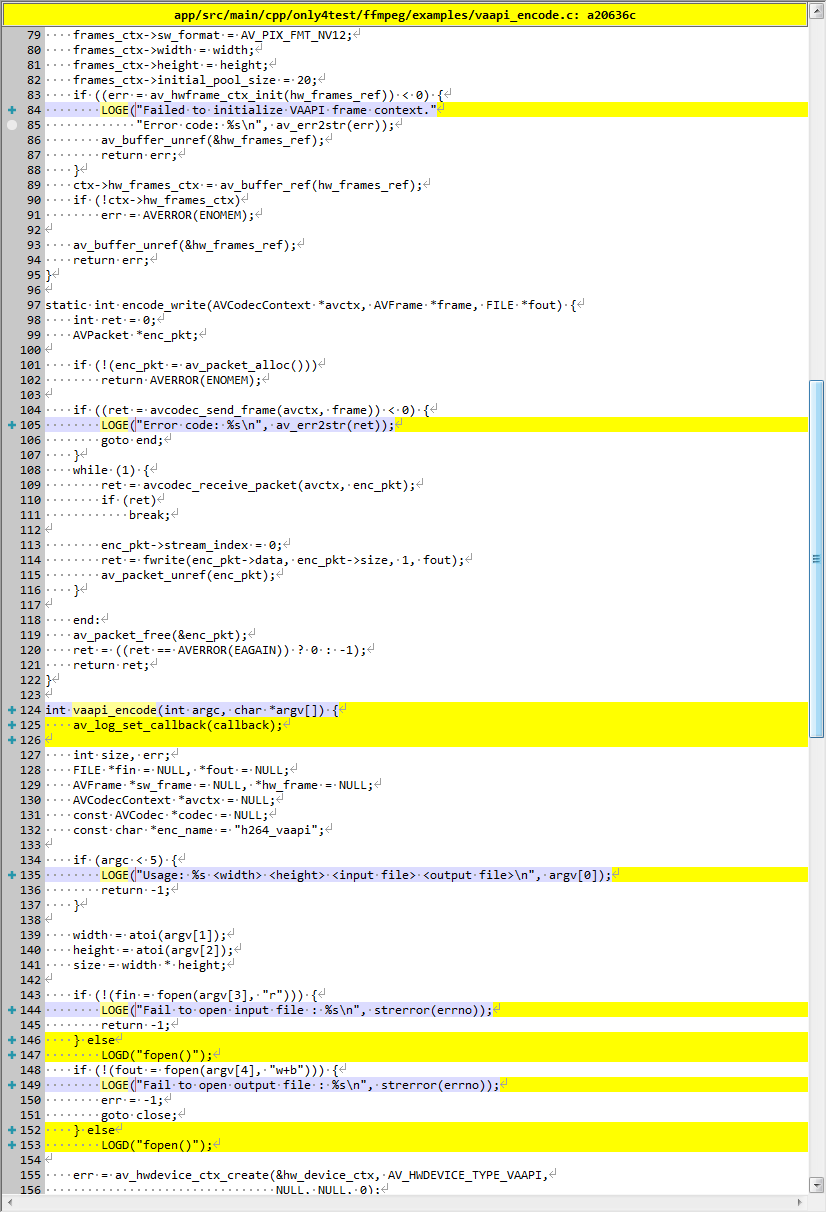

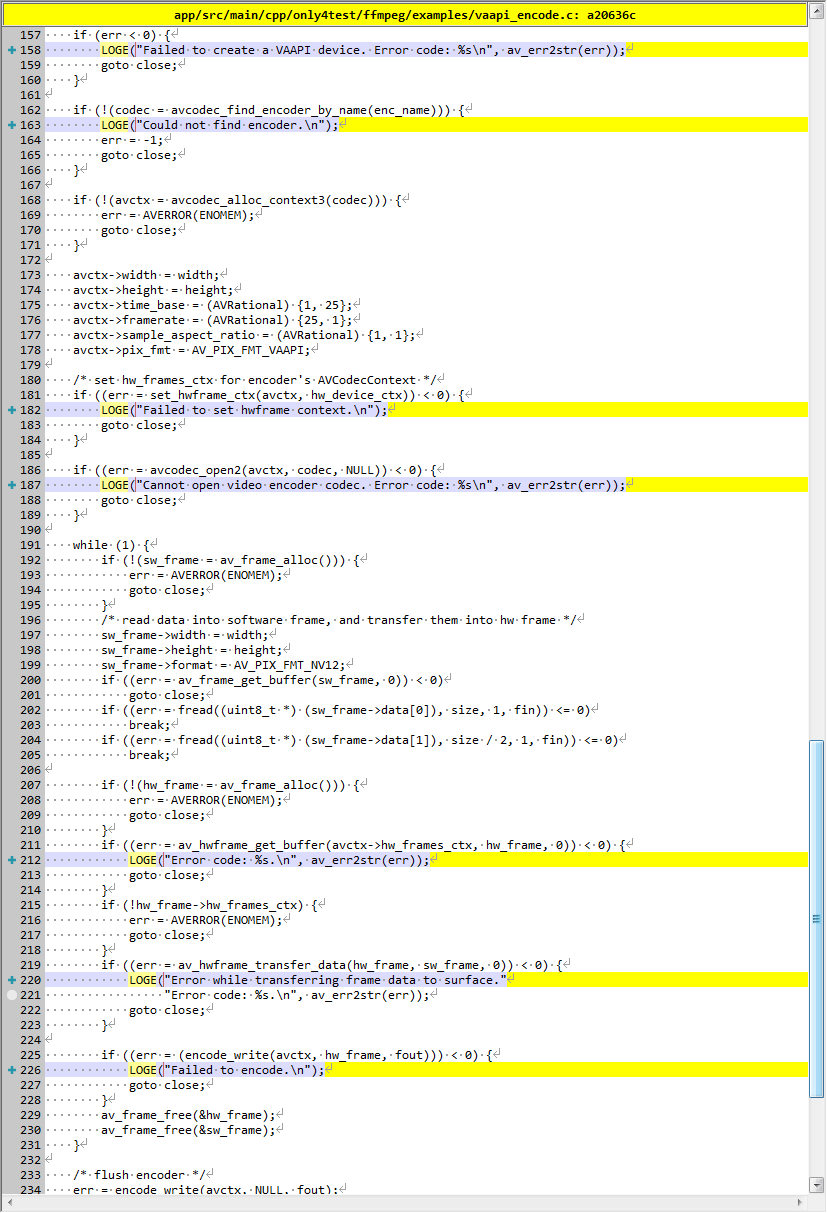

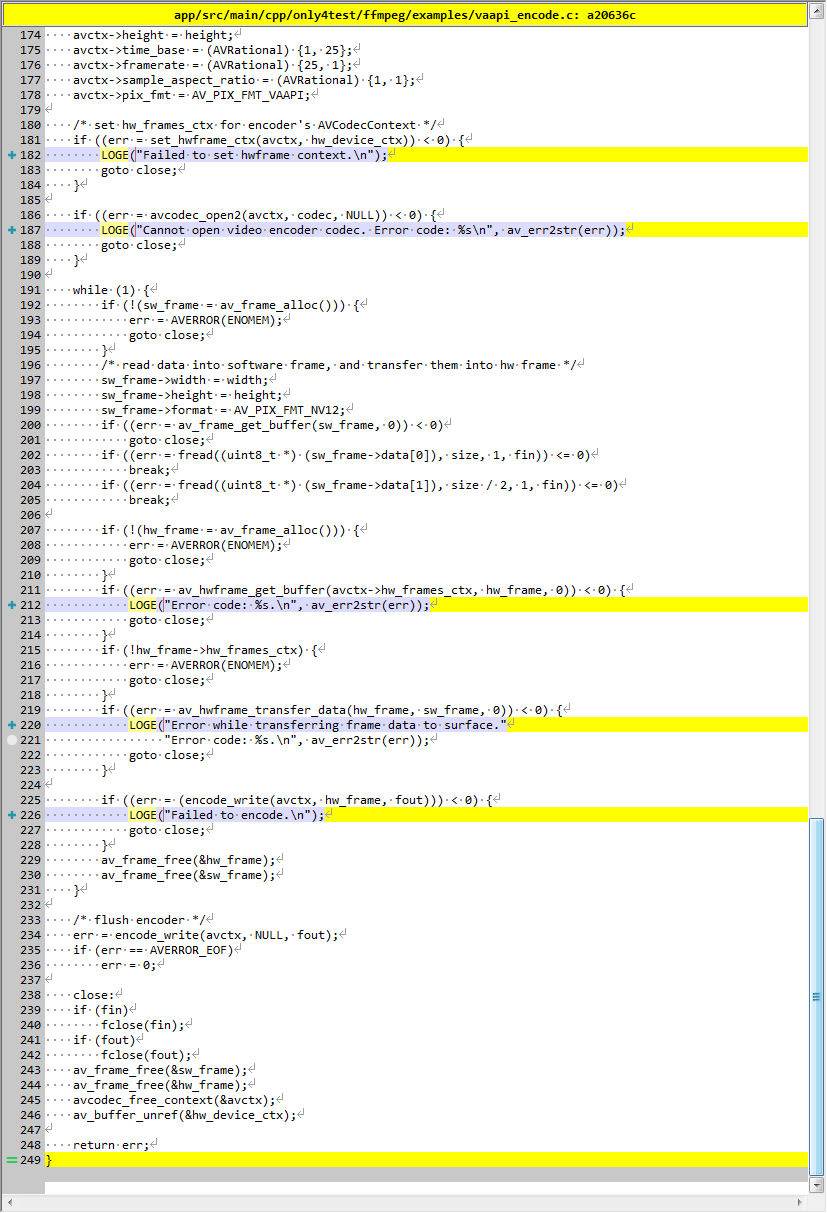

vaapi_encode TODO

Description

Intel VAAPI-accelerated encoding example.

This example shows how to do VAAPI-accelerated encoding. Currently only NV12 raw files are supported. Usage: vaapi_encode 1920 1080 input.yuv output.h264
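Since the input is a headerless NV12 file, the width and height on the command line are what determine where each frame begins and ends. The sketch below computes the size of one frame in bytes; `nv12_frame_size` is a hypothetical helper and assumes even dimensions and 8 bits per sample.

```c
#include <stddef.h>

/* Bytes in one 8-bit NV12 frame: a full-resolution Y plane followed by a
 * single plane of interleaved U and V samples at half resolution in both
 * directions. Assumes width and height are even. */
static size_t nv12_frame_size(size_t width, size_t height)
{
    size_t luma = width * height;
    size_t chroma = luma / 2; /* (width / 2) * (height / 2) samples for U and for V */

    return luma + chroma;
}
```

For the 1920 by 1080 case in the usage line this gives 3110400 bytes per frame, so an input file whose size is not a multiple of that value was probably produced with a different resolution or pixel format.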

Demo

TODO: not yet tested successfully. Both an arm64-v8a physical device and an x86_64 emulator report errors.

Source code changes

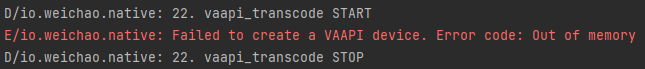

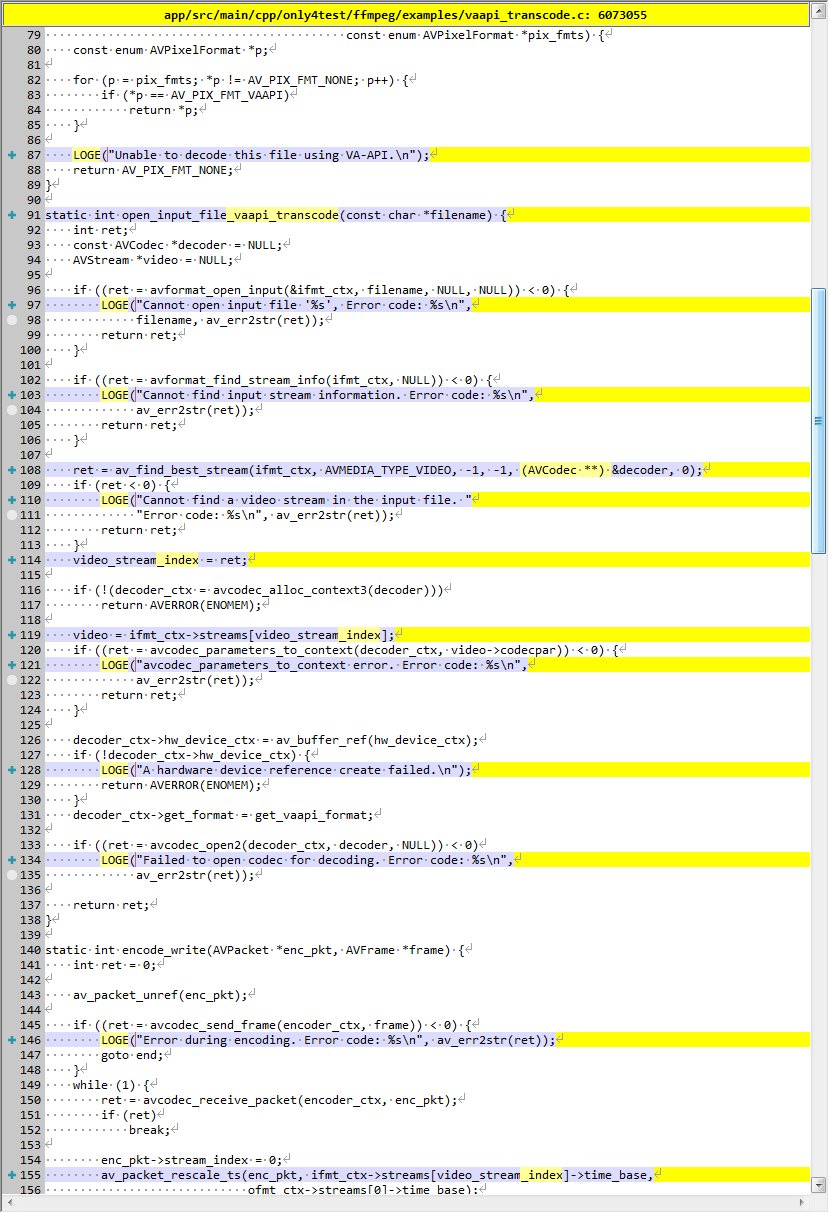

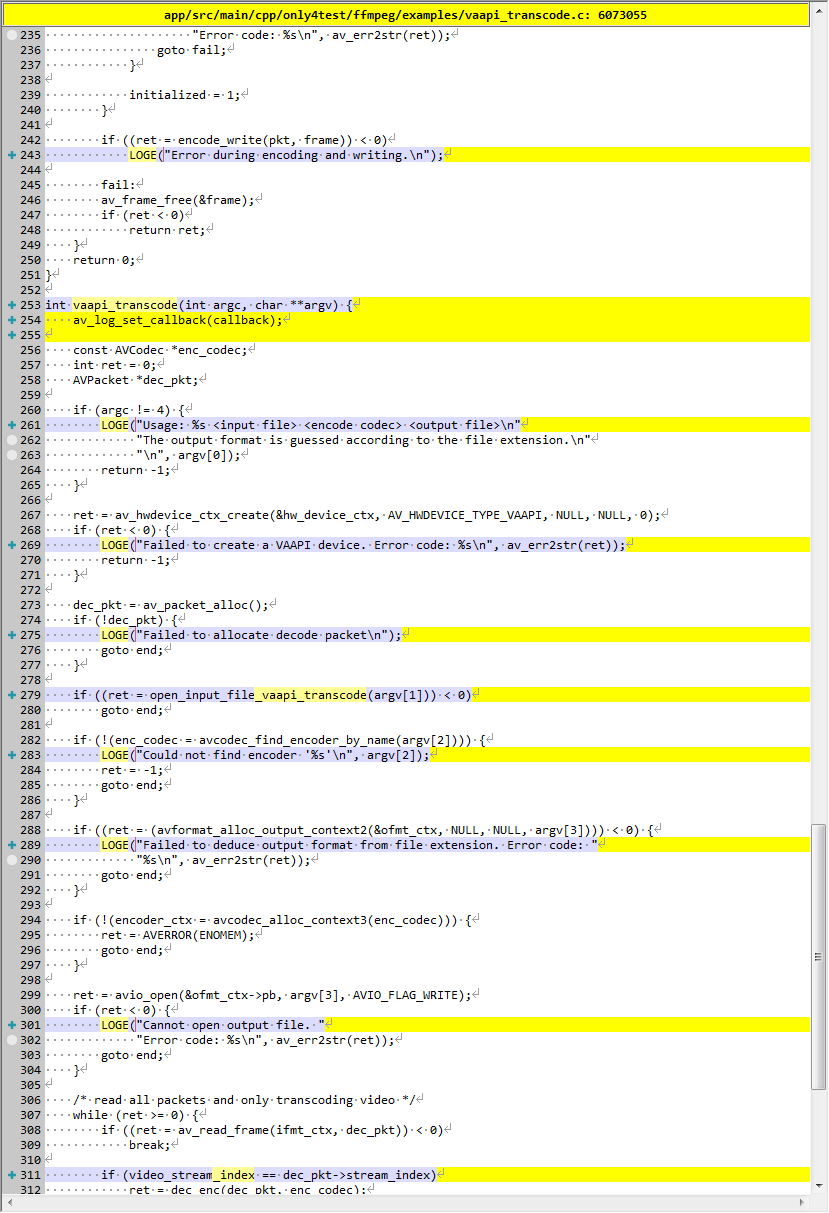

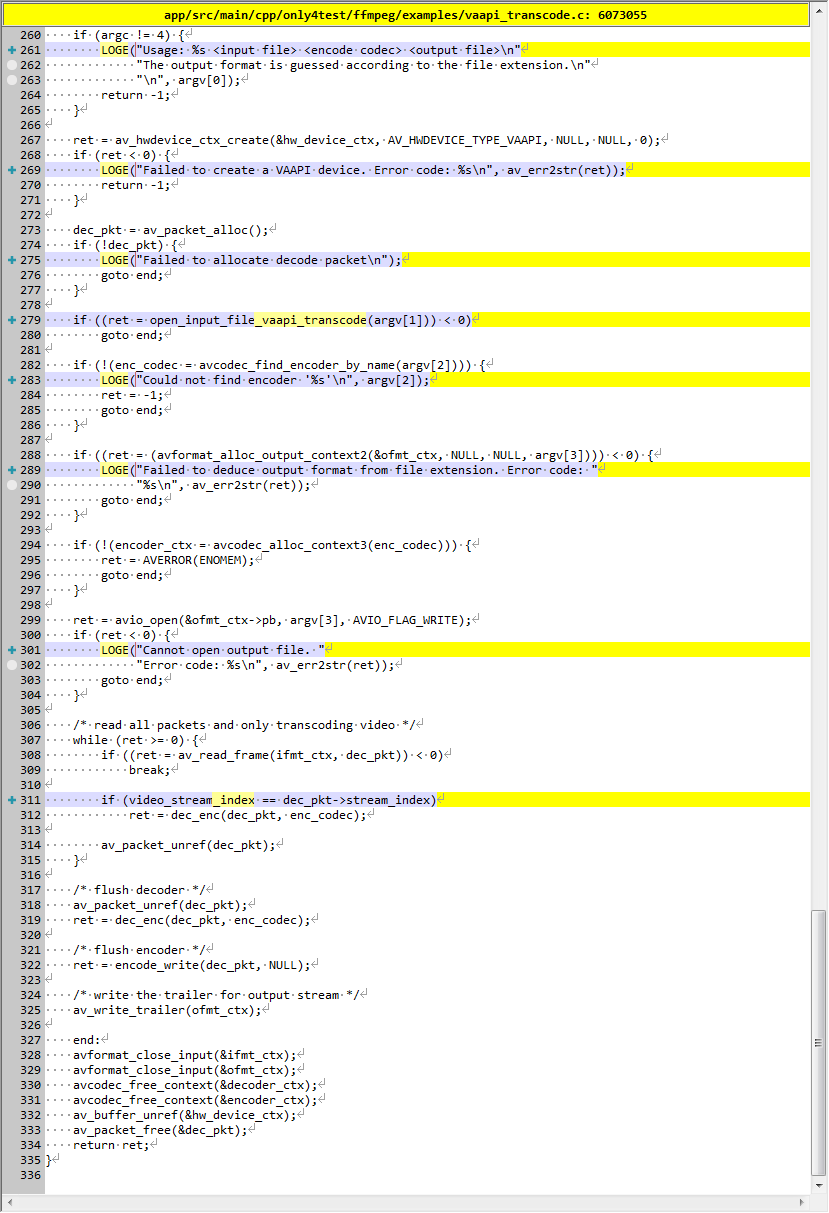

vaapi_transcode TODO

Description

Intel VAAPI-accelerated transcoding example.

This example shows how to do VAAPI-accelerated transcoding.

Usage: vaapi_transcode input_stream codec output_stream

e.g.: vaapi_transcode input.mp4 h264_vaapi output_h264.mp4

vaapi_transcode input.mp4 vp9_vaapi output_vp9.ivf

The output format is guessed according to the file extension.

Demo

TODO: not yet tested successfully. Both an arm64-v8a physical device and an x86_64 emulator report errors.

Source code changes

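Adding a new example

1. In the FFmpegExamples\app\src\main\cpp\only4test\ffmpeg\examples directory, create a .c or .cpp file (for example, example.c), copy the code into it, and adapt it following the existing examples.

2. In FFmpegExamples\app\src\main\cpp\native-lib.cpp, create a JNI function, for example:

(code listing omitted)

Add

(code listing omitted)

so that the JNI function can call the functions in example.c.

3. In the menu bar, click Build -> Refresh Linked C++ Projects.

4. In FFmpegExamples\app\src\main\java\io\weichao\ffmpegexamples\MainActivity.kt, create the native method that corresponds to the JNI function, for example:

(code listing omitted)

5. Call stringFromJNI() from within a method.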